Choosing a workflow orchestration tool affects both your team’s productivity and your pipelines’ reliability. Luigi and Prefect take distinct approaches to complex data workflows, each with its own strengths and ideal use cases. Knowing when to use each one requires a look at their design philosophies, technical capabilities, and operational characteristics.

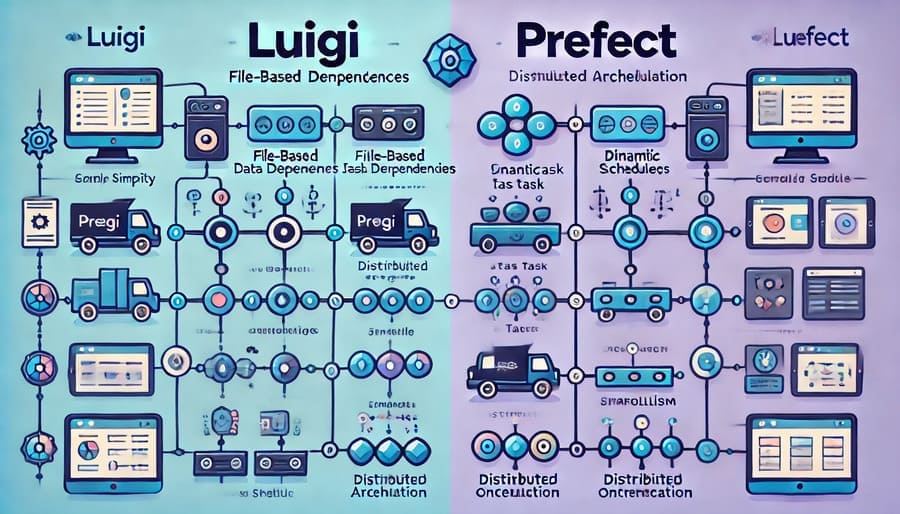

Before diving into specific scenarios, let’s examine what sets these tools apart conceptually:

Luigi, developed by Spotify and released in 2012, approaches workflow orchestration with a focus on simplicity and dependency resolution.

Key attributes:

- Task-dependency focused architecture

- Simple Python API with minimal abstractions

- Centralized scheduler model

- Built-in support for common data tools

- Visualization through a basic web UI

- Emphasis on explicit dependencies

Prefect, a newer entrant to the workflow management space, was designed to address many of the limitations of earlier tools like Luigi and Airflow.

Key attributes:

- Dynamic, data-aware workflows

- Rich Python API with decorators and context managers

- Distributed execution architecture

- Hybrid cloud-local deployments

- Comprehensive observability with a modern UI

- Emphasis on positive engineering: the framework takes on failure handling (retries, state tracking, logging) so that pipeline code can concentrate on the intended work

Luigi becomes the optimal choice in these scenarios:

When your workflows primarily consist of straightforward task dependencies:

- ETL jobs with predictable file inputs and outputs

- Batch processing workflows with deterministic steps

- Data pipelines with minimal conditional logic

Example: A daily data pipeline that extracts data from a database, transforms it through several processing steps, and loads the results into a data warehouse.

```python
# Luigi example for a simple ETL pipeline
import datetime

import luigi


# Hypothetical placeholder helpers; replace them with real extract, transform, and load logic.
def extract_from_source(date):
    return f"id,value\n1,{date}\n"


def transform(raw):
    return raw.upper()


def load_to_warehouse(rows):
    print(f"Loaded {len(rows.splitlines())} lines")


class ExtractData(luigi.Task):
    date = luigi.DateParameter()

    def output(self):
        return luigi.LocalTarget(f"data/extracted_{self.date}.csv")

    def run(self):
        # Extract logic here
        with self.output().open('w') as f:
            f.write(extract_from_source(self.date))


class TransformData(luigi.Task):
    date = luigi.DateParameter()

    def requires(self):
        return ExtractData(date=self.date)

    def output(self):
        return luigi.LocalTarget(f"data/transformed_{self.date}.csv")

    def run(self):
        # Transform logic using input from ExtractData
        with self.input().open('r') as in_f, self.output().open('w') as out_f:
            out_f.write(transform(in_f.read()))


class LoadData(luigi.Task):
    date = luigi.DateParameter()

    def requires(self):
        return TransformData(date=self.date)

    def output(self):
        # Marker file: its existence tells Luigi the load already ran for this date
        return luigi.LocalTarget(f"data/loaded_{self.date}.success")

    def run(self):
        # Load logic using input from TransformData
        with self.input().open('r') as f:
            load_to_warehouse(f.read())
        with self.output().open('w') as success_f:
            success_f.write('success')


if __name__ == "__main__":
    luigi.build([LoadData(date=datetime.date.today())], local_scheduler=True)
```

When you need workflow management but have limited resources for maintaining complex infrastructure:

- Small data teams with limited DevOps support

- Projects requiring lightweight deployment

- Environments where simplicity is valued over feature richness

Example: A research team needs to process datasets regularly but lacks dedicated infrastructure engineers. Luigi’s simpler deployment model with an optional central scheduler makes it easier to maintain.

When your pipelines revolve around file creation, processing, and dependencies:

- Data processing that generates intermediate files

- Workflows where tasks produce and consume file outputs

- Pipelines dealing with large file transformations

Example: A media processing pipeline that converts videos through multiple formats, validates outputs, and generates thumbnails—each step producing files that subsequent steps consume.
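The file-centric model rests on one rule: a step counts as done when its output file exists. The library-free sketch below illustrates that rule, together with an atomic write (temporary file, then rename) so that a crashed step never leaves a half-written output that looks complete. `run_if_missing` and the thumbnail path are hypothetical names chosen for illustration, not Luigi API.

```python
import os
import tempfile
from pathlib import Path
from typing import Callable


def run_if_missing(target: Path, produce: Callable[[], str]) -> bool:
    """Run `produce` only if `target` does not exist yet.

    Returns True if the step ran, False if it was skipped.
    """
    if target.exists():
        return False  # output already present: treat the step as complete

    target.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temporary file in the same directory, then rename it into
    # place, so a crash mid-write never leaves a partial file at `target`.
    fd, tmp_name = tempfile.mkstemp(dir=target.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as tmp:
            tmp.write(produce())
        os.replace(tmp_name, target)
    except BaseException:
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise
    return True


if __name__ == "__main__":
    workdir = Path(tempfile.mkdtemp())
    thumbnail = workdir / "thumbs" / "video_001.txt"
    print(run_if_missing(thumbnail, lambda: "thumbnail bytes"))  # first call runs
    print(run_if_missing(thumbnail, lambda: "thumbnail bytes"))  # second call skips
```

Rerunning the whole pipeline after a failure then repeats only the steps whose outputs are missing, which is the behavior that makes file-based dependencies attractive for long conversions.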

When your workflows are relatively stable and don’t need frequent changes:

- Established data pipelines with minimal evolution

- Processes with well-understood dependencies

- Scenarios where you don’t need advanced dynamic capabilities

Example: Monthly financial reporting pipelines that run the same series of transformations with little variation except for date parameters.
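Because such a pipeline varies only by date, a backfill reduces to generating one parameter value per period. A minimal sketch, assuming the pipeline is keyed on the first day of each month; `month_starts` is a hypothetical helper, not part of Luigi:

```python
import datetime
from typing import Iterator


def month_starts(start: datetime.date, end: datetime.date) -> Iterator[datetime.date]:
    """Yield the first day of every month from start's month through end's month."""
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        yield datetime.date(year, month, 1)
        # Advance one month, rolling over into the next year after December.
        month += 1
        if month > 12:
            year, month = year + 1, 1


if __name__ == "__main__":
    for run_date in month_starts(datetime.date(2023, 11, 15), datetime.date(2024, 2, 1)):
        print(run_date)
```

Each yielded date could then be passed as the `date` parameter of a task such as `LoadData` from the earlier ETL example.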

Prefect shines in these specific scenarios:

When your pipelines need to adapt based on data or runtime conditions:

- Workflows with complex branching logic

- Pipelines where task creation depends on data

- Processes requiring parametrization beyond simple inputs

Example: An ML training pipeline that dynamically creates preprocessing tasks based on data characteristics, runs parallel feature engineering paths, and conditionally executes model validation steps.

```python
# Prefect example for a dynamic, data-dependent workflow
from prefect import task, flow
import pandas as pd


@task
def extract_data(source_id: str) -> pd.DataFrame:
    # Extract data logic; a small hard-coded frame stands in for a real source
    return pd.DataFrame({
        "column_a": [1.0, None, 3.0],
        "categorical_col": ["a", "b", "a"],
    })


@task
def detect_data_features(data: pd.DataFrame) -> list:
    # Analyze data and return required processing steps
    required_transformations = []
    if data['column_a'].isnull().any():
        required_transformations.append("handle_missing_values")
    if data['categorical_col'].nunique() > 20:
        required_transformations.append("reduce_cardinality")
    return required_transformations


@task
def handle_missing_values(data: pd.DataFrame) -> pd.DataFrame:
    # Handle missing values; median imputation is one illustrative choice
    return data.fillna({"column_a": data["column_a"].median()})


@task
def reduce_cardinality(data: pd.DataFrame) -> pd.DataFrame:
    # Reduce cardinality: keep the 20 most frequent categories, bucket the rest as "other"
    top = data["categorical_col"].value_counts().nlargest(20).index
    bucketed = data["categorical_col"].where(data["categorical_col"].isin(top), "other")
    return data.assign(categorical_col=bucketed)


@task
def train_model(data: pd.DataFrame) -> dict:
    # Train model; a summary dict stands in for a real model object
    return {"n_rows": len(data), "columns": list(data.columns)}


@flow
def ml_pipeline(source_id: str):
    # Extract data
    data = extract_data(source_id)

    # Dynamically determine required transformations
    transformations = detect_data_features(data)

    # Apply transformations conditionally
    if "handle_missing_values" in transformations:
        data = handle_missing_values(data)
    if "reduce_cardinality" in transformations:
        data = reduce_cardinality(data)

    # Train model
    model = train_model(data)
    return model


if __name__ == "__main__":
    ml_pipeline("customer_data")
```
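"Task creation depends on data" can also mean fan-out: the number of parallel branches is only known once the data has been inspected. The sketch below shows that pattern with the standard library's `ThreadPoolExecutor` rather than Prefect itself, so it runs without an orchestrator; `engineer_features`, `fan_out`, and the column names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def engineer_features(column: str, values: List[float]) -> Dict[str, float]:
    """Toy feature step: summarize one numeric column."""
    return {f"{column}_mean": sum(values) / len(values), f"{column}_max": max(values)}


def fan_out(dataset: Dict[str, List[float]]) -> Dict[str, float]:
    """Create one unit of work per column found in the data, then merge results."""
    features: Dict[str, float] = {}
    with ThreadPoolExecutor() as pool:
        # The number of submitted jobs is decided by the data, not by the code.
        futures = [pool.submit(engineer_features, name, values)
                   for name, values in dataset.items()]
        for future in futures:
            features.update(future.result())
    return features


if __name__ == "__main__":
    print(fan_out({"amount": [10.0, 20.0, 30.0], "age": [25.0, 35.0]}))
```

In Prefect, the analogous step is typically to submit or map a task over the items, which has the added benefit that each branch is tracked as its own task run.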

When you need comprehensive monitoring, logging, and introspection:

- Production-critical data pipelines

- Workflows requiring detailed execution history

- Scenarios demanding rich failure information

Example: A financial data processing pipeline requires detailed audit trails, execution monitoring, and alerting capabilities to meet compliance requirements.
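At its core, an audit trail is a per-step record of what ran, when it started, how long it took, and how it ended. A minimal, library-free sketch of that record keeping follows; the `audited` decorator and the in-memory `AUDIT_LOG` list are hypothetical stand-ins for what an orchestrator's backend stores, not Prefect's API.

```python
import functools
import time
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

AUDIT_LOG: List[Dict[str, Any]] = []  # stand-in for a durable run-history store


def audited(func: Callable) -> Callable:
    """Record start time, duration, final state, and any error for each call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        record = {"step": func.__name__,
                  "started_at": datetime.now(timezone.utc).isoformat()}
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
            record["state"] = "Completed"
            return result
        except Exception as exc:
            record["state"] = "Failed"
            record["error"] = repr(exc)
            raise  # keep the failure visible to the caller
        finally:
            record["duration_s"] = time.perf_counter() - start
            AUDIT_LOG.append(record)
    return wrapper


@audited
def reconcile(balance: float, adjustment: float) -> float:
    if adjustment < 0:
        raise ValueError("negative adjustment")
    return balance + adjustment


if __name__ == "__main__":
    reconcile(100.0, 5.0)
    try:
        reconcile(100.0, -1.0)
    except ValueError:
        pass
    for entry in AUDIT_LOG:
        print(entry["step"], entry["state"], entry.get("error"))
```

The value of an orchestrator with rich observability is that this bookkeeping is done for every task without decorators like this one scattered through the pipeline code.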

When your workflows need to run across distributed environments:

- Pipelines spanning multiple compute environments

- Workflows requiring elastic scaling

- Processes that need to integrate with cloud services

Example: A data science team runs processing partly on local development environments, partly on dedicated compute clusters, and needs seamless transitions between environments with minimal configuration changes.

When your pipeline requirements change frequently:

- Experimental data science workflows

- Processes under active development

- Scenarios requiring frequent iterations

Example: A growing analytics team frequently updates their feature engineering pipeline as they discover new business requirements and data patterns.

Understanding the technical distinctions helps make an informed decision:

Luigi’s approach to workflow definition:

- Explicit task classes with `requires()` and `output()` methods

- File-based dependency management by default

- Central directed acyclic graph (DAG) constructed at runtime

Prefect’s approach to workflow definition:

- Flexible task decorators and functional style

- Data-based dependency tracking

- Dynamic, lazy DAG construction
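Whichever API declares the dependencies, the scheduler's job is the same: order the tasks so that each runs after everything it requires. A minimal sketch using Python's standard `graphlib` module (Python 3.9+); the `REQUIRES` mapping is a hypothetical stand-in for what `requires()` methods or data passed between task calls would declare.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each task maps to the set of tasks it requires (hypothetical example graph).
REQUIRES: Dict[str, Set[str]] = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def execution_order(requires: Dict[str, Set[str]]) -> List[str]:
    """Return one valid run order; raises graphlib.CycleError on circular dependencies."""
    return list(TopologicalSorter(requires).static_order())


if __name__ == "__main__":
    print(execution_order(REQUIRES))
```

`transform` and `validate` have no dependency on each other, so either may come first; that independence is also what allows a scheduler to run them in parallel.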

Luigi’s approach to execution:

- Central scheduler model

- Worker-based execution

- Simpler deployment, more manual scaling

Prefect’s approach to execution:

- Distributed execution architecture

- Agent-based deployment model (workers and work pools in newer Prefect releases)

- Cloud or self-hosted orchestration options

Luigi’s approach to failure handling:

- Basic retry mechanisms

- Manual failure investigation via UI

- Focus on task completion status

Prefect’s approach to failure handling:

- Sophisticated retry and state handlers

- Rich failure context preservation

- Proactive failure notifications
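Retries are the most common failure-handling primitive in both tools. The sketch below shows the general mechanism (bounded attempts with exponential backoff) in plain Python; it is not either library's implementation, and `retry`, `max_attempts`, and `base_delay` are names chosen for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry(func: Callable[[], T], max_attempts: int = 3, base_delay: float = 0.1,
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `func` until it succeeds or `max_attempts` is reached.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky() -> str:
        calls["count"] += 1
        if calls["count"] < 3:
            raise ConnectionError("transient failure")
        return "ok"

    print(retry(flaky), "after", calls["count"], "attempts")
```

The `sleep` parameter is injectable so the backoff schedule can be checked in tests without actually waiting.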

Luigi’s approach to the development experience:

- Simple Python API with minimal abstraction

- Straightforward debugging through standard Python tools

- Limited testing utilities

Prefect’s approach to the development experience:

- Context managers for flow and task execution

- Local testing and execution utilities

- Interactive development tools

When evaluating these tools for your organization, consider:

- Workflow Complexity

  - Simple, file-based pipelines → Luigi

  - Dynamic, data-dependent workflows → Prefect

  - Complex conditional logic → Prefect

  - Straightforward task sequences → Either, with Luigi being simpler

- Team Expertise

  - Familiarity with basic Python → Luigi

  - Modern Python practices (decorators, context managers) → Prefect

  - Limited DevOps resources → Luigi

  - Strong infrastructure team → Either, with Prefect leveraging it better

- Operational Requirements

  - Basic workflow visibility → Luigi

  - Comprehensive monitoring → Prefect

  - Multi-environment execution → Prefect

  - Simple deployment → Luigi

- Growth Trajectory

  - Stable, established processes → Luigi may suffice

  - Rapidly expanding data capabilities → Prefect offers more room to grow

  - Evolution toward event-driven architecture → Prefect
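The checklist above can be turned into a rough tally. The sketch below is only a way to make the trade-offs explicit for a team discussion; the criterion names and the equal weighting are assumptions, not a validated scoring model.

```python
from typing import Dict, Iterable

# Which tool each criterion from the checklist points toward (names are illustrative).
LEANS_TOWARD: Dict[str, str] = {
    "file_based_pipelines": "Luigi",
    "limited_devops": "Luigi",
    "basic_visibility": "Luigi",
    "stable_processes": "Luigi",
    "dynamic_workflows": "Prefect",
    "complex_conditionals": "Prefect",
    "comprehensive_monitoring": "Prefect",
    "multi_environment": "Prefect",
    "event_driven": "Prefect",
}


def tally(needs: Iterable[str]) -> Dict[str, int]:
    """Count how many of a team's stated needs point to each tool."""
    scores = {"Luigi": 0, "Prefect": 0}
    for need in needs:
        if need not in LEANS_TOWARD:
            raise KeyError(f"unknown criterion: {need}")
        scores[LEANS_TOWARD[need]] += 1
    return scores


if __name__ == "__main__":
    print(tally(["file_based_pipelines", "limited_devops", "comprehensive_monitoring"]))
```

A close score is itself informative: it suggests that either tool would work and that team familiarity should probably decide.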

Many organizations start with Luigi and later consider migrating to Prefect. The following signs suggest it may be time to make the move:

- Complexity Thresholds:

  - Your workflows involve increasingly complex conditionals

  - You’re building workarounds to handle dynamic behavior

  - Task dependencies become difficult to express clearly

- Operational Pain Points:

  - Failure investigation is becoming time-consuming

  - Scaling Luigi workers is challenging

  - Observability needs have outgrown the UI

- Team Friction:

  - Developers find Luigi’s API limiting

  - Integrating with modern cloud services is difficult

  - Testing workflows becomes cumbersome

If migrating from Luigi to Prefect:

- Start with New Workflows:

  - Begin by implementing new pipelines in Prefect

  - Gain expertise before tackling existing workflows

- Incremental Conversion:

  - Convert peripheral workflows first

  - Move central, critical pipelines only after establishing patterns

- Parallel Operation:

  - Run both systems during transition

  - Compare reliability and performance
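During parallel operation, one concrete way to compare the two systems is to check that both produce byte-identical outputs for the same run. A minimal sketch, assuming each system writes its results into its own directory with matching file names; the function names and the directory layout are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def checksums(directory: Path) -> Dict[str, str]:
    """Map each file's path (relative to `directory`) to its SHA-256 digest."""
    return {
        path.relative_to(directory).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(directory.rglob("*")) if path.is_file()
    }


def diff_outputs(legacy_dir: Path, candidate_dir: Path) -> List[str]:
    """List files that are missing on either side or whose contents differ."""
    legacy, candidate = checksums(legacy_dir), checksums(candidate_dir)
    return sorted(
        name for name in legacy.keys() | candidate.keys()
        if legacy.get(name) != candidate.get(name)
    )


if __name__ == "__main__":
    import tempfile
    root = Path(tempfile.mkdtemp())
    for system, report in (("luigi", "total=100\n"), ("prefect", "total=101\n")):
        (root / system).mkdir()
        (root / system / "report.csv").write_text(report)
        (root / system / "summary.txt").write_text("ok\n")
    print(diff_outputs(root / "luigi", root / "prefect"))  # lists report.csv only
```

An empty result over several consecutive runs is reasonable evidence that a converted pipeline is ready to replace the original; outputs containing timestamps or random identifiers would need normalizing before comparison.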

A media company processes thousands of video assets daily through a predictable series of steps:

- File ingestion

- Format conversion

- Quality verification

- Metadata extraction

- Thumbnail generation

Luigi’s file-based dependency model perfectly fits this workflow, where each step produces files that subsequent steps consume. The simple deployment model allows the small engineering team to maintain the system with minimal overhead.

A fintech company processes transaction data through a complex pipeline:

- Data collection from multiple sources (APIs, databases, files)

- Dynamic validation based on source characteristics

- Conditional enrichment depending on transaction types

- Custom aggregations for different client needs

- Report generation based on client configurations

Prefect’s dynamic workflow capabilities handle the variable nature of this process, while its rich observability features provide the audit trail required in financial applications.

The choice between Luigi and Prefect ultimately depends on your specific needs:

- Choose Luigi when simplicity, file-based workflows, and straightforward dependency management are your priorities. It excels in stable, predictable pipelines with clear inputs and outputs.

- Choose Prefect when you need dynamic, data-aware workflows with comprehensive observability and distributed execution capabilities. It shines in complex, evolving pipelines that require advanced orchestration features.

Both tools have their place in the modern data stack. By understanding their distinct strengths and aligning them with your requirements, you can select the workflow orchestration tool that will best support your data engineering journey.
