AWS (Amazon Web Services)

Amazon Web Services (AWS) remains the largest cloud provider, holding approximately one-third of the global cloud infrastructure market. For data engineers, AWS offers a broad ecosystem of services that supports everything from simple data storage to complex, real-time analytics pipelines. This guide explores how AWS is shaping the field of data engineering and why it remains a common platform of choice for organizations building scalable data infrastructure.

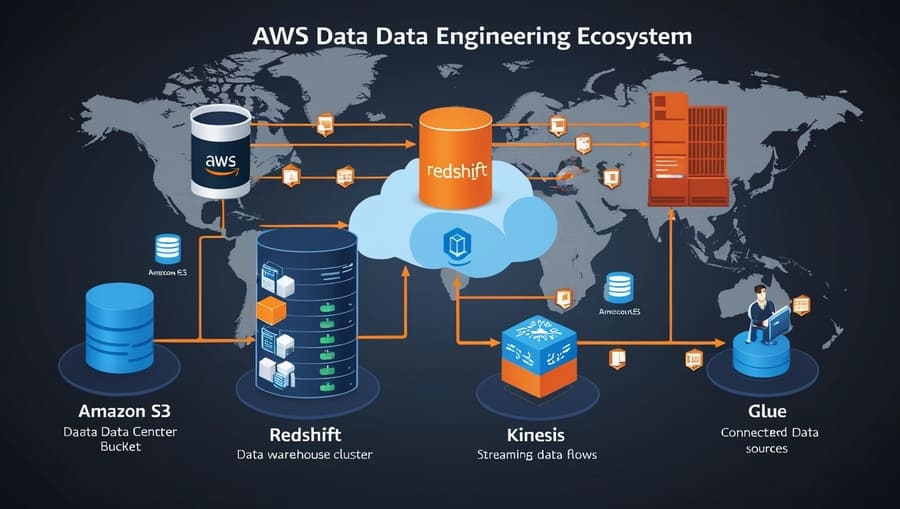

AWS provides a remarkably complete set of tools that allow data engineers to construct end-to-end pipelines within a single ecosystem. This integration eliminates many of the compatibility issues that arise when piecing together solutions from multiple vendors, creating a more seamless development experience.

Amazon S3 (Simple Storage Service) serves as the cornerstone of many AWS-based data architectures. As an object storage service offering industry-leading scalability and durability (99.999999999%), S3 provides the ideal foundation for data lakes. Key features that make S3 indispensable for data engineers include:

- Storage Classes: From frequently accessed hot data (S3 Standard) to rarely accessed archives (S3 Glacier Deep Archive), engineers can optimize costs based on access patterns.

- Versioning and Lifecycle Policies: Automated management of data throughout its lifecycle, ensuring compliance while controlling costs.

- Event Notifications: Trigger processing workflows automatically when new data arrives, enabling real-time pipeline orchestration.

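- S3 Select and Glacier Select: Query capabilities that retrieve a subset of an object's data without downloading the entire object, which cuts transfer volume and latency for selective reads. AWS closed S3 Select to new customers in 2024, so new projects typically use Amazon Athena for this kind of query.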
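The storage class and lifecycle features above can be combined in a single lifecycle rule. The following is a minimal sketch, assuming a hypothetical `raw/` prefix, a hypothetical rule ID, and illustrative 30-day and 180-day thresholds. The builder function runs without AWS access. `apply_lifecycle` assumes `boto3` is installed and credentials are configured.

```python
import json


def build_lifecycle_configuration(prefix: str) -> dict:
    """Return a lifecycle configuration that tiers objects under `prefix`.

    The rule ID and day thresholds are illustrative assumptions.
    """
    return {
        "Rules": [
            {
                "ID": "tier-raw-data",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    # Move to infrequent access after 30 days.
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    # Move to the archive tier after 180 days.
                    {"Days": 180, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


def apply_lifecycle(bucket: str, prefix: str) -> None:
    """Apply the rule to a bucket; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above runs without it

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=build_lifecycle_configuration(prefix),
    )


if __name__ == "__main__":
    print(json.dumps(build_lifecycle_configuration("raw/"), indent=2))
```

Keeping the configuration in a plain function makes it easy to review and unit test before it touches a real bucket.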

For more structured data needs, Amazon RDS provides managed relational databases across multiple engines (including PostgreSQL, MySQL, SQL Server, Oracle, and MariaDB), while Amazon DynamoDB offers a fully managed NoSQL database designed for single-digit millisecond latency at scale.

AWS excels in providing scalable processing options for data transformation and analysis:

Amazon Redshift remains the flagship data warehousing service, offering:

- Petabyte-scale performance

- Columnar storage optimization

- Advanced query optimization

- Integration with business intelligence tools

- Redshift Spectrum for querying data directly in S3

For stream processing, Amazon Kinesis provides real-time data collection, processing, and analysis capabilities. The Kinesis family includes:

- Kinesis Data Streams: for real-time data ingestion

- Kinesis Data Firehose (since renamed Amazon Data Firehose): for loading streaming data into storage services

- Kinesis Data Analytics (since renamed Amazon Managed Service for Apache Flink): for processing streaming data using SQL or Apache Flink

- Kinesis Video Streams: specialized service for video data
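As a rough illustration of ingestion into Kinesis Data Streams, the sketch below builds the arguments for the `put_record` call. The stream name, event shape, and the choice of `user_id` as partition key are assumptions made for the example. `send_event` assumes `boto3` and configured credentials.

```python
import json


def build_put_record_args(stream_name: str, event: dict, key_field: str) -> dict:
    """Build keyword arguments for the Kinesis `put_record` call."""
    return {
        "StreamName": stream_name,
        # Kinesis expects the payload as bytes.
        "Data": json.dumps(event).encode("utf-8"),
        # Records sharing a partition key are routed to the same shard.
        "PartitionKey": str(event[key_field]),
    }


def send_event(stream_name: str, event: dict, key_field: str) -> dict:
    """Send one event to the stream; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above runs without it

    kinesis = boto3.client("kinesis")
    return kinesis.put_record(**build_put_record_args(stream_name, event, key_field))


if __name__ == "__main__":
    # Hypothetical clickstream event.
    args = build_put_record_args(
        "clickstream", {"user_id": 42, "action": "view"}, "user_id"
    )
    print(args["PartitionKey"], args["Data"])
```

A high-cardinality partition key such as a user ID tends to spread records more evenly across shards than a low-cardinality one such as a country code.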

The Amazon EMR (Elastic MapReduce) service offers managed Hadoop, Spark, HBase, Presto, and Hive clusters, providing the horsepower needed for processing massive datasets while simplifying cluster management.

AWS Glue simplifies ETL workloads with its serverless architecture:

- Automated schema discovery and catalog creation

- Visual ETL job designer and notebook interfaces

- Support for Python and Scala with Spark

- Job bookmarking for efficient incremental processing

- Built-in job scheduling and monitoring
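Job bookmarks are switched on per run through the `--job-bookmark-option` job argument. The sketch below shows one way to start an incremental run. The job name and the `--source_path` parameter are hypothetical, and `start_incremental_run` assumes `boto3`, credentials, and an existing Glue job.

```python
def build_job_run_args(job_name: str, source_path: str) -> dict:
    """Build keyword arguments for the Glue `start_job_run` call."""
    return {
        "JobName": job_name,
        "Arguments": {
            # Ask Glue to track processed data and skip it on later runs.
            "--job-bookmark-option": "job-bookmark-enable",
            # Hypothetical custom parameter read by the job script.
            "--source_path": source_path,
        },
    }


def start_incremental_run(job_name: str, source_path: str) -> str:
    """Start the job and return its run ID; needs boto3 and credentials."""
    import boto3  # imported lazily so the builder above runs without it

    glue = boto3.client("glue")
    response = glue.start_job_run(**build_job_run_args(job_name, source_path))
    return response["JobRunId"]


if __name__ == "__main__":
    print(build_job_run_args("nightly-orders-etl", "s3://example-bucket/raw/orders/"))
```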

For workflow orchestration, AWS Step Functions allows engineers to coordinate multiple AWS services into serverless workflows through a visual interface. This service makes it easier to build and monitor multi-step data processing pipelines with automatic error handling and retry logic.
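The retry and error-handling behavior is declared in the state machine definition itself, written in Amazon States Language. Here is a minimal sketch of a one-task pipeline with a retry policy and a failure handler. The state names, retry settings, and the two Lambda ARNs are placeholders rather than values from any real deployment.

```python
import json


def build_state_machine(transform_arn: str, notify_arn: str) -> dict:
    """Return an Amazon States Language definition as a Python dict."""
    return {
        "Comment": "Transform step with retry and a failure notification",
        "StartAt": "TransformData",
        "States": {
            "TransformData": {
                "Type": "Task",
                "Resource": transform_arn,
                # Retry failed tasks up to 3 times with exponential backoff.
                "Retry": [
                    {
                        "ErrorEquals": ["States.TaskFailed"],
                        "IntervalSeconds": 5,
                        "MaxAttempts": 3,
                        "BackoffRate": 2.0,
                    }
                ],
                # After retries are exhausted, route any error to the handler.
                "Catch": [
                    {"ErrorEquals": ["States.ALL"], "Next": "NotifyFailure"}
                ],
                "End": True,
            },
            "NotifyFailure": {
                "Type": "Task",
                "Resource": notify_arn,
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    definition = build_state_machine(
        "arn:aws:lambda:us-east-1:123456789012:function:transform",  # placeholder
        "arn:aws:lambda:us-east-1:123456789012:function:notify",  # placeholder
    )
    print(json.dumps(definition, indent=2))
```

The JSON printed by this script is the kind of document that would be supplied as the state machine definition when creating it.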

AWS has pioneered the serverless approach, which is transforming how data engineers work by eliminating infrastructure management:

AWS Lambda enables event-driven data processing without provisioning servers. Data engineers can:

- Process data automatically when new files arrive in S3

- Transform streaming data from Kinesis

- Create APIs that trigger data processing workflows

- Schedule periodic data quality checks or transformations
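The first use case above, reacting to new files in S3, comes down to reading bucket and key names out of the notification event. The following is a minimal sketch of such a handler. The processing step is only a `print` placeholder, and the sample event in the usage section is trimmed to the fields the code reads.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event: dict) -> list:
    """Return (bucket, key) pairs from an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context):
    """Lambda entry point: report each newly created object."""
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        # Placeholder for real processing (validation, transformation, ...).
        print(f"New object: s3://{bucket}/{key}")
    return {"processed": len(objects)}


if __name__ == "__main__":
    sample_event = {
        "Records": [
            {
                "s3": {
                    "bucket": {"name": "example-bucket"},
                    "object": {"key": "raw/2024/my+file.csv"},
                }
            }
        ]
    }
    print(handler(sample_event, None))
```

Separating the parsing from the handler keeps the logic testable locally without deploying to Lambda.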

Amazon Athena provides a serverless query service for analyzing data directly in S3 using standard SQL. With no infrastructure to manage, engineers can focus on writing queries rather than maintaining servers.
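Submitting a query to Athena programmatically needs three things: the SQL text, a database, and an S3 location for results. The sketch below assembles those arguments for `start_query_execution`. The database, table, column, and bucket names are hypothetical, and `run_query` assumes `boto3` and configured credentials.

```python
def build_query_args(database: str, table: str, output_location: str) -> dict:
    """Build keyword arguments for the Athena `start_query_execution` call."""
    # Hypothetical aggregation over an `event_type` column.
    query = (
        f'SELECT event_type, COUNT(*) AS events FROM "{table}" '
        "GROUP BY event_type"
    )
    return {
        "QueryString": query,
        "QueryExecutionContext": {"Database": database},
        # Athena writes result files to this S3 location.
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def run_query(database: str, table: str, output_location: str) -> str:
    """Submit the query and return its execution ID; needs boto3."""
    import boto3  # imported lazily so the builder above runs without it

    athena = boto3.client("athena")
    response = athena.start_query_execution(
        **build_query_args(database, table, output_location)
    )
    return response["QueryExecutionId"]


if __name__ == "__main__":
    print(build_query_args("analytics", "events", "s3://example-bucket/athena-results/"))
```

The call is asynchronous: it returns an execution ID, and the caller checks the query status separately before reading results.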

The combination of these serverless options allows for the creation of highly scalable, cost-efficient data pipelines that automatically adapt to changing workloads.

AWS seamlessly extends data pipelines into the machine learning domain:

Amazon SageMaker provides a comprehensive platform for building, training, and deploying machine learning models at scale. For data engineers, this means the ability to incorporate ML predictions directly into data workflows.

Amazon Comprehend, Amazon Rekognition, Amazon Transcribe, and Amazon Translate offer pre-built AI capabilities that can be integrated into data pipelines to extract insights from unstructured data, including text, images, video, and audio.

AWS provides robust security capabilities that are crucial for data engineering teams handling sensitive information:

- AWS IAM (Identity and Access Management) enables fine-grained access control across all services

- AWS KMS (Key Management Service) provides centralized control over encryption keys

- AWS CloudTrail delivers audit logging for compliance and troubleshooting

- VPC (Virtual Private Cloud) enables network isolation for sensitive data workloads

- AWS Lake Formation simplifies setting up secure data lakes with centralized permissions
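To make fine-grained access control concrete, here is a minimal sketch of an IAM policy document that grants read-only access to a single S3 prefix. The bucket name, prefix, and statement IDs are hypothetical, and a real policy would be reviewed against the organization's own requirements.

```python
import json


def build_read_only_policy(bucket: str, prefix: str) -> dict:
    """Return an IAM policy allowing reads under one S3 prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListPrefix",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Restrict listing to keys under the given prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                "Sid": "ReadObjects",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_read_only_policy("example-bucket", "curated/"), indent=2))
```

Listing and reading are separate statements because `s3:ListBucket` applies to the bucket resource while `s3:GetObject` applies to object resources.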

The AWS Control Tower and AWS Config services help establish and maintain governance at scale, which is particularly important for organizations with extensive data estates.

AWS offers several mechanisms to optimize costs, a critical consideration for data engineering teams managing large-scale processing:

- Spot Instances can reduce compute costs by up to 90% compared with On-Demand prices for interruptible workloads

- Reserved Instances provide significant discounts for predictable workloads

- Auto Scaling ensures resources match current demands

- AWS Cost Explorer and AWS Budgets provide visibility and control over spending

- S3 Storage Classes and lifecycle policies automatically move data to more cost-effective storage tiers

At the time of writing, AWS’s global infrastructure spans 27 geographic Regions and 87 Availability Zones, and both figures continue to grow. This footprint gives data engineers the ability to:

- Deploy architectures that meet data sovereignty requirements

- Create disaster recovery solutions across geographic boundaries

- Minimize latency by positioning data close to users

- Build highly available systems that can withstand regional outages

The comprehensive nature of AWS creates several distinct advantages for data engineering teams:

- Reduced integration complexity: Services are designed to work together seamlessly

- Scalability across orders of magnitude: Handle gigabytes to petabytes without fundamental architecture changes

- Innovation velocity: New features and services are constantly being added

- Extensive documentation and community: Vast resources for learning and problem-solving

- Pay-as-you-go pricing: Start small and grow without large upfront investments

Despite its strengths, working with AWS does present some challenges:

- Service sprawl: The sheer number of services can be overwhelming for newcomers

- Cost management: Without proper governance, costs can escalate quickly

- Learning curve: Mastering the AWS ecosystem requires significant investment in training

- Lock-in concerns: Deep integration with AWS-specific services can increase switching costs

The AWS platform continues to evolve, with several emerging trends likely to impact data engineering:

- Increased automation: More sophisticated auto-scaling and self-optimizing services

- Enhanced developer experience: Higher-level abstractions to increase productivity

- Deeper AI/ML integration: More seamless incorporation of AI capabilities into data flows

- Edge computing expansion: Moving processing closer to data sources to reduce latency

- Sustainability focus: Tools to help optimize resource usage and reduce carbon footprint

AWS’s combination of breadth, depth, and maturity continues to make it the preferred choice for organizations building serious data engineering capabilities. While competitors have made significant strides, AWS’s first-mover advantage, continuous innovation, and comprehensive ecosystem create compelling value for data teams.

For data engineers looking to build scalable, reliable, and cost-effective data pipelines, AWS provides not just tools but a complete platform that can grow with your organization’s needs. The ability to start small with serverless components and seamlessly scale to enterprise-grade infrastructure makes AWS uniquely positioned to support data initiatives at any stage of maturity.

As data continues to grow in volume, variety, and velocity, AWS’s market-leading position and commitment to innovation ensure that it will remain an essential platform for data engineering excellence in the years to come.
