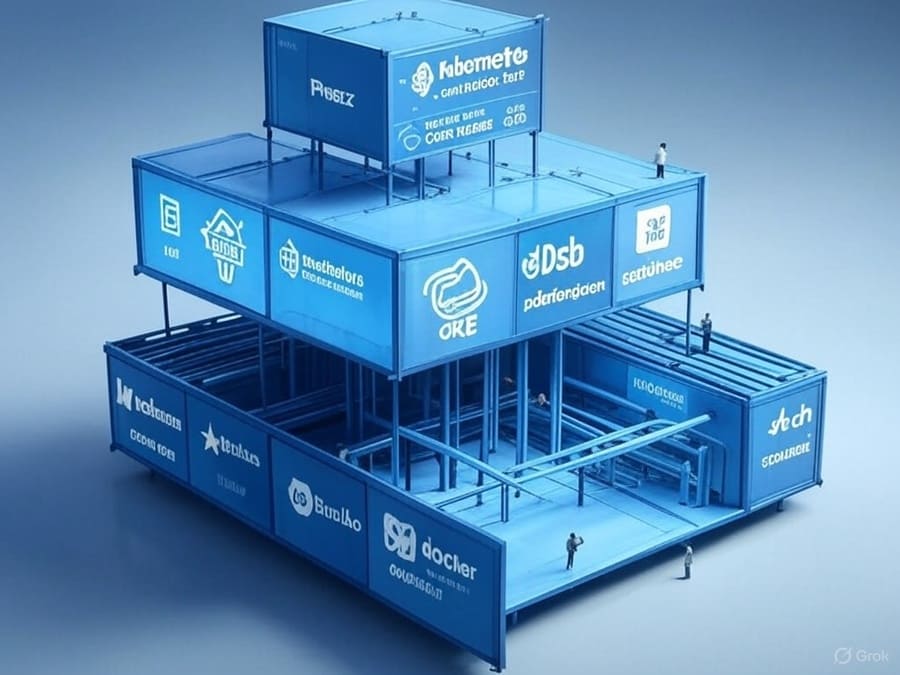

Containerization & Orchestration

Containerization and orchestration have changed how data engineering teams build, deploy, and scale data pipelines and applications. Both are now core parts of the modern data stack: they keep environments consistent, use resources efficiently, and simplify the management of complex distributed systems.

Containerization provides a standardized way to package applications and their dependencies into lightweight, portable units that can run consistently across different computing environments. For data engineers, this solves the age-old problem of “it works on my machine” by ensuring that data pipelines behave identically in development, testing, and production environments.

- Environment isolation: Each container has its own file system, processes, and network interfaces

- Dependency management: All required libraries and tools are packaged within the container

- Reproducibility: Containerized pipelines produce consistent results across environments

- Resource efficiency: Containers share the host OS kernel, making them lighter than VMs

- Rapid deployment: Containers can be started and stopped in seconds

As data volumes grow and architectures become more distributed, managing individual containers becomes impractical. Container orchestration platforms automate the deployment, scaling, networking, and management of containerized applications, allowing data engineering teams to focus on building pipelines rather than managing infrastructure.

- Automated scaling: Dynamically adjust resources based on workload demands

- Self-healing: Automatically restart failed containers

- Load balancing: Distribute processing across available resources

- Configuration management: Centralized management of environment variables and secrets

- Resource optimization: Efficient placement of containers based on available capacity
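
To make the automated scaling item concrete, here is a minimal sketch of one common scaling rule, the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the default bounds, and the 10% tolerance are assumptions chosen for illustration, not settings taken from this article.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # assumed lower bound
    max_replicas: int = 10,   # assumed upper bound
    tolerance: float = 0.1,   # assumed dead band around the target
) -> int:
    """Return a replica count using desired = ceil(current * metric / target)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    ratio = current_metric / target_metric

    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        wanted = current_replicas
    else:
        wanted = math.ceil(current_replicas * ratio)

    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 workers at 90% average CPU with a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

The tolerance band is a deliberate design choice: without it, small metric fluctuations around the target would cause constant scale-up and scale-down cycles.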

Docker revolutionized application development by making containers accessible to developers across all domains, including data engineering. As the most widely adopted containerization platform, Docker provides:

- Dockerfile: A simple text file defining how to build container images

- Docker Hub: A repository for sharing and discovering container images

- Docker Compose: A tool for defining multi-container applications

- Rich ecosystem: Extensive documentation, tools, and community support

Docker has become the de facto standard for containerizing data applications, from simple ETL scripts to complex distributed systems.
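
As a rough illustration of what a Dockerfile for a small Python ETL script might contain, the sketch below renders one as text. The base image tag, the file names `requirements.txt` and `etl.py`, and the helper `render_dockerfile` are all hypothetical. Dependencies are copied and installed before the application code so that the dependency layer can be reused from cache when only the code changes.

```python
def render_dockerfile(
    base_image: str,
    requirements: str = "requirements.txt",  # hypothetical dependency file
    entrypoint: str = "etl.py",              # hypothetical ETL script
) -> str:
    """Render a minimal Dockerfile for a Python script as a string."""
    lines = [
        f"FROM {base_image}",
        "WORKDIR /app",
        # Copy only the dependency list first so this layer stays cached
        # until the requirements file itself changes.
        f"COPY {requirements} .",
        f"RUN pip install --no-cache-dir -r {requirements}",
        # Application code changes most often, so it is copied last.
        "COPY . .",
        f'CMD ["python", "{entrypoint}"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("python:3.12-slim"))
```

Saving the output as `Dockerfile` and running `docker build` in the same directory would build the image, assuming the referenced files exist there.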

Podman offers a more secure approach to containerization by eliminating the need for a daemon running with root privileges. Key advantages include:

- Rootless containers: Run containers without elevated privileges

- Docker compatibility: The CLI largely mirrors Docker's commands and builds from the same Dockerfiles

- Pod support: Native Kubernetes-like pod concept for multi-container applications

- Integration with systemd: Better support for running containers as system services

Podman has gained popularity in enterprise environments where security and compliance are paramount concerns.

containerd serves as the underlying container runtime for many container platforms, including Docker and most Kubernetes distributions. Its focus is on:

- Simplicity: Core container execution functionality

- Stability: Production-grade reliability

- Performance: Optimized for minimal overhead

- OCI compatibility: Support for industry-standard container formats

While data engineers rarely interact with containerd directly, it powers many of the containerization tools they use daily.

Kaniko addresses the challenge of building container images within Kubernetes clusters without requiring privileged access:

- No daemon: Doesn’t require the Docker daemon

- Build context flexibility: Supports various sources including Git repositories

- Cache support: Optimization for faster builds

- Security focus: Designed for restricted environments

Kaniko is particularly valuable in CI/CD pipelines where building container images securely is a requirement.
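
A Kaniko build typically runs as an ordinary pod that executes the Kaniko executor image. The sketch below builds such a pod manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The pod name, Git context, and destination registry are hypothetical, registry credentials are omitted, and the image reference and flags should be checked against the Kaniko version you actually use.

```python
import json


def kaniko_build_pod(
    name: str,
    context: str,
    destination: str,
    dockerfile: str = "Dockerfile",
) -> dict:
    """Return a Pod manifest that runs a single Kaniko image build."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # A build is a one-off task, so the pod should not restart.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "kaniko",
                    "image": "gcr.io/kaniko-project/executor:latest",
                    "args": [
                        f"--dockerfile={dockerfile}",
                        f"--context={context}",
                        f"--destination={destination}",
                        "--cache=true",
                    ],
                }
            ],
        },
    }


if __name__ == "__main__":
    pod = kaniko_build_pod(
        name="build-etl-image",                                # hypothetical
        context="git://github.com/example-org/etl-jobs.git",   # hypothetical
        destination="registry.example.com/data/etl:1.0.0",     # hypothetical
    )
    print(json.dumps(pod, indent=2))
```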

Buildah provides a more flexible approach to building OCI-compliant container images:

- Daemon-free operation: Doesn’t require a container runtime daemon

- Granular control: Fine-grained commands for container creation

- Integration with Podman: Works seamlessly in Podman environments

- Scripting support: Easy integration with shell scripts

Buildah is often chosen by teams seeking more control over the image building process than a Dockerfile alone provides.

Kubernetes (often abbreviated as K8s) has emerged as the dominant container orchestration platform, providing a robust framework for deploying and managing containerized applications at scale:

- Declarative configuration: Define desired state using YAML manifests

- Automatic scaling: Horizontal pod autoscaling based on resource utilization or custom metrics

- Self-healing: Automatic replacement of failed containers

- Service discovery: Built-in DNS for inter-service communication

- Storage orchestration: Dynamic provisioning of persistent storage

- Rolling updates: Gradual, zero-downtime rollouts with the option to roll back to a previous revision

For data engineering teams, Kubernetes provides the foundation for building resilient, scalable data platforms that can adapt to changing workloads.
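
Declarative configuration and self-healing both rest on the same idea: a controller repeatedly compares the desired state with what it observes and acts on the difference. The toy function below sketches one pass of such a reconciliation loop. It is a simplification for illustration, not Kubernetes code, and the pod names and state strings are hypothetical.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, observed: Dict[str, str]) -> List[str]:
    """Return the actions needed to move observed pods toward the desired count.

    `observed` maps a pod name to its state, "Running" or anything else.
    """
    actions: List[str] = []
    healthy = [name for name, state in observed.items() if state == "Running"]

    # Self-healing: anything that is not running gets removed.
    for name, state in observed.items():
        if state != "Running":
            actions.append(f"delete {name}")

    missing = desired_replicas - len(healthy)
    if missing > 0:
        # Too few healthy pods: create replacements.
        actions.extend(["create pod"] * missing)
    elif missing < 0:
        # Too many healthy pods: remove the surplus in name order.
        for name in sorted(healthy)[:-missing]:
            actions.append(f"delete {name}")
    return actions


if __name__ == "__main__":
    state = {"etl-a": "Running", "etl-b": "Failed", "etl-c": "Running"}
    print(reconcile(3, state))
```

Because the function only looks at the current difference, running it again after the actions are applied returns an empty list, which is what makes this style of control loop safe to repeat indefinitely.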

Amazon Elastic Kubernetes Service (EKS) offers a managed Kubernetes service integrated with the AWS ecosystem:

- Control plane management: AWS handles the Kubernetes control plane

- AWS integration: Seamless connection with IAM, VPC, and other AWS services

- Fargate support: Option for serverless Kubernetes pod execution

- Cluster add-ons: Simplified management of common components

EKS is ideal for data engineering teams already leveraging AWS services like S3, Redshift, or Glue, providing a consistent infrastructure approach.

Google Kubernetes Engine (GKE) offers a highly optimized Kubernetes experience on Google Cloud:

- Autopilot mode: Fully managed, hands-off Kubernetes experience

- Release channels: Control the pace of Kubernetes version updates

- Advanced networking: VPC-native clusters, network policy enforcement, and integration with Google Cloud load balancing

- Integration with GCP services: Seamless connection with BigQuery, Dataflow, and other data services

GKE provides a natural home for data pipelines that interact heavily with Google’s data analytics services.

Azure Kubernetes Service (AKS) integrates Kubernetes with Microsoft’s cloud platform:

- Integration with Azure services: Seamless connection with Azure Data Factory, Synapse, and other data services

- Virtual node support: Serverless container execution using Azure Container Instances

- Azure Monitor integration: Comprehensive monitoring and logging

- Microsoft Entra ID integration: Enterprise-grade identity management (Entra ID was formerly Azure Active Directory)

AKS is particularly valuable for data engineering teams in Microsoft-centric organizations who want to maintain technology consistency.

Red Hat OpenShift extends Kubernetes with developer-focused features and enterprise-grade security:

- Developer experience: Integrated CI/CD, source-to-image builds, and developer console

- Operator framework: Simplified management of complex applications

- Multi-tenancy: Advanced isolation between teams and workloads

- Compliance certifications: Support for regulated environments

OpenShift is often chosen by enterprises requiring stronger governance and security controls for their data platforms.

Rancher simplifies the management of Kubernetes clusters across different environments:

- Multi-cluster management: Centralized control of Kubernetes across cloud providers and on-premises

- Application catalog: Simplified deployment of common applications

- User management: Centralized authentication and authorization

- Monitoring and logging: Integrated observability stack

Rancher helps data engineering teams standardize their approach across different environments and cloud providers.

Docker Swarm provides native clustering for Docker, offering a simpler alternative to Kubernetes:

- Docker CLI integration: Uses familiar Docker commands

- Simplified setup: Easier to deploy and manage than Kubernetes

- Service concepts: Declarative service definitions

- Built-in load balancing: Automatic distribution of requests

While less feature-rich than Kubernetes, Docker Swarm offers a gentler learning curve for teams new to container orchestration.

Modern data engineering workflows leverage containerization at multiple levels:

Containers enable consistent, scalable data processing:

- Packaged ETL tools: Run tools like Apache NiFi, Airflow, or custom ETL scripts in containers

- Scheduled batch jobs: Use Kubernetes CronJobs for regular processing

- Stream processing: Deploy Kafka Streams or Flink applications in containers

- Event-driven processing: Trigger containerized workloads based on data events
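
For the scheduled batch jobs item above, a Kubernetes CronJob wraps a containerized job in a cron schedule. The sketch below builds a `batch/v1` CronJob manifest as a dictionary and prints it as JSON. The job name, image, schedule, and command are hypothetical, and the `Forbid` concurrency policy and retry limit are illustrative choices rather than recommendations from this article.

```python
import json
from typing import List


def etl_cronjob(name: str, image: str, schedule: str, command: List[str]) -> dict:
    """Return a CronJob manifest that runs one container on a cron schedule."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,
            # Skip a run if the previous one is still in progress.
            "concurrencyPolicy": "Forbid",
            "jobTemplate": {
                "spec": {
                    # Retry a failed run at most twice.
                    "backoffLimit": 2,
                    "template": {
                        "spec": {
                            "restartPolicy": "OnFailure",
                            "containers": [
                                {"name": name, "image": image, "command": command}
                            ],
                        }
                    },
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = etl_cronjob(
        name="nightly-etl",                               # hypothetical
        image="registry.example.com/data/etl:1.0.0",      # hypothetical
        schedule="0 2 * * *",                             # every day at 02:00
        command=["python", "etl.py"],                     # hypothetical
    )
    print(json.dumps(manifest, indent=2))
```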

Containerization simplifies deployment of data processing frameworks:

- Spark on Kubernetes: Run Spark jobs directly on Kubernetes

- Distributed processing: Scale data transformations horizontally

- GPU acceleration: Containerize ML preprocessing with GPU support

- Language flexibility: Mix Python, Java, and other languages in the same pipeline
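
Running Spark directly on Kubernetes usually means pointing `spark-submit` at the cluster's API server and naming a container image for the driver and executors. The helper below sketches how such a command line might be assembled. The API server URL, image, and application path are hypothetical, and a real submission generally needs more configuration, such as a service account and namespace.

```python
import shlex
from typing import List, Optional


def spark_submit_args(
    api_server: str,
    image: str,
    app_resource: str,
    executors: int = 2,
    name: str = "example-job",
    main_class: Optional[str] = None,
) -> List[str]:
    """Build a spark-submit argument list for Kubernetes cluster mode."""
    args = [
        "spark-submit",
        "--master", f"k8s://{api_server}",
        "--deploy-mode", "cluster",
        "--name", name,
        "--conf", f"spark.kubernetes.container.image={image}",
        "--conf", f"spark.executor.instances={executors}",
    ]
    if main_class:
        # Only JVM applications need an entry-point class.
        args += ["--class", main_class]
    # The application file goes last; local:// means it is already in the image.
    args.append(app_resource)
    return args


if __name__ == "__main__":
    cmd = spark_submit_args(
        api_server="https://k8s.example.com:6443",               # hypothetical
        image="registry.example.com/data/spark-etl:1.0.0",       # hypothetical
        app_resource="local:///opt/spark/work-dir/transform.py", # hypothetical
        executors=4,
    )
    print(shlex.join(cmd))
```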

Containers provide consistent deployment for data access layers:

- API services: Deploy REST or GraphQL interfaces to data

- Caching layers: Containerize Redis or Memcached for performance

- Query engines: Run Presto, Trino, or custom query services

- Real-time serving: Deploy model serving infrastructure for online predictions

Several practices can enhance container usage in data engineering:

- Lightweight base images: Use minimal images appropriate for your workload

- Layer optimization: Order Dockerfile commands to maximize caching

- Multi-stage builds: Separate build-time dependencies from runtime requirements

- Version pinning: Specify exact versions of dependencies

- Right-sizing containers: Allocate appropriate CPU and memory resources

- Resource limits: Set both requests and limits for predictable behavior

- Quality of service: Understand Kubernetes QoS classes for critical workloads

- Autoscaling configuration: Set appropriate metrics for horizontal scaling

- Persistent volumes: Use appropriate storage classes for different workloads

- Data locality: Consider data proximity for I/O-intensive operations

- Backup strategies: Implement consistent backup procedures for stateful containers

- State management: Carefully handle application state in distributed environments

- Log aggregation: Centralize logs from containerized applications

- Metrics collection: Gather performance data for capacity planning

- Tracing implementation: Add distributed tracing for complex pipelines

- Alerting configuration: Set up proactive notifications for potential issues
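
The resource limits and quality of service items in the list above are closely linked: Kubernetes derives a pod's QoS class from the CPU and memory requests and limits of its containers. The function below is a simplified sketch of those rules. It compares quantities as plain strings, so it assumes requests and limits are written in the same notation (for example `500m` in both places), and the input layout is a hypothetical simplification of a container's `resources` field.

```python
from typing import Dict, List

# Each container is described as {"requests": {...}, "limits": {...}}.
Resources = Dict[str, Dict[str, str]]


def qos_class(containers: List[Resources]) -> str:
    """Classify a pod as Guaranteed, Burstable, or BestEffort."""
    any_set = False
    guaranteed = True

    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        for resource in ("cpu", "memory"):
            req = requests.get(resource)
            lim = limits.get(resource)
            if req is not None or lim is not None:
                any_set = True
            # Guaranteed needs a limit on every resource, with the request
            # equal to it. A missing request defaults to the limit.
            if lim is None or (req is not None and req != lim):
                guaranteed = False

    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    critical = [{
        "requests": {"cpu": "500m", "memory": "1Gi"},
        "limits": {"cpu": "500m", "memory": "1Gi"},
    }]
    print(qos_class(critical))
```

Under node memory pressure, BestEffort pods are the first candidates for eviction and Guaranteed pods the last, which is why critical pipeline components are often given equal requests and limits.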

Containerization and orchestration have fundamentally changed how data engineering teams build and operate data platforms. By providing consistency, scalability, and operational efficiency, these technologies enable data engineers to focus on extracting value from data rather than managing infrastructure.

As data volumes continue to grow and processing requirements become more complex, containerization and orchestration will become even more essential components of the modern data engineering toolkit. Teams that master these technologies position themselves to build adaptable, resilient data platforms that can evolve with changing business needs.

Whether you’re just beginning to explore containerization or looking to optimize an existing Kubernetes deployment, investing in these technologies provides a solid foundation for your data engineering practice. The ecosystem continues to evolve rapidly, offering increasingly sophisticated tools for managing containerized data workloads across hybrid and multi-cloud environments.


Containerization

- Docker: Platform for developing, shipping, and running applications in containers

- Podman: Daemonless container engine

- containerd: Industry-standard container runtime

- Kaniko: Tool for building container images from a Dockerfile

- Buildah: Tool for building OCI container images

Orchestration

- Kubernetes: Container orchestration platform

- Amazon EKS: Managed Kubernetes service

- Google Kubernetes Engine (GKE): Managed Kubernetes service

- Azure Kubernetes Service (AKS): Managed Kubernetes service

- OpenShift: Kubernetes platform with developer and operations-focused tools

- Rancher: Complete software stack for teams adopting containers

- Docker Swarm: Native clustering for Docker