Kubernetes: Navigating the Container Orchestration Revolution

In the ever-evolving landscape of cloud computing and microservices architecture, Kubernetes has emerged as the de facto standard for container orchestration. This open-source platform automates the deployment, scaling, and management of containerized applications, changing how organizations build and operate modern software systems. But what makes Kubernetes so essential in today’s technological ecosystem, and how can you harness its full potential? Let’s dive in.

Kubernetes (often abbreviated as K8s) was designed by Google engineers drawing on lessons from Borg, the company’s internal cluster manager. Building on more than a decade of experience running containerized workloads at massive scale, Google open-sourced Kubernetes in 2014. With the 1.0 release in 2015, Google donated it to the newly formed Cloud Native Computing Foundation (CNCF). This move drew broad industry collaboration, with contributors from companies like Red Hat, Microsoft, IBM, and Amazon rapidly enhancing the platform.

Today, Kubernetes serves as the backbone of modern infrastructure across industries, from startups to Fortune 500 enterprises, unifying container management across on-premises data centers, public clouds, and hybrid environments.

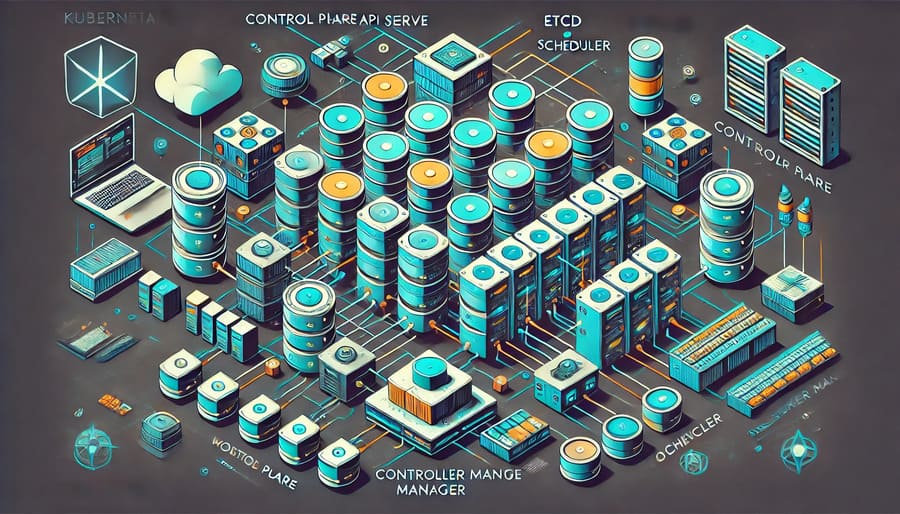

Kubernetes operates on a distributed architecture with a clear separation of concerns. The control plane makes global decisions about the cluster:

- API Server: The front door to the cluster; it validates and processes RESTful requests and persists the resulting cluster state

- etcd: A consistent, distributed key-value store that holds all cluster state and configuration data

- Scheduler: Assigns newly created Pods to nodes based on resource requirements and constraints

- Controller Manager: Runs control loops that continuously drive the cluster’s actual state toward the desired state

- Cloud Controller Manager: Integrates the cluster with the underlying cloud provider (for example, load balancers and node lifecycle)

Every worker node runs:

- Kubelet: The node agent that ensures the containers described in each Pod spec are running and healthy

- Container Runtime: The software responsible for running containers (e.g., containerd, CRI-O)

- Kube-proxy: Maintains network rules on each node that allow communication to Pods from inside and outside the cluster

Applications are described with API objects such as:

- Pods: The smallest deployable units, containing one or more containers that share network and storage

- Deployments: Declarative updates for Pods and ReplicaSets

- Services: A stable virtual IP and DNS name that load-balances traffic across a set of Pods

- ConfigMaps and Secrets: Configuration data and sensitive values supplied to applications

- Namespaces: Virtual clusters within a physical cluster that scope names, access policies, and resource quotas
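Deployments, ReplicaSets, and Services do not reference Pods by name. They find Pods through label selectors, such as the `app: nginx` selector in the Deployment example later in this article. A `matchLabels` selector matches a Pod when every listed key/value pair appears in the Pod's labels, and extra labels on the Pod do not matter. The Python sketch below models just that rule. The Pod names and labels are made up, and `matchExpressions` is not covered:

```python
def selector_matches(match_labels: dict[str, str], pod_labels: dict[str, str]) -> bool:
    """True when every key/value pair in the selector is present on the Pod."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())

# Hypothetical Pods and their labels.
pods = {
    "nginx-a": {"app": "nginx", "tier": "frontend"},
    "nginx-b": {"app": "nginx", "tier": "frontend"},
    "redis-0": {"app": "redis"},
}

# The Pods a selector of app=nginx would pick up.
selected = [name for name, labels in pods.items() if selector_matches({"app": "nginx"}, labels)]
print(selected)
```

This loose coupling is what lets a Service keep routing to a Deployment's Pods as they are replaced: new Pods carry the same labels, so they match the same selector.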

Kubernetes delivers significant advantages that transform how teams build and operate applications:

- Self-healing capabilities: Automatically restarts containers that fail, replaces containers, kills containers that don’t respond to health checks, and avoids advertising them to clients until they’re ready

- Automated rollouts and rollbacks: Roll out changes progressively while monitoring application health, so that not all instances are replaced at once, and roll back to a previous revision if something goes wrong

- Horizontal scaling: Scale applications up or down with a simple command, UI, or automatically based on metrics

- Service discovery and load balancing: Pods receive their own IP addresses, and a Service gives a set of Pods a single DNS name and load-balances across them, so applications need no Kubernetes-specific service discovery logic

- Storage orchestration: Automatic mounting of storage systems, whether local or cloud-based

- Secret and configuration management: Deploy and update secrets and application configuration without rebuilding container images

- Batch execution: Efficiently manages batch and CI workloads, replacing failed containers when needed

- Extensible architecture: Kubernetes’ API-driven design allows for easy integration with monitoring, logging, and alerting systems

- RBAC security model: Role-based access control provides fine-grained permission management
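A Deployment's progressive rollout is governed by two RollingUpdate settings, `maxSurge` and `maxUnavailable`, both of which default to 25%. When these are percentages, Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The minimal Python sketch below shows how the two settings bound the Pod count during an update. The function name is hypothetical, and only percentage values are handled:

```python
def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max_total_pods, min_available_pods) during a rolling update.

    Illustrative model: a percentage maxSurge is rounded up and a
    percentage maxUnavailable is rounded down, as the Deployment docs describe.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas + surge, replicas - unavailable

for replicas in (3, 4, 10):
    total, available = rollout_bounds(replicas)
    print(f"{replicas} replicas: at most {total} Pods, at least {available} available")
```

For a three-replica Deployment, the defaults allow one extra Pod during the update and never let availability drop below three.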

The quickest way to start experimenting is with a local cluster and a simple Deployment:

```bash
# Start a local Kubernetes cluster with Minikube
minikube start

# Or use Kind (Kubernetes in Docker)
kind create cluster
```

```yaml
# Simple deployment example (save as deployment.yaml)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.21
          ports:
            - containerPort: 80
```

```bash
# Deploy an application
kubectl apply -f deployment.yaml

# List common resources (Pods, Services, Deployments, ...) in the current namespace
kubectl get all

# Scale a deployment
kubectl scale deployment nginx-deployment --replicas=5

# View logs from one of the deployment's Pods
kubectl logs deployment/nginx-deployment

# Open a shell in one of the deployment's Pods
kubectl exec -it deployment/nginx-deployment -- /bin/bash
```

Taking Kubernetes to production requires consideration of several advanced topics:

A production-grade Kubernetes cluster should run multiple control plane nodes distributed across failure domains. These should be backed by an etcd cluster with an odd number of members (typically three or five) so that it keeps quorum when a member fails. Regular etcd backups are essential for disaster recovery planning.
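etcd replicates data with the Raft consensus protocol, so writes succeed only while a majority (quorum) of members is reachable. The short Python sketch below computes quorum size and failure tolerance for different cluster sizes. The function names are illustrative, not part of any etcd tooling:

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    return members // 2 + 1

def failure_tolerance(members: int) -> int:
    """How many members can fail while the cluster still has quorum."""
    return members - quorum(members)

for n in range(1, 8):
    print(f"{n} members: quorum {quorum(n)}, tolerates {failure_tolerance(n)} failure(s)")
```

Going from three to four members raises the quorum from two to three without tolerating any extra failure. This is why odd cluster sizes are the usual recommendation.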

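Production environments must set resource requests and limits for every container. A request is the amount the scheduler reserves for the container, and a limit is the maximum it may use, enforced at runtime: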

```yaml
spec:
  containers:
    - name: app
      resources:
        requests:
          memory: "128Mi"
          cpu: "100m"
        limits:
          memory: "256Mi"
          cpu: "200m"
```
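Resource values use Kubernetes quantity notation. The `m` suffix means thousandths, so `100m` CPU is 0.1 of a core. Binary suffixes such as `Mi` are powers of 1024, while decimal suffixes such as `M` are powers of 1000. The Python sketch below converts the values from the example into exact numbers. It handles only the common suffixes listed in the code and is an illustration, not the parser Kubernetes itself uses:

```python
from fractions import Fraction

# Multipliers for the quantity suffixes this sketch supports (a subset of
# what Kubernetes accepts; forms such as exponents or "E"/"Ei" are omitted).
SUFFIXES = {
    "m": Fraction(1, 1000),
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,
}

def parse_quantity(value: str) -> Fraction:
    """Convert a quantity string such as '100m' or '128Mi' to an exact number."""
    # Check two-character suffixes first so "Mi" is not mistaken for "M".
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if value.endswith(suffix):
            return Fraction(value[: -len(suffix)]) * SUFFIXES[suffix]
    return Fraction(value)

print(float(parse_quantity("100m")))  # CPU cores requested
print(int(parse_quantity("128Mi")))   # bytes of memory requested
```

Exact fractions avoid floating-point drift when summing many small CPU requests, for example when checking whether Pods fit on a node.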

Horizontal Pod Autoscaling (HPA) adjusts the number of Pod replicas based on observed metrics, while a cluster autoscaler adds or removes nodes. Together they help optimize resource utilization and cost:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: app-deployment
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```
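The HPA controller's core rule is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. For CPU, utilization is measured as a percentage of the containers' CPU requests, so it can exceed 100% when limits are higher than requests.

The Python sketch below applies that rule with the values from the manifest above: a 70% target and 3 to 10 replicas. It is a simplified model. It includes the default 10% tolerance band but ignores stabilization windows, scaling policies, and missing or not-yet-ready Pods, all of which the real controller accounts for:

```python
import math

def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float = 70, min_replicas: int = 3,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified model of the HPA scaling rule for a single resource metric."""
    ratio = current_utilization / target_utilization
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas bounds from the HPA spec.
    return max(min_replicas, min(max_replicas, proposed))

print(desired_replicas(3, 140))  # load at twice the target
print(desired_replicas(3, 75))   # close enough to the target
```

Three replicas averaging 140% CPU (twice the target) scale to six. At 75%, the ratio falls within the tolerance band, so nothing changes.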

Securing Kubernetes involves multiple layers:

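- Network Policies: Restrict Pod-to-Pod and external traffic to what each workload actually needs (micro-segmentation)

- Pod Security Standards: Enforce the Privileged, Baseline, or Restricted profile per namespace (for example, with the built-in Pod Security Admission controller) to restrict Pod privileges

- Image Security: Scan container images for vulnerabilities before deployment

- Secret Management: Enable encryption at rest for Secrets stored in etcd, or use an external secrets manager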

- Audit Logging: Enable comprehensive audit logging for security analysis
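By default, Kubernetes Secret values are only base64-encoded in manifests and API responses. Unless encryption at rest is configured, they are stored unencrypted in etcd. Base64 is an encoding, not encryption, as this short Python illustration shows. The value is a made-up example:

```python
import base64

# The `data` field of a Secret manifest holds base64-encoded values.
encoded = base64.b64encode(b"admin").decode("ascii")
print(encoded)

# Anyone who can read the Secret can reverse the encoding; no key is needed.
decoded = base64.b64decode(encoded).decode("utf-8")
print(decoded)
```

This is why RBAC rules that limit who can read Secrets matter as much as encrypting etcd.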

Sophisticated deployment techniques minimize risk and downtime:

- Blue/Green Deployments: Maintain two identical environments, switching traffic between them

- Canary Deployments: Gradually release to a subset of users before full release

- Feature Flags: Dynamically enable or disable features without redeployment
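Canary releases are usually implemented by an ingress controller or service mesh that sends a configurable percentage of traffic to the new version. The Python sketch below shows one common way to make that split sticky: hash each user ID into one of 100 buckets, so each user consistently sees the same version. The function name and version labels are hypothetical and are not part of any Kubernetes API:

```python
import hashlib

def route(user_id: str, canary_percent: int) -> str:
    """Return 'canary' or 'stable' for a user, deterministically."""
    # SHA-256 is stable across processes, unlike Python's built-in hash().
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "canary" if bucket < canary_percent else "stable"

users = [f"user-{i}" for i in range(10_000)]
canary_share = sum(route(u, 10) == "canary" for u in users) / len(users)
print(f"{canary_share:.1%} of users routed to the canary")
```

Raising `canary_percent` from 10 to 20 keeps every existing canary user on the canary, because any bucket below 10 is also below 20. This lets the rollout widen without users flipping between versions.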

One of Kubernetes’ greatest strengths is its extensible ecosystem:

Helm simplifies application deployment and management with reusable charts:

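```bash
# Install a database using Helm
# (assumes the bitnami chart repository has already been added with `helm repo add`)
helm install my-database bitnami/postgresql

# Upgrade an application, overriding chart defaults with production values
helm upgrade my-app ./app-chart --values production-values.yaml
```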
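When you pass `--values production-values.yaml`, Helm merges that file over the chart's default `values.yaml`. Nested maps are merged key by key, while scalar values and lists from the override replace the defaults outright.

The Python sketch below models that precedence with plain dictionaries. The keys are hypothetical. Helm's real merge also lets you delete a default by setting it to `null`, which this sketch omits:

```python
import copy

def merge_values(defaults: dict, overrides: dict) -> dict:
    """Recursively overlay `overrides` on `defaults` without mutating either."""
    result = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            # Scalars and lists replace the default value entirely.
            result[key] = copy.deepcopy(value)
    return result

# Hypothetical chart defaults and production overrides.
chart_defaults = {
    "replicaCount": 1,
    "image": {"repository": "my-app", "tag": "1.0"},
    "resources": {"limits": {"cpu": "200m"}},
}
production_values = {"replicaCount": 3, "image": {"tag": "1.1"}}

merged = merge_values(chart_defaults, production_values)
print(merged)
```

Only the keys you override change. The image repository and resource limits keep their chart defaults, which keeps per-environment values files short.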

Service meshes like Istio, Linkerd, and Cilium provide:

- Advanced traffic management (circuit breaking, fault injection)

- Enhanced security with mTLS encryption

- Detailed observability metrics
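Service meshes implement circuit breaking in their proxies and configure it declaratively, so application code does not change. To make the idea concrete, here is a generic consecutive-failure circuit breaker in Python. It is a simplified sketch of the pattern with hypothetical class and parameter names, not how any particular mesh implements it:

```python
import time
from typing import Callable, Optional

class CircuitBreaker:
    """Opens after `threshold` consecutive failures; half-opens after `reset_after` seconds."""

    def __init__(self, threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock  # injectable so the behaviour can be tested
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after:
            return "half-open"  # trial requests are let through again
        return "open"

    def allow_request(self) -> bool:
        # While open, fail fast instead of sending traffic to a failing backend.
        return self.state != "open"

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        # A failed trial while half-open re-opens the circuit immediately.
        if self.state == "half-open" or self.failures >= self.threshold:
            self.opened_at = self.clock()

now = [0.0]
breaker = CircuitBreaker(threshold=3, reset_after=30.0, clock=lambda: now[0])
for _ in range(3):
    breaker.record_failure()
print(breaker.state)
now[0] = 31.0
print(breaker.state)
```

Failing fast while the circuit is open protects both sides: callers stop waiting on timeouts, and the struggling backend gets time to recover.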

GitOps tools like Flux and ArgoCD synchronize Kubernetes state with Git repositories, enabling:

- Version-controlled infrastructure changes

- Automated deployment reconciliation

- Improved audit capabilities
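GitOps tools and Kubernetes' own controllers follow the same reconciliation pattern: compare the desired state with the live state, then compute the actions that close the gap. The Python sketch below models one pass of that comparison, using dictionaries keyed by resource name.

The function name and data shapes are hypothetical. Real tools such as Flux and Argo CD compare full manifests, repeat the comparison continuously, and delete (prune) resources only when pruning is enabled:

```python
def plan(desired: dict[str, dict], live: dict[str, dict],
         prune: bool = True) -> dict[str, list[str]]:
    """Compute the actions needed to make `live` match `desired` (from Git)."""
    actions: dict[str, list[str]] = {"create": [], "update": [], "delete": []}
    for name, spec in desired.items():
        if name not in live:
            actions["create"].append(name)
        elif live[name] != spec:
            actions["update"].append(name)  # drift: live differs from Git
    if prune:
        # Resources that still run in the cluster but were removed from Git.
        actions["delete"] = sorted(name for name in live if name not in desired)
    actions["create"].sort()
    actions["update"].sort()
    return actions

desired = {"deployment/web": {"replicas": 3}, "service/web": {"port": 80}}
live = {"deployment/web": {"replicas": 2}, "configmap/old": {}}
print(plan(desired, live))
```

Running the comparison repeatedly, rather than once per commit, is what lets these tools also revert manual changes made directly in the cluster.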

A comprehensive observability strategy typically includes:

- Prometheus: Metrics collection and alerting

- Grafana: Visualization and dashboarding

- Jaeger/OpenTelemetry: Distributed tracing

- Loki/Elasticsearch: Log aggregation and analysis

Finite cluster capacity often challenges Kubernetes operations. Solutions include:

- Setting ResourceQuotas to cap what each namespace can consume

- Properly configuring resource requests and limits

- Using quality of service (QoS) classes to control which workloads are evicted first under node pressure
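You cannot set a Pod's QoS class directly; Kubernetes derives it from requests and limits:

- Guaranteed: every container sets CPU and memory limits, with requests equal to those limits.
- BestEffort: no container sets any CPU or memory request or limit.
- Burstable: everything else.

The Python sketch below encodes those rules over plain dictionaries. It is a simplified model with a hypothetical function name, and it ignores init containers:

```python
RESOURCES = ("cpu", "memory")

def qos_class(containers: list[dict]) -> str:
    """Derive a Pod's QoS class from its containers' requests and limits.

    Simplified model: init containers are ignored, and quantities are compared
    as literal strings, so "1Gi" and "1024Mi" count as different values.
    """
    any_set = False
    guaranteed = True
    for container in containers:
        limits = container.get("limits", {})
        # If only a limit is given, Kubernetes defaults the request to the limit.
        requests = {**limits, **container.get("requests", {})}
        if any(r in requests for r in RESOURCES):
            any_set = True
        for r in RESOURCES:
            if r not in limits or requests[r] != limits[r]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"

# The resource example earlier in this article: requests are below limits.
print(qos_class([{"requests": {"memory": "128Mi", "cpu": "100m"},
                  "limits": {"memory": "256Mi", "cpu": "200m"}}]))
```

The earlier resource example is therefore Burstable. Setting its requests equal to its limits would make it Guaranteed, and Guaranteed Pods are the last to be evicted when a node runs short of resources.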

Kubernetes networking can be complicated. Consider:

- Selecting appropriate CNI plugins based on requirements

- Using service meshes for complex networking needs

- Implementing NetworkPolicies for microsegmentation
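NetworkPolicies are additive allow-lists. A Pod that no policy selects accepts all ingress traffic. Once any policy selects it for ingress, only traffic matched by at least one of those policies is allowed.

The simplified Python model below captures that rule for Pods in a single namespace, using `matchLabels`-style selectors. The data shapes and function names are hypothetical. Here, an empty `from` list means the policy allows no ingress. Real policies can also match namespaces, IP blocks, and ports:

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """matchLabels semantics; an empty selector matches every Pod."""
    return all(labels.get(k) == v for k, v in selector.items())

def ingress_allowed(policies: list[dict], src: dict[str, str], dst: dict[str, str]) -> bool:
    """Decide whether a Pod labelled `src` may connect to a Pod labelled `dst`."""
    selecting = [p for p in policies if matches(p["podSelector"], dst)]
    if not selecting:
        return True  # no policy selects the destination, so it is not isolated
    # Policies are additive: one matching `from` selector in any policy suffices.
    return any(matches(peer, src) for p in selecting for peer in p["from"])

# A default-deny policy for the namespace plus one explicit allow rule.
deny_all = {"podSelector": {}, "from": []}
allow_frontend_to_api = {"podSelector": {"app": "api"}, "from": [{"app": "frontend"}]}
policies = [deny_all, allow_frontend_to_api]

print(ingress_allowed(policies, {"app": "frontend"}, {"app": "api"}))
print(ingress_allowed(policies, {"app": "batch"}, {"app": "api"}))
```

Pairing a namespace-wide default-deny policy with narrow allow rules is the usual micro-segmentation pattern. Every permitted flow is then written down explicitly.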

Managing stateful applications requires special consideration:

- Using StatefulSets for ordered, stable identities

- Implementing appropriate storage classes with PersistentVolumeClaims

- Considering backup and recovery strategies for databases
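StatefulSets give each Pod a stable identity derived from its ordinal index:

- Pods are named `<statefulset>-<ordinal>`.
- Each Pod gets a PersistentVolumeClaim named `<claim-template>-<pod>` that survives rescheduling.
- A headless Service gives each Pod a stable DNS name.

With the default OrderedReady policy, Pods are created in ascending ordinal order and terminated in reverse order. The Python sketch below derives those names. The StatefulSet, Service, and claim names are hypothetical, and `cluster.local` is assumed as the cluster domain:

```python
def statefulset_identities(name: str, replicas: int, service: str, claim: str,
                           namespace: str = "default",
                           domain: str = "cluster.local") -> list[dict[str, str]]:
    """Derive Pod, PVC, and DNS names for each ordinal of a StatefulSet."""
    identities = []
    for ordinal in range(replicas):  # created in this order, removed in reverse
        pod = f"{name}-{ordinal}"
        identities.append({
            "pod": pod,
            "pvc": f"{claim}-{pod}",
            "dns": f"{pod}.{service}.{namespace}.svc.{domain}",
        })
    return identities

for identity in statefulset_identities("postgres", 3, service="postgres-headless", claim="data"):
    print(identity)
```

Because `postgres-0` always reattaches to `data-postgres-0` and keeps the same DNS name, a database replica can be rescheduled to another node without losing its data or its address.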

The Kubernetes landscape continues to evolve:

- WebAssembly support: Exploring lighter-weight alternatives to containers

- Multi-cluster federation: Improving cross-cluster workload management

- Sustainability efforts: Optimizing energy usage and carbon footprint

- Simplified developer experience: Making Kubernetes more accessible

Kubernetes has fundamentally changed how we deploy, scale, and manage containerized applications. As organizations increasingly adopt cloud-native architectures, understanding and leveraging Kubernetes becomes not just an advantage but a necessity.

Whether you’re just beginning your containerization journey or looking to optimize an existing Kubernetes environment, focusing on operational excellence, security, and ecosystem integration will help you navigate the complexities of modern infrastructure and deliver resilient, scalable applications.

#Kubernetes #K8s #ContainerOrchestration #CloudNative #DevOps #Microservices #CNCF #Containers #Docker #InfrastructureAsCode #GitOps #ServiceMesh #Helm #ContinuousDeployment #CloudComputing #KubernetesCluster #PodSecurity #MicroservicesArchitecture #ContainerManagement #ScalableSystems #KubernetesOperator