Databand: Revolutionizing Data Pipeline Observability for Modern Data Teams

In today’s data-driven business landscape, organizations face a significant challenge: as data pipelines grow increasingly complex, the risk of quality issues, failures, and performance bottlenecks rises dramatically. These operational problems silently undermine the reliability of data that business leaders depend on for critical decisions. Data engineering teams often find themselves in a frustrating cycle of reactive firefighting – discovering problems only after reports fail, dashboards display incorrect information, or machine learning models produce unexpected results.

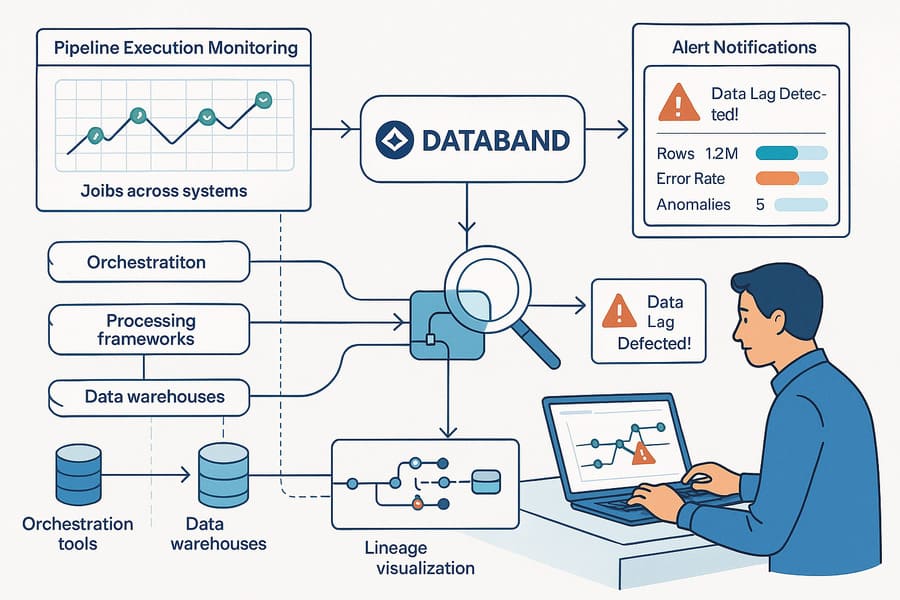

Databand emerges as a powerful solution to this challenge, offering specialized observability designed specifically for modern data pipelines. Acquired by IBM in 2022, Databand provides comprehensive visibility into data pipeline operations, helping teams detect, troubleshoot, and prevent data quality issues before they impact downstream consumers. This article explores how Databand is transforming the way organizations monitor and maintain the health of their data pipelines, enabling proactive data reliability management in complex data environments.

Before examining Databand’s capabilities, it’s essential to understand the fundamental challenges in monitoring modern data pipelines:

Today’s data ecosystems are intricate webs of technologies and processes:

- Distributed processing: Data flows across multiple systems and platforms

- Diverse technologies: Pipelines combine various tools and frameworks

- Hybrid environments: Processing spans on-premises and multiple clouds

- Micro-batch architectures: Increasing pipeline frequency creates more failure points

- Complex dependencies: Cascading relationships between pipeline steps

This complexity creates significant blind spots where issues can hide until they affect business outcomes.

Traditional monitoring approaches fall short in several critical areas:

- Infrastructure-focused: System metrics don’t reveal data quality issues

- Siloed visibility: Each tool provides only part of the pipeline picture

- Missing context: Hard to connect technical failures to business impact

- Reactive discovery: Problems found after they affect downstream users

- Manual correlation: Difficult to trace issues across disparate systems

These limitations force data teams to spend excessive time investigating issues rather than preventing them.

The consequences of inadequate pipeline monitoring are substantial:

- Data scientists spend up to 60% of their time handling data quality issues

- Critical business decisions are delayed or made with unreliable information

- Data engineering teams waste resources on reactive troubleshooting

- Data consumers lose trust in analytics and data products

- Opportunities for data-driven innovation are missed due to reliability concerns

Databand is a specialized data observability platform designed to monitor the health, quality, and performance of data pipelines. Unlike general-purpose monitoring tools or basic workflow schedulers, Databand provides comprehensive visibility specifically tailored to data engineering use cases.

Databand’s approach is built around several key principles:

- Data-Aware Monitoring: Focus on data characteristics and quality, not just process execution

- End-to-End Visibility: Track pipelines across multiple systems and technologies

- Context-Rich Insights: Connect technical metrics to business impact

- Proactive Detection: Identify issues before they affect downstream consumers

- Root Cause Analysis: Quickly trace problems to their source

These principles enable a fundamentally different approach to pipeline reliability.

Databand offers a comprehensive set of capabilities for data pipeline monitoring:

At its foundation, Databand provides visibility into pipeline execution:

```python
# Example: Databand integration with Airflow
from datetime import datetime

from airflow import DAG
# Airflow 2.x import path (airflow.operators.python_operator is deprecated)
from airflow.operators.python import PythonOperator
from dbnd import task, dataset


@task
def process_customer_data(input_dataset: dataset, output_path: str):
    # Databand automatically tracks:
    # - Function parameters
    # - Runtime metrics
    # - Data statistics
    # - Dependencies
    data = input_dataset.read()
    # transform_data is a placeholder for your own transformation logic
    processed_data = transform_data(data)
    processed_data.to_csv(output_path)
    return output_path


with DAG('customer_data_pipeline', start_date=datetime(2023, 1, 1)) as dag:
    process_task = PythonOperator(
        task_id='process_customer_data',
        python_callable=process_customer_data,
        op_kwargs={
            'input_dataset': 'gs://data/raw/customers.csv',
            'output_path': 'gs://data/processed/customers_processed.csv'
        }
    )
```

This observability includes:

- Execution Tracking: Monitor pipeline runs, completions, and failures

- Performance Metrics: Track runtime, resource utilization, and bottlenecks

- Dependency Mapping: Visualize relationships between pipeline components

- Historical Trends: Compare current behavior against past performance

- Alert Management: Configure notifications for critical pipeline issues
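To make execution tracking concrete, here is a minimal, library-free sketch of recording the status and duration of each run. It does not use Databand's API: `track_run`, `RUN_HISTORY`, and `load_customers` are hypothetical names introduced only for illustration, and a real platform would persist this metadata rather than keep it in memory.

```python
import functools
import time

# Hypothetical in-memory store; a real platform would persist run metadata.
RUN_HISTORY = []


def track_run(func):
    """Record status and wall-clock duration for each call to `func`."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        status = "failed"  # assume failure until the call returns normally
        try:
            result = func(*args, **kwargs)
            status = "success"
            return result
        finally:
            RUN_HISTORY.append({
                "task": func.__name__,
                "status": status,
                "duration_s": time.perf_counter() - start,
            })
    return wrapper


@track_run
def load_customers(rows):
    if not rows:
        raise ValueError("no rows to load")
    return len(rows)


load_customers([{"id": 1}, {"id": 2}])
try:
    load_customers([])
except ValueError:
    pass  # the failure is still recorded in RUN_HISTORY

print([(run["task"], run["status"]) for run in RUN_HISTORY])
```

Recording the outcome in a `finally` clause means failed runs leave a trace too, which is what makes later comparison against successful runs possible.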

Beyond process execution, Databand provides visibility into the data itself:

```python
# Example: Data quality monitoring with Databand
from dbnd import task, dataset, log_metric
import pandas as pd


@task
def validate_order_data(input_data: dataset):
    # Databand automatically captures data statistics:
    # - Record counts
    # - Schema details
    # - Value distributions
    # - Freshness metrics
    df = input_data.read()

    # Define explicit quality expectations
    data_quality = {
        'order_id_unique': df['order_id'].is_unique,
        'has_minimum_orders': len(df) >= 1000,
        'customer_id_null_percentage': df['customer_id'].isna().mean() * 100,
        'price_range_valid': (df['price'] >= 0).all() and (df['price'] < 10000).all()
    }

    # Databand tracks these metrics for anomaly detection
    for metric_name, metric_value in data_quality.items():
        log_metric(metric_name, metric_value)

    return df
```

This data-aware monitoring includes:

- Schema Tracking: Detect changes in data structure and types

- Volume Monitoring: Identify unexpected changes in data quantities

- Freshness Checks: Ensure timely data processing and delivery

- Distribution Analysis: Spot anomalies in data patterns and values

- Custom Quality Metrics: Define and track domain-specific quality indicators
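Schema tracking and freshness checks from the list above can be sketched without any particular platform. The example below is an illustration under stated assumptions, not Databand's implementation: `diff_schema` and `is_fresh` are hypothetical helpers, and schemas are represented as plain `{column: type}` dictionaries.

```python
from datetime import datetime, timedelta


def diff_schema(expected, actual):
    """Compare two {column: type} mappings and report what changed."""
    return {
        "missing": sorted(set(expected) - set(actual)),
        "added": sorted(set(actual) - set(expected)),
        "retyped": sorted(
            col for col in set(expected) & set(actual)
            if expected[col] != actual[col]
        ),
    }


def is_fresh(last_updated, now, max_age):
    """True when the newest record is no older than `max_age`."""
    return now - last_updated <= max_age


# Made-up schemas for illustration only
expected = {"order_id": "int", "customer_id": "int", "price": "float"}
actual = {"order_id": "int", "price": "str", "coupon": "str"}
changes = diff_schema(expected, actual)
print(changes)

now = datetime(2023, 1, 1, 12, 0)
print(is_fresh(datetime(2023, 1, 1, 11, 0), now, timedelta(hours=2)))
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the freshness check deterministic and easy to test.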

When issues occur, Databand accelerates troubleshooting:

- Lineage Visualization: Trace data flows to identify affected downstream processes

- Contextual Information: See parameter settings, environment variables, and code versions

- Log Integration: Access relevant logs directly within the context of pipeline execution

- Comparative Analysis: Contrast failed runs with successful historical executions

- Artifact Examination: Inspect data inputs and outputs to identify quality issues

These capabilities dramatically reduce mean time to resolution for pipeline problems.
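Comparative analysis, at its simplest, means diffing the context of a failed run against the last successful one. The sketch below assumes run context is available as a flat dictionary; `diff_run_context` and the sample values are hypothetical and not part of any Databand API.

```python
def diff_run_context(good_run, bad_run):
    """Return {key: (good_value, bad_value)} for every setting that differs."""
    keys = set(good_run) | set(bad_run)
    return {
        key: (good_run.get(key), bad_run.get(key))
        for key in sorted(keys)
        if good_run.get(key) != bad_run.get(key)
    }


# Made-up run contexts for illustration only
last_success = {"code_version": "a1b2c3", "batch_size": 500, "env": "prod"}
failed = {"code_version": "d4e5f6", "batch_size": 500, "env": "prod", "retries": 0}

differences = diff_run_context(last_success, failed)
for key, (before, after) in differences.items():
    print(f"{key}: {before!r} -> {after!r}")
```

Settings that are identical in both runs drop out, so the output narrows attention to what actually changed, here the code version and a newly introduced `retries` setting.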

Databand connects with the broader data ecosystem:

- Orchestration Tools: Apache Airflow, Prefect, Dagster, Luigi

- Processing Frameworks: Spark, Pandas, dbt, SQL

- Data Platforms: Snowflake, Databricks, BigQuery, Redshift

- Storage Systems: S3, GCS, HDFS, Azure Blob Storage

- Notification Systems: Slack, MS Teams, Email, PagerDuty

This broad integration capability provides unified visibility across diverse environments.

Successfully implementing Databand involves several key stages:

The first step is mapping your data ecosystem and planning integrations:

- Pipeline Inventory: Document key data pipelines and their components

- Critical Path Analysis: Identify highest-value monitoring targets

- Integration Approach: Determine whether to use agents, API integration, or native connectors

- Metadata Strategy: Define what pipeline metadata to capture and analyze

- Success Criteria: Establish metrics for implementation success

This planning ensures focused implementation and quick time-to-value.

The implementation typically begins with core monitoring capabilities:

- Platform Setup: Deploy Databand according to your environment requirements

- Core Integrations: Connect priority orchestration and processing systems

- Basic Monitoring: Configure execution tracking and alerting

- Team Onboarding: Train key personnel on the platform

- Initial Validation: Confirm monitoring accuracy with test pipelines

This phase establishes foundational visibility into pipeline operations.

With basics in place, teams then implement more sophisticated monitoring:

- Data Quality Rules: Define indicators of data health for key datasets

- Custom Metrics: Implement domain-specific monitoring requirements

- Advanced Alerting: Configure proactive notifications based on data patterns

- Dashboard Creation: Build visualization and reporting for various stakeholders

- Anomaly Detection: Enable machine learning-based pattern recognition

This enhancement phase transforms basic monitoring into comprehensive observability.
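As a rough illustration of pattern-based alerting, the sketch below flags an unusual row count with a simple z-score test. This is a basic statistical stand-in, not the machine learning approach a platform may use; `is_volume_anomaly`, the threshold of 3.0, and the sample counts are assumptions made for the example.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations
    away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu  # any change from a constant history is unusual
    return abs(current - mu) / sigma > threshold


# Made-up daily row counts for illustration only
daily_rows = [1000, 1020, 980, 1010, 990]

print(is_volume_anomaly(daily_rows, 400))
print(is_volume_anomaly(daily_rows, 1005))
```

A fixed threshold like this ignores seasonality and trends, so it is best read as a starting point rather than a replacement for learned baselines.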

The final stage embeds Databand into everyday data operations:

- Workflow Integration: Incorporate Databand insights into regular development processes

- SLA Definition: Establish formal reliability targets for critical pipelines

- Governance Alignment: Connect monitoring with broader data governance initiatives

- Continuous Improvement: Regularly refine monitoring based on emerging patterns

- Cultural Adoption: Build observability into team responsibilities and practices

This operational integration ensures sustainable, long-term value from the platform.
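An SLA only becomes useful once compliance is measured. The following sketch computes the share of runs that finished before a daily deadline. The function name, the 06:00 deadline, and the completion times are all hypothetical values chosen for illustration.

```python
from datetime import datetime, time


def sla_compliance(completions, deadline):
    """Fraction of runs that finished at or before the daily deadline."""
    if not completions:
        return None  # no runs, so compliance is undefined
    on_time = sum(1 for finished in completions if finished.time() <= deadline)
    return on_time / len(completions)


# Made-up completion times for illustration only
runs = [
    datetime(2023, 1, 1, 5, 40),
    datetime(2023, 1, 2, 6, 15),  # late
    datetime(2023, 1, 3, 5, 55),
    datetime(2023, 1, 4, 5, 59),
]

rate = sla_compliance(runs, deadline=time(6, 0))
print(f"SLA compliance: {rate:.0%}")
```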

Databand has been successfully applied across industries to solve diverse data pipeline challenges:

A global financial institution implemented Databand to ensure reliable regulatory reporting:

- Challenge: Ensuring accuracy and timeliness of complex risk reporting pipelines

- Implementation:

  - Integrated Databand with Airflow and Spark processing

  - Implemented data volume and completeness monitoring

  - Created alerts for regulatory SLA breaches

  - Established data quality checks for critical financial metrics

- Results:

  - 85% reduction in time to detect pipeline issues

  - Eliminated regulatory penalties from late reporting

  - Improved data engineering team efficiency by 30%

  - Enhanced trust in automated financial analytics

An online retailer used Databand to improve the reliability of customer analytics:

- Challenge: Ensuring consistent, accurate customer data for personalization and marketing

- Implementation:

  - Monitored data freshness for real-time customer analytics

  - Tracked schema consistency across multiple pipeline stages

  - Implemented anomaly detection for customer behavior metrics

  - Created unified visibility across cloud and on-premises systems

- Results:

  - Identified data quality issues affecting 15% of customer segmentation

  - Reduced pipeline failures by 40%

  - Accelerated troubleshooting from days to hours

  - Improved marketing campaign performance through more reliable data

A healthcare provider deployed Databand to monitor clinical data processing:

- Challenge: Ensuring reliable integration of patient data from multiple sources

- Implementation:

  - Monitored data completeness for patient records

  - Implemented validation for medical coding accuracy

  - Created alerts for patient data processing delays

  - Established lineage tracking across the clinical data pipeline

- Results:

  - Improved patient record matching accuracy by 25%

  - Identified data quality issues before they affected clinical decisions

  - Reduced data integration incidents by 60%

  - Enhanced compliance reporting capabilities

Beyond basic monitoring, Databand enables several advanced observability patterns:

Advanced implementations use historical patterns to predict potential issues:

```python
# Conceptual example of predictive monitoring
from dbnd import task, dataset, log_metric
import pandas as pd
from sklearn.ensemble import IsolationForest


@task
def monitor_pipeline_health(pipeline_metrics_history: dataset):
    # Load historical pipeline performance data
    history_df = pipeline_metrics_history.read()

    # Train anomaly detection model
    model = IsolationForest(contamination=0.05)
    model.fit(history_df[['duration', 'records_processed', 'error_rate']])

    # Get current metrics as [duration, records_processed, error_rate]
    # (get_current_pipeline_metrics is a placeholder helper)
    current_metrics = get_current_pipeline_metrics()

    # Score the current run; lower scores are more anomalous
    anomaly_score = model.decision_function([current_metrics])[0]
    # transform_to_probability is a placeholder that maps the score to [0, 1]
    failure_probability = transform_to_probability(anomaly_score)

    # Log for tracking
    log_metric("pipeline_failure_probability", failure_probability)

    # Take preventive action if needed
    # (trigger_preventive_maintenance is a placeholder helper)
    if failure_probability > 0.7:
        trigger_preventive_maintenance()
```

This predictive approach enables:

- Early Warning System: Identify potential failures before they occur

- Preventive Maintenance: Address issues during non-critical periods

- Resource Optimization: Allocate engineering time to highest-risk areas

- Continuous Improvement: Learn from near-misses to enhance reliability

Advanced implementations often establish formal monitoring of data “contracts” between teams:

```python
# Example: Data contract monitoring
from dbnd import task, dataset, log_metric, config
import great_expectations as ge
import pandas as pd


@config
class OrderDataContract:
    min_orders_per_day = 1000
    # Expressed as a fraction: 0.01 means at most 1% nulls
    max_null_customer_percentage = 0.01
    required_fields = ['order_id', 'customer_id', 'amount', 'timestamp']
    sla_freshness_hours = 2


@task
def validate_order_data_contract(order_data: dataset):
    # Read and validate the dataset
    df = order_data.read()

    # Create validation context
    # (legacy Great Expectations Dataset API; newer releases use a different interface)
    context = ge.dataset.PandasDataset(df)

    # Validate schema contract
    for field in OrderDataContract.required_fields:
        context.expect_column_to_exist(field)

    # Validate volume contract
    context.expect_table_row_count_to_be_between(
        min_value=OrderDataContract.min_orders_per_day,
        max_value=None
    )

    # Validate quality contract (the null tolerance is applied to every required field)
    for field in OrderDataContract.required_fields:
        context.expect_column_values_to_not_be_null(
            field,
            mostly=1.0 - OrderDataContract.max_null_customer_percentage
        )

    # Validate freshness contract
    # (assumes `timestamp` is a timezone-naive datetime column)
    latest_timestamp = df['timestamp'].max()
    hours_delay = (pd.Timestamp.now() - latest_timestamp).total_seconds() / 3600
    freshness_compliant = hours_delay <= OrderDataContract.sla_freshness_hours

    # Run validation once and reuse the result
    result = context.validate()

    # Log contract compliance
    log_metric("contract_compliance", result.success)
    log_metric("freshness_compliance", freshness_compliant)

    return result
```

This contract-based approach enables:

- Clear Expectations: Formal definitions of data requirements

- Team Accountability: Transparent responsibility for data quality

- SLA Monitoring: Tracking compliance with service agreements

- Change Management: Controlled evolution of data contracts

Advanced users leverage Databand to understand relationships between different pipelines:

- Dependency Visualization: Map how data flows between different pipeline systems

- Impact Analysis: Identify downstream effects of pipeline changes or issues

- Resource Correlation: Understand how pipelines interact in shared environments

- Business Process Mapping: Connect technical pipelines to business operations

- Cross-Team Visibility: Create shared understanding across organizational boundaries

This correlation capability provides a comprehensive view of the data ecosystem.
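Impact analysis boils down to a graph traversal over pipeline dependencies. The sketch below uses a breadth-first search to list everything downstream of a failing dataset. The dependency map and the `downstream_of` helper are hypothetical; a real deployment would derive the graph from collected lineage metadata.

```python
from collections import deque


def downstream_of(graph, start):
    """Return every node reachable from `start` (excluding `start`), sorted."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen and child != start:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


# Made-up dependency map: each key feeds the datasets in its list
dependencies = {
    "raw_orders": ["clean_orders"],
    "clean_orders": ["daily_revenue", "customer_segments"],
    "customer_segments": ["marketing_dashboard"],
    "daily_revenue": [],
}

affected = downstream_of(dependencies, "clean_orders")
print(affected)
```

The `seen` set keeps the traversal from looping if the dependency map ever contains a cycle.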

Organizations implementing Databand typically realize several key benefits:

The financial impact of improved pipeline reliability is significant:

- Reduced Downtime: 30-70% decrease in pipeline failures and delays

- Faster Resolution: 50-80% reduction in mean time to detection and resolution

- Engineering Efficiency: 25-40% reduction in time spent troubleshooting

- Business Protection: Prevention of costly decisions based on unreliable data

These metrics translate directly to bottom-line benefits through operational efficiency and risk reduction.
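Figures such as mean time to detection (MTTD) and mean time to resolution (MTTR) are straightforward to compute from an incident log, which helps when checking whether improvements like those above hold in your own environment. The sketch below uses made-up incident records and a hypothetical `mean_minutes` helper. It measures MTTR from detection to resolution, which is one common convention among several.

```python
from datetime import datetime


def mean_minutes(incidents, start_key, end_key):
    """Average elapsed minutes between two timestamps across incidents."""
    durations = [
        (incident[end_key] - incident[start_key]).total_seconds() / 60
        for incident in incidents
    ]
    return sum(durations) / len(durations)


# Made-up incident records for illustration only
incidents = [
    {
        "started": datetime(2023, 1, 1, 8, 0),
        "detected": datetime(2023, 1, 1, 8, 30),
        "resolved": datetime(2023, 1, 1, 10, 0),
    },
    {
        "started": datetime(2023, 1, 5, 14, 0),
        "detected": datetime(2023, 1, 5, 14, 10),
        "resolved": datetime(2023, 1, 5, 14, 40),
    },
]

mttd = mean_minutes(incidents, "started", "detected")
mttr = mean_minutes(incidents, "detected", "resolved")
print(f"MTTD: {mttd:.0f} min, MTTR: {mttr:.0f} min")
```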

Beyond operational improvements, Databand builds confidence in data assets:

- Verified Reliability: Demonstrated track record of data pipeline performance

- Transparent Quality: Clear visibility into data characteristics and issues

- Consistent Delivery: Predictable data availability for business processes

- Issue Transparency: Open communication about data challenges and resolutions

This trust enables greater organizational adoption of data-driven practices.

Reliable pipelines create a foundation for data innovation:

- Faster Iteration: More time building new capabilities, less time fixing issues

- Reduced Risk: Confidence to deploy new data products and pipelines

- Higher Quality Insights: Better business decisions based on reliable information

- Self-Service Enablement: Empowered business users with trusted data

Organizations with reliable pipelines can innovate faster with lower risk.

As data ecosystems continue to evolve, pipeline observability is advancing in several key directions:

Machine learning is increasingly central to advanced pipeline monitoring:

- Autonomous Anomaly Detection: Self-learning systems that identify unusual patterns

- Root Cause Prediction: AI-driven suggestions for probable failure causes

- Optimal Resolution Paths: Recommended troubleshooting approaches based on historical successes

- Preventive Intervention: Automated adjustments to prevent predicted failures

These capabilities promise to further reduce manual monitoring overhead.

Pipeline observability is becoming central to DataOps practices:

- Continuous Quality: Quality validation throughout the development lifecycle

- Observability as Code: Pipeline monitoring defined alongside pipeline logic

- Automated Remediation: Self-healing pipelines for common failure patterns

- Service Level Objectives: Formal reliability targets for data services

This integration embeds reliability into the fabric of data engineering.

The distinction between application and data observability is blurring:

- Full-Stack Visibility: Connected monitoring across applications and data

- Business Context Integration: Technical metrics tied directly to business processes

- End-User Experience Correlation: Connecting data quality to application performance

- Shared Observability Platforms: Unified tooling for all technical teams

This convergence creates more comprehensive visibility into technical systems.

Organizations achieving the greatest success with Databand follow several key practices:

Focus initial implementation on business-critical data flows:

- Identify pipelines directly supporting key business operations

- Prioritize processes with known reliability challenges

- Focus on high-visibility analytics and reporting pipelines

- Consider data feeding machine learning models and AI systems

- Target pipelines with cross-team dependencies

This focused approach delivers maximum initial value and builds momentum.

Establish explicit responsibility for pipeline reliability:

- Assign ownership for key pipeline components

- Create standardized incident response workflows

- Define escalation paths for critical failures

- Establish communication templates for stakeholders

- Implement post-incident review processes

These operational elements ensure technical capabilities translate to business outcomes.

Implement monitoring that balances breadth and detail:

- Establish basic execution monitoring across all pipelines

- Implement detailed data quality checks for critical datasets

- Define appropriate alerting thresholds to minimize noise

- Focus advanced monitoring on highest-business-impact areas

- Gradually increase monitoring sophistication as teams mature

This balanced approach maximizes value while avoiding monitoring overload.
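One simple way to keep alert noise down is to require several consecutive threshold breaches before notifying anyone. The sketch below illustrates that rule. `should_alert`, the 3.0 threshold, and the sample readings are assumptions made for the example, not a description of how Databand evaluates alerts.

```python
def should_alert(values, threshold, consecutive=3):
    """Alert only when the last `consecutive` readings all exceed `threshold`."""
    if len(values) < consecutive:
        return False
    return all(value > threshold for value in values[-consecutive:])


# Made-up null-percentage readings for illustration only
null_pct = [0.5, 4.2, 0.7, 3.1, 3.8, 4.4]

print(should_alert(null_pct[:3], threshold=3.0))  # isolated spike
print(should_alert(null_pct, threshold=3.0))      # sustained breach
```

The trade-off is latency: a sustained problem is reported a few readings later in exchange for ignoring one-off spikes.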

Support technical capabilities with organizational practices:

- Include pipeline reliability metrics in team objectives

- Celebrate reliability improvements and issue prevention

- Create shared understanding of pipeline health across teams

- Implement “reliability champions” within data organizations

- Establish regular pipeline health reviews

This cultural dimension ensures sustainable, long-term impact.

As organizations increasingly depend on data for critical business operations, the reliability of data pipelines has become a strategic imperative. Pipeline failures, quality issues, and performance problems can silently undermine the data assets that support key business decisions and customer experiences. Databand addresses this challenge by providing specialized observability designed specifically for modern data pipelines.

By combining comprehensive execution tracking with data-aware monitoring and powerful troubleshooting capabilities, Databand enables data teams to shift from reactive firefighting to proactive reliability management. This transformation yields quantifiable benefits through reduced downtime, improved efficiency, and enhanced trust in data assets.

The most successful implementations of Databand balance technical capabilities with organizational practices, creating not just better monitoring but a fundamentally different approach to pipeline reliability. In a world where data increasingly drives business success, the ability to ensure that data pipelines operate reliably isn’t just an engineering concern—it’s a business necessity.

As data ecosystems continue to grow in complexity, platforms like Databand will play an increasingly vital role in ensuring that organizations can depend on their most critical data flows. The future of data engineering lies not just in building more powerful pipelines, but in creating reliable, observable data systems that organizations can trust to deliver consistent business value.


## Why should I use Databand?

Choosing Databand as your data observability platform can significantly enhance your ability to manage and optimize data workflows within your data infrastructure. Databand is a proactive data monitoring and observability tool designed to detect, diagnose, and resolve data quality issues in data pipelines before they impact your business operations. Here are several compelling reasons why selecting Databand could be beneficial for your organization:

### 1. **Proactive Data Quality Monitoring**

Databand offers proactive monitoring capabilities that allow teams to detect issues in data quality and pipeline performance early. By providing alerts on anomalies, failures, and performance degradations, Databand helps prevent the costly consequences of data downtime and errors in analytics and operational systems.

### 2. **End-to-End Data Pipeline Observability**

Databand provides comprehensive visibility into your data pipelines from end to end. It tracks data lineage, metadata, and the health of data processes, enabling you to understand dependencies and the impact of data issues across your systems. This visibility is crucial for complex environments where changes in one part of the system can affect multiple downstream processes.

### 3. **Root Cause Analysis**

With features designed to facilitate quick and accurate root cause analysis, Databand reduces the time and effort required to diagnose and fix data issues. It allows you to drill down into specific incidents to understand why they occurred, examining everything from changes in data volume to shifts in data quality metrics.

### 4. **Integration with Popular Data Processing Tools**

Databand integrates seamlessly with a wide range of data processing frameworks and orchestration tools such as Apache Airflow, Apache Spark, and Kubernetes, among others. This integration capability ensures that you can easily implement Databand within your existing data infrastructure without significant changes or disruptions.

### 5. **Customizable Alerts and Notifications**

You can customize alerts and notifications based on specific thresholds for data quality, volume, and processing metrics. This customization ensures that the right team members receive relevant alerts at the right time, enabling swift action to mitigate potential issues.
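Threshold-based routing of this kind can be pictured as an ordered rule list. The following is a minimal sketch under stated assumptions: the rule format, channel names, and `route_alert` helper are hypothetical and do not reflect Databand's actual alert configuration.

```python
def route_alert(alert, rules, default="data-eng-oncall"):
    """Return the channel of the first rule matching the alert's metric and value."""
    for rule in rules:
        if alert["metric"] == rule["metric"] and alert["value"] >= rule["min_value"]:
            return rule["channel"]
    return default


# Hypothetical rules, ordered from most to least severe
rules = [
    {"metric": "null_pct", "min_value": 5.0, "channel": "pagerduty"},
    {"metric": "null_pct", "min_value": 1.0, "channel": "slack-data-quality"},
]

print(route_alert({"metric": "null_pct", "value": 7.5}, rules))
print(route_alert({"metric": "null_pct", "value": 2.0}, rules))
print(route_alert({"metric": "row_count", "value": 10}, rules))
```

Because the first matching rule wins, the order of the list encodes severity, and unmatched alerts fall back to a default channel instead of being dropped.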

### 6. **Enhanced Collaboration**

Databand fosters collaboration among data engineers, scientists, and analysts by providing a shared platform for monitoring data health and pipeline performance. This shared view helps align teams on data quality goals and facilitates more efficient troubleshooting and decision-making.

### 7. **Cost Reduction**

By enabling faster detection and resolution of data issues, Databand helps reduce the costs associated with data downtime and erroneous data-driven decisions. It also optimizes the performance of data operations, which can lead to cost savings in data processing and storage.

### 8. **Regulatory Compliance and Governance**

For organizations subject to strict data governance and regulatory compliance, Databand’s tracking and auditing capabilities ensure transparency and accountability in data handling and processing. This feature supports compliance with regulations such as GDPR, HIPAA, and others by providing historical records of data quality and incidents.

### Conclusion

If your organization relies heavily on data and requires robust mechanisms to ensure the integrity, reliability, and timeliness of your data products, Databand is an excellent choice. Its proactive monitoring, deep integrations with existing tools, and comprehensive analytics features make it a powerful ally in maintaining the health of your data ecosystem and supporting your data-driven business objectives.

## When should I use Databand?

Databand is a data observability platform designed to help organizations proactively monitor, manage, and resolve issues in their data pipelines. Choosing to implement Databand is particularly beneficial in specific scenarios where data quality, pipeline efficiency, and system reliability are critical. Here are several situations where using Databand would be especially advantageous:

### 1. **Complex Data Environments**

If your organization manages complex data workflows that involve multiple sources and destinations, Databand can provide the necessary visibility to ensure smooth operations. It is particularly useful in environments where data is constantly moving and changing, such as in big data platforms, data lakes, and data warehouses.

### 2. **High Dependency on Data Accuracy**

Industries such as finance, healthcare, and logistics, where decisions heavily rely on the accuracy and timeliness of data, will find Databand indispensable. It helps ensure that the data feeding into critical decision-making processes is correct, complete, and delivered on time.

### 3. **Frequent Data Pipeline Failures**

If your data pipelines frequently encounter failures, slowdowns, or disruptions, Databand can help identify and diagnose these issues quickly. By providing tools for root cause analysis and real-time monitoring, it helps reduce downtime and improve the reliability of data operations.

### 4. **Scaling Data Operations**

As organizations scale their data operations, the complexity and volume of data tend to increase. Databand aids in managing this complexity by providing scalable observability solutions that grow with your infrastructure, helping to maintain oversight and control as your data environment expands.

### 5. **Regulatory Compliance and Data Governance**

For organizations subject to regulatory requirements related to data handling and processing (such as GDPR in Europe or HIPAA in the U.S.), Databand supports compliance efforts by tracking data quality, lineage, and processing activities. This is critical for maintaining data governance standards and providing audit trails.

### 6. **Optimizing Cloud Data Management Costs**

Organizations looking to optimize cloud costs related to data processing and storage will benefit from Databand’s ability to monitor and report on resource usage. It can help identify inefficiencies and unnecessary expenses in data operations, allowing for more cost-effective management of cloud resources.

### 7. **Collaborative Data Teams**

In environments where multiple teams (data engineers, data scientists, and analysts) need to collaborate on data projects, Databand provides a common platform for monitoring and communicating about data health. This improves collaboration and speeds up problem resolution.

### 8. **Preventing Data Incidents**

Organizations that have experienced significant setbacks due to data incidents—such as data corruption, loss, or breaches—will find Databand’s proactive monitoring and alerting capabilities crucial in preventing future incidents. It ensures that potential issues are identified and addressed before they can impact the business.

### Conclusion

Databand is ideal for any organization that seeks to maintain high standards of data quality, ensure the reliability of data workflows, and foster a proactive approach to data management. By implementing Databand, you can significantly reduce the risks associated with data quality issues, improve operational efficiency, and support more informed decision-making across your organization.