Great Expectations: Transforming Data Reliability Through Automated Validation and Documentation

In the data-driven landscape of modern business, the reliability of data has never been more critical. As organizations increasingly base strategic decisions on their data assets, the consequences of poor data quality (inaccurate analyses, flawed predictions, and misguided business strategies) can be severe. Enter Great Expectations, an open-source Python framework that helps data teams ensure data quality through automated validation and comprehensive documentation.

Great Expectations brings software engineering best practices to data engineering and data science, tackling the persistent challenge of data quality at scale. By providing a framework for expressing what you “expect” from your data, validating those expectations, and documenting them automatically, Great Expectations helps organizations build trust in their data and the processes that transform it.

This article explores how Great Expectations works, its key capabilities, implementation strategies, and real-world applications that can transform your approach to data quality management.

Before diving into Great Expectations, it’s worth understanding the fundamental data quality challenges it addresses:

Most organizations struggle with a persistent “trust gap” in their data:

- Data scientists are often estimated to spend 60-80% of their time cleaning and validating data rather than generating insights

- Unexpected schema changes or data anomalies break downstream processes

- Teams develop redundant validation logic across different systems

- Documentation about data constraints and quality becomes outdated or remains tribal knowledge

- Data issues are often discovered only after they’ve impacted business operations

These challenges compound as data volumes increase and data ecosystems become more complex.

The business impact of data quality issues is substantial:

- IBM estimates that poor data quality costs the US economy $3.1 trillion annually

- Gartner research shows the average financial impact of poor data quality on organizations is $12.9 million per year

- Data scientists lose a large share of their time, roughly 45% by one commonly cited survey estimate, to data preparation and quality issues rather than analysis

- According to Harvard Business Review, only 3% of companies’ data meets basic quality standards

These statistics highlight why data quality has become a top priority for data-driven organizations.

Great Expectations is an open-source Python framework that helps data teams validate, document, and profile their data to eliminate pipeline debt, collaborate effectively, and manage data quality throughout the data lifecycle.

Great Expectations is built around several key principles:

- Expectations as a First-Class Citizen: Formalize what you expect from your data

- Test-Driven Data Development: Apply software testing principles to data

- Automated Documentation: Generate human-readable documentation automatically

- Data Profiling for Discovery: Use statistics to understand and validate data

- Integration-First Design: Work within existing data ecosystems rather than replacing them

These principles align with modern data engineering practices and complement existing tools and workflows.

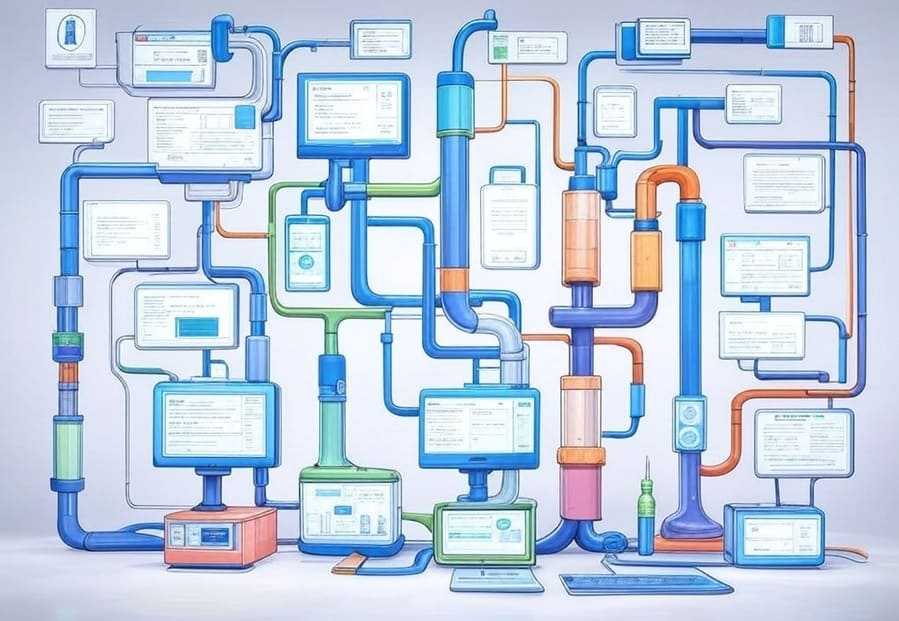

Great Expectations has several core components that work together to provide comprehensive data quality management:

At the heart of Great Expectations are “expectations” – declarative statements about what you expect from your data:

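    # Example: creating basic expectations.
    # Note: ge.read_csv and the expect_* methods on the returned dataset belong
    # to the legacy Pandas dataset API found in older releases.
    import great_expectations as ge

    # Load the data as a Great Expectations dataset
    df = ge.read_csv("customer_data.csv")

    # Define expectations; each call validates the data and returns a result
    df.expect_column_values_to_not_be_null("customer_id")
    df.expect_column_values_to_be_between("age", min_value=0, max_value=120)
    df.expect_column_values_to_match_regex("email", r"[^@]+@[^@]+\.[^@]+")
    df.expect_column_mean_to_be_between("purchase_amount", min_value=50, max_value=200)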

These expectations are readable, declarative statements that express data constraints, patterns, and statistical properties. Great Expectations provides over 50 built-in expectations and supports custom expectations for organization-specific requirements.
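To make the idea concrete without tying it to any particular release of the library, here is a minimal plain-Python sketch of what an expectation boils down to: a declarative check that returns a structured result. The function `expect_values` and the result keys are hypothetical names chosen for illustration; they are not the Great Expectations API.

```python
from typing import Any, Callable, Dict, List


def expect_values(rows: List[Dict[str, Any]], column: str,
                  predicate: Callable[[Any], bool]) -> Dict[str, Any]:
    """Check every value in `column` and report the ones that fail."""
    values = [row.get(column) for row in rows]
    unexpected = [v for v in values if not predicate(v)]
    return {
        "column": column,
        "success": not unexpected,
        "element_count": len(values),
        "unexpected_count": len(unexpected),
        "unexpected_values": unexpected[:5],  # keep the report small
    }


customers = [
    {"customer_id": 1, "age": 34},
    {"customer_id": 2, "age": 150},     # age out of range
    {"customer_id": None, "age": 28},   # missing id
]

not_null = expect_values(customers, "customer_id", lambda v: v is not None)
age_range = expect_values(customers, "age",
                          lambda v: v is not None and 0 <= v <= 120)
```

The point of the structured result is that the same check can feed a pipeline gate, an alert, and a documentation page without being rewritten for each.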

Validators are the execution engines that check if data meets your expectations:

- Dataset Validators: Check data in memory (Pandas DataFrames, Spark DataFrames)

- Batch Validators: Validate data from databases, files, or other sources

- Checkpoints: Bundle expectation suites, batches of data, and follow-up actions so validation can run in production data pipelines

Validators provide a consistent interface for validating data regardless of where it resides.

The Data Context manages the configuration and execution environment for Great Expectations:

    # Initializing a Data Context (Data Context API from pre-1.0 releases)
    import great_expectations as ge
    from great_expectations.core.batch import BatchRequest

    # Create or load a Data Context
    context = ge.get_context()

    # Describe which data to validate
    batch_request = BatchRequest(
        datasource_name="my_datasource",
        data_connector_name="default_inferred_data_connector_name",
        data_asset_name="customer_data",
    )

    # Run a checkpoint against that batch request
    results = context.run_checkpoint(
        checkpoint_name="my_checkpoint",
        batch_request=batch_request,
    )

This centralized configuration enables consistent validation across environments from development to production.

One of Great Expectations’ most powerful features is automatic documentation generation:

- Expectation Suite Documentation: Human-readable documentation of all expectations

- Validation Results: Visual reports showing which expectations passed or failed

- Data Profiling Reports: Statistical summaries of your data

These HTML documents provide transparency into data quality and serve as a communication tool between data producers and consumers.
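As a rough illustration of how validation results can become a shareable report, the sketch below renders a list of results as a small HTML table. It is a plain-Python stand-in, not how Data Docs are actually built; `render_results_html` and the result fields are hypothetical.

```python
from html import escape
from typing import Any, Dict, List


def render_results_html(suite_name: str, results: List[Dict[str, Any]]) -> str:
    """Render pass/fail results as a minimal HTML table."""
    rows = []
    for result in results:
        status = "PASS" if result["success"] else "FAIL"
        rows.append(
            f"<tr><td>{escape(str(result['expectation']))}</td>"
            f"<td>{status}</td></tr>"
        )
    return (
        f"<h1>{escape(suite_name)}</h1>\n"
        "<table>\n<tr><th>Expectation</th><th>Status</th></tr>\n"
        + "\n".join(rows)
        + "\n</table>"
    )


report = render_results_html("customer_suite", [
    {"expectation": "customer_id is not null", "success": True},
    {"expectation": "age between 0 and 120", "success": False},
])
```

Because the report is generated from the same results that gate the pipeline, it cannot drift out of date the way hand-written documentation does.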

Successfully implementing Great Expectations requires a thoughtful approach tailored to your organization’s needs:

Most successful implementations follow an iterative pattern:

- Start Small: Begin with one critical dataset and a few key expectations

- Profile Data: Use the profiling capabilities to understand current data patterns

- Define Expectations: Create initial expectations based on business rules and data understanding

- Integrate Validation: Add validation to existing pipelines or workflows

- Generate Documentation: Create and share Data Docs with stakeholders

- Expand Coverage: Gradually add more datasets and expectations

- Automate Response: Implement actions based on validation results

This approach delivers value quickly while building toward comprehensive quality management.

Great Expectations is designed to integrate with existing data stacks:

    # Example: integrating with Airflow
    from datetime import datetime, timedelta

    import great_expectations as ge
    from airflow import DAG
    from airflow.operators.python import PythonOperator  # Airflow 2 import path


    def validate_data():
        """Run the checkpoint and fail the task if validation fails."""
        context = ge.get_context()
        results = context.run_checkpoint(
            checkpoint_name="daily_customer_validation"
        )
        if not results["success"]:
            raise Exception("Data validation failed!")
        # Nothing is returned: Airflow would try to serialize a return value to XCom


    default_args = {
        'owner': 'data_team',
        'depends_on_past': False,
        'start_date': datetime(2023, 1, 1),
        'email_on_failure': True,
        'retries': 1,
        'retry_delay': timedelta(minutes=5),
    }

    dag = DAG(
        'daily_data_validation',
        default_args=default_args,
        schedule_interval='0 0 * * *',  # daily at midnight
        catchup=False,  # do not backfill every day since start_date
    )

    validate_task = PythonOperator(
        task_id='validate_customer_data',
        python_callable=validate_data,
        dag=dag,
    )

Similar integrations can be built for other orchestration tools like Prefect, Dagster, or Apache Beam.

Great Expectations connects with various data sources:

- Relational Databases: SQL Server, PostgreSQL, MySQL, Oracle, etc.

- Data Warehouses: Snowflake, BigQuery, Redshift, etc.

- File Systems: Local files, S3, GCS, HDFS, etc.

- Processing Frameworks: Spark, Pandas, Dask, etc.

This flexibility allows validation to happen where your data resides.

Incorporating data validation into CI/CD pipelines:

    # Example: GitHub Actions workflow for data validation
    name: Data Validation

    on:
      schedule:
        - cron: '0 0 * * *'  # Daily at midnight (UTC)
      workflow_dispatch:  # Allow manual triggers

    jobs:
      validate:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.10'
          - name: Install dependencies
            # Pin a release that still ships the CLI commands used below
            run: |
              python -m pip install --upgrade pip
              pip install great_expectations
          - name: Run validation
            run: |
              great_expectations checkpoint run daily_validation
          - name: Publish Data Docs
            if: always()
            run: |
              great_expectations docs build
              # Additional steps to publish docs

This approach enables data validation as part of the software development lifecycle.

As your Great Expectations implementation matures, consider these advanced patterns:

Implement multiple validation levels with different consequences:

- Warning Level: Alert but don’t fail pipelines

- Error Level: Block data from proceeding to production

- Critical Level: Trigger incident response procedures

This nuanced approach balances quality requirements with operational needs.
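One way to sketch these levels in code is to tag each check with a severity and let the most severe failure decide what happens next. This is a plain-Python illustration under assumed names (`Severity`, `decide_action`, and the action strings are all hypothetical), not a built-in Great Expectations feature.

```python
from enum import IntEnum
from typing import Any, Dict, List


class Severity(IntEnum):
    WARNING = 1   # alert, but let the pipeline continue
    ERROR = 2     # block data from reaching production
    CRITICAL = 3  # trigger incident response


def decide_action(results: List[Dict[str, Any]]) -> str:
    """Map the most severe failed check to a pipeline action."""
    failed = [r["severity"] for r in results if not r["success"]]
    if not failed:
        return "proceed"
    worst = max(failed)
    if worst == Severity.WARNING:
        return "alert_only"
    if worst == Severity.ERROR:
        return "block_pipeline"
    return "open_incident"


run = [
    {"check": "email matches pattern", "severity": Severity.WARNING, "success": False},
    {"check": "customer_id not null", "severity": Severity.ERROR, "success": True},
]
action = decide_action(run)
```

In practice the same effect is often achieved by splitting expectations into separate suites per severity and attaching different follow-up actions to each.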

Create hierarchical expectations for different data contexts:

- Base Expectations: Apply to all data of a certain type

- Domain-Specific Expectations: Add rules for specific business domains

- Pipeline-Specific Expectations: Include transformation-specific validations

This approach promotes reuse while allowing for specialization.
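A minimal sketch of this layering, assuming expectations are stored as plain dictionaries: later layers add rules or override a rule for the same column. The helper `layer_suites` and the dictionary keys are hypothetical and only illustrate the composition idea.

```python
from typing import Any, Dict, List, Tuple

Expectation = Dict[str, Any]


def layer_suites(*layers: List[Expectation]) -> List[Expectation]:
    """Merge layers in order; a later layer overrides the same (type, column)."""
    merged: Dict[Tuple[str, str], Expectation] = {}
    for layer in layers:
        for expectation in layer:
            merged[(expectation["type"], expectation["column"])] = expectation
    return list(merged.values())


base = [
    {"type": "not_null", "column": "order_id"},
    {"type": "between", "column": "amount", "min": 0, "max": 1_000_000},
]
retail_domain = [{"type": "between", "column": "amount", "min": 0, "max": 500}]
daily_load = [{"type": "unique", "column": "order_id"}]

suite = layer_suites(base, retail_domain, daily_load)
```

Here the retail layer tightens the generic `amount` range while the base `not_null` rule is inherited unchanged.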

For large-scale implementations, automate expectation creation:

- Use data profiling to generate initial expectations

- Leverage historical data patterns to define statistical thresholds

- Extract business rules from documentation or code

- Apply machine learning to identify anomaly detection rules

This automation accelerates implementation across large data estates.
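As an example of the second bullet, a statistical threshold can be proposed from historical values instead of being hand-picked. The sketch below assumes a simple mean plus or minus `k` standard deviations rule; `suggest_range` and the output format are hypothetical, and real data may call for a more robust statistic.

```python
import statistics
from typing import Any, Dict, List


def suggest_range(column: str, history: List[float], k: float = 3.0) -> Dict[str, Any]:
    """Propose a min/max rule at mean +/- k standard deviations of past values."""
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    mean = statistics.fmean(history)
    spread = k * statistics.stdev(history)
    return {
        "type": "between",
        "column": column,
        "min": round(mean - spread, 2),
        "max": round(mean + spread, 2),
    }


past_daily_means = [100.0, 102.0, 98.0, 101.0, 99.0]
rule = suggest_range("purchase_amount", past_daily_means)
```

A generated rule like this is a starting point for review, not a finished expectation: someone who knows the data should still confirm that the range makes business sense.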

Great Expectations is being used across industries to solve various data quality challenges:

A global bank implemented Great Expectations to address regulatory reporting requirements:

- Challenge: Ensuring accurate regulatory submissions with complete audit trails
- Implementation:
  - Defined expectations based on regulatory requirements
  - Integrated validation into the reporting pipeline
  - Generated documentation for auditors
  - Established quality gates before submission
- Results:
  - 90% reduction in reporting errors
  - 60% faster response to regulatory inquiries
  - Comprehensive documentation for auditors
  - Early detection of data issues before regulatory submission

A healthcare provider used Great Expectations to ensure reliable patient analytics:

- Challenge: Maintaining accurate patient records across multiple systems
- Implementation:
  - Created expectations for demographic data completeness
  - Implemented validation during data integration processes
  - Generated data quality scorecards for clinical departments
  - Built expectations for clinical measurements and observations
- Results:
  - Improved trust in patient analytics
  - Reduced duplicate patient records by 45%
  - Enhanced clinical decision support through reliable data
  - Better regulatory compliance for protected health information

An online retailer implemented Great Expectations for their customer data platform:

- Challenge: Creating reliable customer profiles from diverse data sources
- Implementation:
  - Defined cross-source consistency expectations
  - Implemented validation during the ETL process
  - Created expectations for customer behavior patterns
  - Generated documentation for marketing analysts
- Results:
  - 30% improvement in campaign targeting accuracy
  - Reduced customer data integration errors by 70%
  - Faster time-to-insight for customer analytics
  - Improved trust in personalization algorithms

Beyond basic validation, Great Expectations offers several advanced capabilities:

Great Expectations can analyze your data and suggest appropriate expectations:

    # Profiling example (legacy profiler API from older releases)
    import great_expectations as ge
    from great_expectations.profile.user_configurable_profiler import (
        UserConfigurableProfiler,
    )

    df = ge.read_csv("customer_data.csv")

    # Generate expectations based on a profile of the data
    profiler = UserConfigurableProfiler(
        profile_dataset=df,
        excluded_expectations=None,
        ignored_columns=None,
    )
    expectation_suite = profiler.build_suite()

    # View suggested expectations
    print(expectation_suite.expectations)

This capability accelerates implementation by learning from your data’s actual patterns.

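For time-series or statistical data, Great Expectations can serve as a building block for anomaly detection, typically by pairing its statistical expectations with thresholds derived from historical runs: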

- Distribution-Based Expectations: Validate that data follows expected distributions

- Moving Window Validations: Compare current data to recent historical patterns

- Seasonality Awareness: Account for expected temporal variations

- Statistical Significance Tests: Determine if changes are meaningful

These capabilities extend validation beyond simple rules to complex statistical patterns.
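The moving-window idea can be sketched in a few lines: compare today's metric with the mean and standard deviation of the most recent runs. This is a plain-Python illustration with hypothetical names (`within_moving_window`, `window`, `max_z`); it ignores seasonality and is only meant to show the shape of the check.

```python
import statistics
from typing import List


def within_moving_window(history: List[float], current: float,
                         window: int = 7, max_z: float = 3.0) -> bool:
    """Return True if `current` is within max_z standard deviations of recent runs."""
    recent = history[-window:]
    if len(recent) < 2:
        return True  # not enough history to judge
    mean = statistics.fmean(recent)
    stdev = statistics.stdev(recent)
    if stdev == 0:
        return current == mean
    return abs(current - mean) / stdev <= max_z


daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
normal_day = within_moving_window(daily_row_counts, 1003)
suspicious_day = within_moving_window(daily_row_counts, 400)
```

The bounds computed from the window can also be passed as `min_value` and `max_value` to a range expectation, so the check itself stays declarative.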

Great Expectations can integrate with metadata repositories and data catalogs:

- Record validation results as metadata

- Link expectations to business glossary terms

- Capture data quality scores in data catalogs

- Track quality metrics over time

This integration enhances data governance and discovery processes.
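What gets sent to a catalog is usually a compact summary rather than the full result. The sketch below builds such a record as JSON; the field names and the `to_catalog_record` helper are assumptions for illustration, since each catalog defines its own metadata schema.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


def to_catalog_record(asset: str, results: List[Dict[str, Any]],
                      run_time: datetime) -> str:
    """Summarize validation results as a JSON metadata record."""
    passed = sum(1 for r in results if r["success"])
    record = {
        "asset": asset,
        "validated_at": run_time.isoformat(),
        "checks_total": len(results),
        "checks_passed": passed,
        # Share of passing checks; None when nothing was validated
        "quality_score": round(passed / len(results), 3) if results else None,
    }
    return json.dumps(record, sort_keys=True)


record_json = to_catalog_record(
    "warehouse.customers",
    [{"success": True}, {"success": True}, {"success": False}],
    datetime(2024, 1, 5, tzinfo=timezone.utc),
)
```

Storing one such record per run is also what makes it possible to track quality metrics over time.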

As Great Expectations continues to evolve, several emerging trends are worth noting:

Moving beyond point-in-time validation to continuous monitoring:

- Real-time quality metrics and dashboards

- Anomaly detection across the entire data lifecycle

- Correlation of data quality with business impacts

- Predictive quality monitoring to anticipate issues

This observability approach treats data quality as an operational concern rather than just a development checkpoint.

Machine learning is enhancing validation capabilities:

- Automatically discovering complex data patterns

- Detecting subtle anomalies that rule-based approaches miss

- Generating expectations based on historical patterns

- Predicting quality issues before they occur

These capabilities will make validation both more powerful and easier to implement.

As data ecosystems become more distributed, quality management must follow:

- Consistent validation across multi-cloud environments

- Local enforcement with centralized governance

- Shared expectations across organizational boundaries

- Cross-system lineage for quality issues

Great Expectations is evolving to address these distributed data challenges.

Organizations that get the most value from Great Expectations follow these best practices:

Connect validation directly to business outcomes:

- Identify the most business-critical data assets

- Determine what “quality” means for each use case

- Prioritize validation based on business impact

- Measure and communicate quality improvements in business terms

This approach ensures validation delivers tangible value.

Technology alone doesn’t solve quality challenges:

- Define clear data quality ownership

- Establish processes for handling quality issues

- Create feedback loops between data producers and consumers

- Include quality metrics in team objectives

- Celebrate quality improvements

Cultural change is often more challenging than technical implementation.

Balance automation with human judgment:

- Automate routine validation and documentation

- Reserve human review for complex quality decisions

- Create clear escalation paths for quality issues

- Document the “why” behind expectations

- Regularly review and refine expectations

This balance ensures efficiency without losing critical context.

Data quality needs change over time:

- Version control your expectations

- Implement expectation review processes

- Plan for graceful handling of schema evolution

- Build flexibility into threshold settings

- Create processes for adding new expectations

This evolutionary approach ensures validation remains relevant as data changes.

Great Expectations represents a significant advancement in how organizations approach data quality. By bringing software engineering best practices to data validation, it provides a structured, automated, and documentable approach to ensuring data reliability.

The framework’s power lies not just in its technical capabilities but in how it changes the conversation around data quality. By making expectations explicit, validation automated, and documentation comprehensive, Great Expectations helps close the trust gap that plagues many data initiatives.

Organizations that successfully implement Great Expectations gain several key advantages:

- Increased Trust: Teams can confidently use data for critical decisions

- Reduced Toil: Automated validation eliminates repetitive quality checks

- Better Collaboration: Shared documentation improves communication about data

- Faster Time-to-Insight: Less time spent cleaning data means more time generating value

- Reduced Risk: Catching quality issues early prevents downstream impacts

As data continues to grow in both volume and strategic importance, frameworks like Great Expectations will become essential infrastructure for data-driven organizations. Whether you’re building a new data platform or improving an existing one, Great Expectations provides the tools needed to ensure your data is not just big, but reliably good.


Where should I use Great Expectations?

**[Great Expectations](https://greatexpectations.io/)** is a **data quality and validation tool** that helps you ensure your data is clean, correct, and trustworthy at every step of your pipeline.

Let’s look at **where and how you’d use it in real life**, especially as a Data Engineer working with tools like **Snowflake, dbt, Airflow, and Databricks**.

---

## ✅ What Does Great Expectations Do?

It allows you to define “**expectations**” like:

- Column `user_id` **should never be null**
- Column `signup_date` **should be a date**
- Column `revenue` **should be non-negative**
- You should have **exactly 12 unique months** in a table

And then… it **automatically tests** your data for you 📊

---

## 🔧 WHERE You Should Use It (With Real-Life Examples)

---

### 1️⃣ **During Ingestion (Raw Data Validation)**

**Example: Pulling data from a CRM or billing system into S3/Snowflake**

✅ **Use GE to check:**

- Are all required fields present?
- Are timestamps in the expected format?
- Did we receive enough rows today?

🛠 Use GE in a **Glue Job, Databricks Notebook**, or **Airflow DAG** right after ingestion.
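To show what these three ingestion checks amount to, here is a small plain-Python sketch that runs them against CSV text. The column names, the `%Y-%m-%d` timestamp format, and the `check_ingested_csv` helper are assumptions for illustration; with GE you would express the same rules as expectations instead.

```python
import csv
import io
from datetime import datetime
from typing import List

REQUIRED_COLUMNS = {"user_id", "signup_date", "revenue"}  # assumed schema


def check_ingested_csv(text: str, min_rows: int) -> List[str]:
    """Return a list of problems found in the raw file (empty list means OK)."""
    reader = csv.DictReader(io.StringIO(text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        return [f"missing columns: {sorted(missing)}"]

    problems = []
    rows = list(reader)
    if len(rows) < min_rows:
        problems.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for number, row in enumerate(rows, start=1):
        value = row["signup_date"] or ""
        try:
            datetime.strptime(value, "%Y-%m-%d")
        except ValueError:
            problems.append(f"row {number}: bad signup_date {value!r}")
    return problems


raw = "user_id,signup_date,revenue\n1,2024-01-05,10.0\n2,05/01/2024,12.5\n"
problems = check_ingested_csv(raw, min_rows=3)
```

Returning every problem at once, rather than stopping at the first, makes the failure message far more useful to whoever owns the upstream system.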

---

### 2️⃣ **Before Loading into Data Warehouse or Lakehouse**

**Example: You receive CSVs or JSON files into S3 that go into Snowflake.**

✅ Use GE to **test the files** before loading:

- File isn’t empty
- Columns match expected schema
- Values are within expected ranges

📦 Helps **catch bad files** before they pollute your DWH.

---

### 3️⃣ **After Transformation with dbt or Spark**

**Example: You build reporting tables using dbt in Snowflake.**

✅ Add GE to validate:

- No NULLs in `primary_key`
- Unique values in dimension tables
- Sum of revenue in marts matches staging layer

🛠 You can use **GE + dbt together**, for example by running a **GE validation** as the orchestrator task that follows `dbt run` (dbt hooks themselves only execute SQL).
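The three post-transformation checks can be sketched in plain Python as follows. The table shapes, column names, one-cent tolerance, and the `check_mart` helper are illustrative assumptions, not part of GE or dbt.

```python
import math
from typing import Any, Dict, List


def check_mart(staging: List[Dict[str, Any]], mart: List[Dict[str, Any]],
               key: str, amount: str) -> Dict[str, bool]:
    """Check key integrity in the mart and reconcile totals with staging."""
    keys = [row[key] for row in mart]
    staging_total = math.fsum(row[amount] for row in staging)
    mart_total = math.fsum(row[amount] for row in mart)
    return {
        "key_not_null": all(k is not None for k in keys),
        "key_unique": len(keys) == len(set(keys)),
        # Allow one cent of rounding difference between the layers
        "totals_match": math.isclose(staging_total, mart_total, abs_tol=0.01),
    }


staging_orders = [
    {"customer_id": 1, "revenue": 10.0},
    {"customer_id": 1, "revenue": 5.5},
    {"customer_id": 2, "revenue": 7.25},
]
customer_mart = [
    {"customer_id": 1, "revenue": 15.5},
    {"customer_id": 2, "revenue": 7.25},
]
checks = check_mart(staging_orders, customer_mart, key="customer_id", amount="revenue")
```

The reconciliation check is the one that catches silent bugs such as a join that drops or duplicates rows.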

---

### 4️⃣ **As a Scheduled Monitor (Data Freshness + Health Checks)**

**Example: Daily validation of pipeline output in production**

✅ Create scheduled **daily GE runs** to:

- Validate freshness (e.g., latest date is today)
- Ensure row count doesn’t drop 90% unexpectedly
- Alert if KPIs drift

📬 GE can email or Slack you alerts when something fails.
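The freshness and volume checks above reduce to two small comparisons. This is a plain-Python sketch with hypothetical helper names (`is_fresh`, `volume_ok`); the 90% threshold mirrors the example in the list and should be tuned to your data.

```python
from datetime import date, timedelta


def is_fresh(latest: date, today: date, max_lag_days: int = 0) -> bool:
    """True if the newest record is no older than max_lag_days."""
    return (today - latest) <= timedelta(days=max_lag_days)


def volume_ok(today_rows: int, previous_rows: int, max_drop: float = 0.9) -> bool:
    """True unless the row count fell by max_drop (90% by default) or more."""
    if previous_rows == 0:
        return True  # nothing to compare against
    drop = (previous_rows - today_rows) / previous_rows
    return drop < max_drop


fresh = is_fresh(latest=date(2024, 3, 1), today=date(2024, 3, 1))
stale = is_fresh(latest=date(2024, 2, 27), today=date(2024, 3, 1))
healthy_volume = volume_ok(today_rows=9_500, previous_rows=10_000)
collapsed_volume = volume_ok(today_rows=800, previous_rows=10_000)
```

Passing `today` in explicitly, instead of calling `date.today()` inside the function, keeps the check easy to test and to replay for past dates.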

---

### 5️⃣ **During CI/CD (Testing Models Before Deployment)**

**Example: You build a new model in dbt and want to verify it before merging to production.**

✅ Run **Great Expectations in your CI pipeline**:

- Validate test data
- Check that outputs match business logic
- Prevent bad code from going live

---

## 🧠 Summary – When to Use Great Expectations

| Stage | Use GE to… | Example |
|-------|------------|---------|
| 🔄 Ingestion | Validate raw file shape, types, and nulls | Check S3 CSVs or API results |
| 🏗 Transformation | Validate dbt or Spark output | Enforce primary keys, totals |
| 📅 Monitoring | Daily checks on prod data | Alert on missing dates or low volume |
| 🚦 Deployment | CI/CD validation for models | Block bad data before merge |