Apache NiFi: Revolutionizing Data Flow Automation for the Modern Enterprise

In today’s data-driven landscape, organizations face an increasing challenge: how to reliably collect, process, and distribute massive volumes of data across diverse systems and environments. As data volumes explode and sources multiply, traditional integration approaches struggle to keep pace with the complexity, scale, and security requirements of modern data ecosystems. This is where Apache NiFi emerges as a game-changing solution.

Apache NiFi is a visual platform for automating and managing the movement of data between systems. Originally developed by the National Security Agency (NSA) and donated to the Apache Software Foundation in 2014, NiFi combines enterprise-grade capabilities with open-source development to address the problem of data logistics.

This article explores how Apache NiFi is transforming data flow management across industries, its core architecture and capabilities, implementation strategies, and real-world applications that can help your organization build more reliable, scalable, and secure data pipelines.

Before diving into NiFi’s capabilities, it’s worth understanding the fundamental challenges in modern data flow management. They fall into three groups: scale and complexity, reliability and governance, and adaptability.

Traditional data integration faces mounting challenges:

- Exploding Data Volume: From gigabytes to petabytes across thousands of sources

- Format Diversity: Structured, semi-structured, and unstructured data

- Protocol Proliferation: Different systems with varied communication methods

- Edge Processing: Need for intelligence at data collection points

- Real-Time Requirements: Demand for immediate data processing and delivery

These factors create significant barriers to effective data integration.

As data becomes more critical to business operations, reliability concerns grow:

- Data Provenance: Tracking data origin and transformation history

- Flow Monitoring: Visibility into data movement and processing

- Error Handling: Graceful management of failures and exceptions

- Security Compliance: Meeting stringent data protection requirements

- Auditability: Comprehensive logging of all data access and modification

These governance requirements often exceed the capabilities of traditional tools.

Modern data environments demand unprecedented adaptability:

- Dynamic Scaling: Adjusting to fluctuating data volumes

- Deployment Flexibility: Running across diverse infrastructure

- Evolution Support: Adapting to changing requirements

- Technology Diversity: Connecting both legacy and cutting-edge systems

- Cross-Environment Flow: Spanning on-premises and multiple clouds

This need for adaptability pushes traditional integration tools beyond their limits.

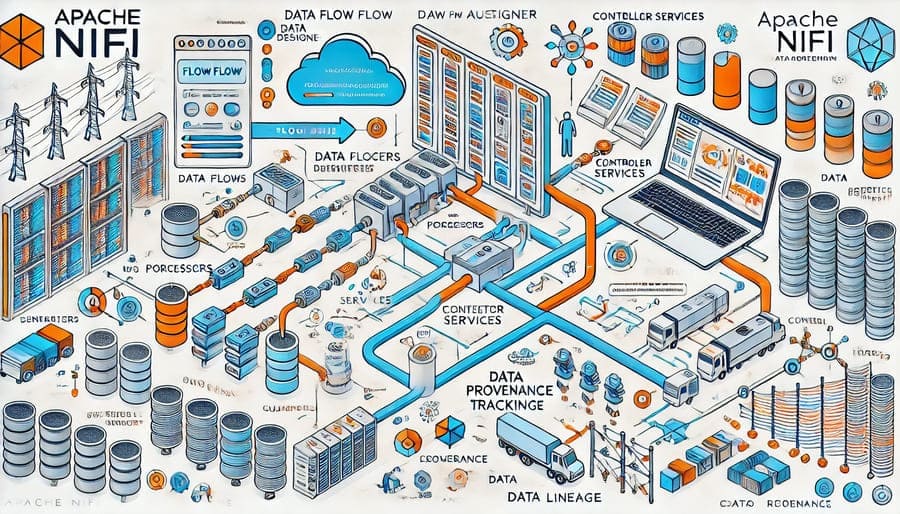

Apache NiFi is a powerful, enterprise-grade data flow automation platform designed to collect, route, transform, and process data from any source to any destination. Its fundamental approach is based on Flow-Based Programming principles, representing data flows as networks of processors connected by queues.

NiFi is built around several key principles:

- Visual Flow Management: Drag-and-drop interface for designing complex data flows

- Data Provenance: Comprehensive tracking of data lineage and transformation

- Configurable Components: Flexible processors for varied data operations

- Extensible Architecture: Ability to create custom components

- Reliability-First Design: Built to handle failure gracefully

- Security-Embedded: Authentication, authorization, and encryption throughout

These principles create a platform uniquely suited for enterprise data flow management.

NiFi’s architecture is designed for reliability and scalability. Its main building blocks are the flow design interface, processors, FlowFiles, connections, and controller services.

At the heart of NiFi is its intuitive, browser-based flow design interface:

    +----------------------------------------------------------+
    |                                                          |
    |  +---------+     +---------------+     +--------------+  |
    |  | GetFile |---->| SplitJson     |---->| PublishKafka |  |
    |  +---------+     +---------------+     +--------------+  |
    |                                                          |
    |  +---------+     +---------------+     +--------------+  |
    |  | GetSFTP |---->| ConvertRecord |---->| PutHDFS      |  |
    |  +---------+     +---------------+     +--------------+  |
    |                                                          |
    +----------------------------------------------------------+

Apache NiFi Flow Designer Interface

This interface allows users to:

- Visually design complex data flows

- Configure processors through property dialogs

- Monitor flow performance in real-time

- Troubleshoot issues with integrated tools

- Manage controller services and reporting tasks

NiFi’s processing model centers on processors—modular components that perform specific actions:

- Source Processors: Fetch data from external systems (GetFile, InvokeHTTP, ConsumeKafka)

- Transformation Processors: Modify data content (ConvertRecord, ExecuteScript, JoltTransformJSON)

- Routing Processors: Direct data based on content or attributes (RouteOnAttribute, RouteOnContent)

- Destination Processors: Send data to external systems (PutFile, PutHDFS, PublishKafka)

- Mediation Processors: Handle data flow mechanics (MergeContent, SplitContent)

NiFi comes with 300+ built-in processors, covering a vast range of use cases.

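All data in NiFi is represented as FlowFiles, objects made up of two parts:

- Content: The actual data payload

- Attributes: Metadata key-value pairs describing the data

Lineage and processing history are not stored inside the FlowFile itself. NiFi records them separately as provenance events keyed by the FlowFile’s UUID.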

This unified model provides consistency and traceability throughout the data flow.
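The content-plus-attributes shape of a FlowFile can be sketched in a few lines of Python. This is only an illustrative model, not NiFi's API: the `FlowFile` class, `with_attribute`, and `new_flowfile` are names invented here, and the only assumption carried over from NiFi is that FlowFiles are treated as immutable, so every change yields a new object.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
import uuid


@dataclass(frozen=True)
class FlowFile:
    """Toy stand-in for a NiFi FlowFile: a payload plus read-only attributes."""
    content: bytes
    attributes: MappingProxyType = field(
        default_factory=lambda: MappingProxyType({})
    )

    def with_attribute(self, key: str, value: str) -> "FlowFile":
        # Build a new FlowFile instead of mutating this one.
        merged = {**self.attributes, key: value}
        return FlowFile(self.content, MappingProxyType(merged))


def new_flowfile(content: bytes, filename: str) -> FlowFile:
    # Hypothetical helper: assigns the identifying attributes up front.
    attrs = {"uuid": str(uuid.uuid4()), "filename": filename}
    return FlowFile(content, MappingProxyType(attrs))


original = new_flowfile(b'{"id": 1}', "order.json")
tagged = original.with_attribute("mime.type", "application/json")
```

Here `tagged` carries the extra attribute while `original` is left untouched, which is the property that lets each processing step be tracked independently.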

Processors are linked by connections that act as queues:

- Back Pressure Management: Limiting upstream flow when overloaded

- Prioritization: Processing higher-priority data first

- Load Balancing: Distributing queued FlowFiles across the nodes of a cluster

- Data Expiration: Setting time-based limits on queued data

- Flow Monitoring: Visualizing queue status and size

These intelligent queues provide flow control and resilience.
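To make back pressure, prioritization, and expiration concrete, here is a minimal sketch of a connection as a bounded priority queue. It is a simplification under stated assumptions: the `Connection` class and its parameter names are hypothetical, and real NiFi stops scheduling the upstream processor once a threshold is reached rather than rejecting individual items.

```python
import heapq
import itertools


class Connection:
    """Toy model of a NiFi connection: a bounded, prioritized queue."""

    def __init__(self, back_pressure_threshold: int, expiration_seconds: float):
        self.back_pressure_threshold = back_pressure_threshold
        self.expiration_seconds = expiration_seconds
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps FIFO order

    def is_full(self) -> bool:
        # While this is True the upstream side should stop producing.
        return len(self._heap) >= self.back_pressure_threshold

    def offer(self, item: str, priority: int, now: float) -> bool:
        if self.is_full():
            return False  # back pressure: the caller keeps the data
        heapq.heappush(self._heap, (priority, next(self._counter), now, item))
        return True

    def poll(self, now: float):
        # Drop entries that have aged past the expiration window.
        while self._heap:
            _, _, enqueued_at, item = heapq.heappop(self._heap)
            if now - enqueued_at <= self.expiration_seconds:
                return item
        return None


conn = Connection(back_pressure_threshold=2, expiration_seconds=60)
accepted = [
    conn.offer("bulk", priority=5, now=0.0),
    conn.offer("urgent", priority=1, now=1.0),
    conn.offer("overflow", priority=1, now=2.0),
]
first = conn.poll(now=10.0)
second = conn.poll(now=100.0)
```

The third offer is refused because the queue is at its threshold, the lower priority number is served first, and the remaining entry has expired by the time of the second poll.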

NiFi provides shared services accessible across the flow:

- Database Connection Pools: Shared database access

- SSL Context Services: Centralized security configuration

- Distributed Cache Services: Shared state management

- Record Readers/Writers: Common data format handling

- Reporting Tasks: System-wide monitoring and metrics (a separate component type, configured alongside controller services)

These shared services promote consistency and efficiency.

NiFi provides several core capabilities that make it particularly valuable for enterprise data flow management:

NiFi’s web-based interface transforms flow creation:

- Drag-and-Drop Design: Intuitive flow creation without coding

- Real-Time Monitoring: Visual indication of flow performance

- Versioned Flows: Change tracking and version control

- Template Support: Reusable flow components (NiFi 1.x; NiFi 2.x replaces templates with exported flow definitions and NiFi Registry)

- Process Group Nesting: Hierarchical organization of flows

This visual approach makes complex flows more accessible and manageable:

    +-----------------------------------------------------------+
    |                                                           |
    |  +-----------------------+     +-----------------------+  |
    |  |    Ingest Process     |     |    Transformation     |  |
    |  |         Group         |     |     Process Group     |  |
    |  |                       |     |                       |  |
    |  |  +-----------------+  |     |  +-----------------+  |  |
    |  |  | GetSFTP         |  |     |  | ValidateRecord  |  |  |
    |  |  +-----------------+  |     |  +-----------------+  |  |
    |  |           |           |     |           |           |  |
    |  |           v           |     |           v           |  |
    |  |  +-----------------+  |     |  +-----------------+  |  |
    |  |  | ValidateXML     |  |     |  | NormalizeFields |  |  |
    |  |  +-----------------+  |     |  +-----------------+  |  |
    |  +-----------------------+     +-----------------------+  |
    |              |                             |              |
    |              v                             v              |
    |  +-----------------------+     +-----------------------+  |
    |  |     Data Quality      |     |       Delivery        |  |
    |  |     Process Group     |     |     Process Group     |  |
    |  +-----------------------+     +-----------------------+  |
    |                                                           |
    +-----------------------------------------------------------+

NiFi Process Group Hierarchy Example

NiFi tracks detailed lineage for all data:

- Complete History: Tracking of all processing events

- Visualization: Graphical view of data lineage

- Replay Capability: Reprocessing data from any point

- Forensic Analysis: Investigation of data issues

- Compliance Support: Auditable record of data handling

This provenance creates unmatched transparency and traceability:

DATA PROVENANCE EXAMPLE:

FlowFile ID: 1234-5678-90ab-cdef

Origin: GetSFTP @ 2023-05-15 10:30:22

→ Attributes Added: filename, path, sftp.remote.host

→ Content Size: 1.2 MB

Event 1: SplitXML @ 2023-05-15 10:30:24

→ Split into 28 child FlowFiles

→ Attributes Added: fragment.index, fragment.count

Event 2: ValidateXML @ 2023-05-15 10:30:25

→ Attributes Added: validation.result, schema.version

→ Content Size unchanged

Event 3: TransformXML @ 2023-05-15 10:30:26

→ Attributes Added: transform.result

→ Content Size: 980 KB

Event 4: PublishKafka @ 2023-05-15 10:30:27

→ Attributes Added: kafka.topic, kafka.key

→ Transferred to external system
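A lineage trace like the one above amounts to following parent links through a log of events. The sketch below models that idea under simplifying assumptions: the `ProvenanceEvent` class and `lineage` function are invented for illustration, the IDs are made up, and only the event type names (`RECEIVE`, `FORK`, `CONTENT_MODIFIED`, `SEND`) are taken from NiFi's provenance event types.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ProvenanceEvent:
    event_type: str                  # e.g. "RECEIVE", "FORK", "SEND"
    component: str                   # processor that emitted the event
    flowfile_id: str
    parent_id: Optional[str] = None  # set when this FlowFile was split off


def lineage(events, flowfile_id):
    """Walk parent links back to the origin, returning events oldest-first."""
    by_id = {}
    for event in events:
        by_id.setdefault(event.flowfile_id, []).append(event)
    chain = []
    current = flowfile_id
    while current is not None:
        own = by_id.get(current, [])
        chain = own + chain
        parents = [e.parent_id for e in own if e.parent_id is not None]
        current = parents[0] if parents else None
    return chain


events = [
    ProvenanceEvent("RECEIVE", "GetSFTP", "parent-1"),
    ProvenanceEvent("FORK", "SplitXml", "child-7", parent_id="parent-1"),
    ProvenanceEvent("CONTENT_MODIFIED", "TransformXml", "child-7"),
    ProvenanceEvent("SEND", "PublishKafka", "child-7"),
]
path = [(e.component, e.event_type) for e in lineage(events, "child-7")]
```

Asking for the lineage of the child FlowFile returns its own events preceded by the event that received its parent, which is the kind of end-to-end view the provenance UI presents graphically.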

NiFi connects with virtually any data source or destination:

- File Systems: Local, HDFS, S3, Azure Blob, Google Cloud Storage

- Messaging Systems: Kafka, JMS, MQTT, AMQP, RabbitMQ

- Databases: RDBMS, NoSQL, NewSQL, Time-Series databases

- REST APIs: Both consuming and providing web services

- Big Data Systems: Hadoop, Spark, Hive, HBase

- Cloud Services: AWS, Azure, Google Cloud Platform services

- IoT Protocols: MQTT, CoAP, OPC-UA

This connectivity creates a universal data integration fabric:

CONNECTIVITY EXAMPLES:

SOURCE SYSTEMS:

- SFTP servers (GetSFTP)

- HTTP/HTTPS APIs (InvokeHTTP)

- Kafka topics (ConsumeKafka)

- Databases (ExecuteSQL, QueryDatabaseTable)

- Cloud storage (ListS3, FetchAzureBlobStorage)

- Message queues (ConsumeJMS, ConsumeMQTT)

- Directory monitoring (ListFile, GetFile)

- Twitter feeds (GetTwitter)

- UDP/TCP sockets (ListenUDP, ListenTCP)

DESTINATION SYSTEMS:

- HDFS clusters (PutHDFS)

- Elasticsearch (PutElasticsearchHttp)

- Kafka topics (PublishKafka)

- Email (PutEmail)

- Slack (PutSlack)

- Databases (PutDatabaseRecord)

- Cloud storage (PutS3Object, PutAzureBlobStorage)

- MQTT brokers (PublishMQTT)

NiFi offers rich options for manipulating data:

- Content Transformation: Conversion between formats (JSON, XML, CSV, Avro)

- Attribute Manipulation: Extraction and modification of metadata

- Routing Logic: Directing data based on content or attributes

- Aggregation/Splitting: Combining or separating data streams

- Scripting Support: Custom logic in Python, Groovy, JavaScript

- Record-Oriented Processing: Schema-aware data handling

These transformation capabilities handle diverse processing requirements:

TRANSFORMATION EXAMPLES:

Content Transformation:

- ConvertRecord: Convert between JSON, CSV, Avro formats

- JoltTransformJSON: Apply JOLT specifications to JSON

- TransformXml: Apply XSLT stylesheets to XML

- ExecuteScript: Custom transformations in Python/Groovy/JS

Attribute Manipulation:

- UpdateAttribute: Set attributes based on rules

- ExtractText: Extract data using regular expressions

- AttributesToJSON: Convert attributes to JSON

- EvaluateJsonPath: Extract JSON values to attributes

Content Routing:

- RouteOnAttribute: Direct based on attribute values

- RouteOnContent: Direct based on content patterns

- ControlRate: Throttle flow by data rate, FlowFile count, or an attribute value

- DetectDuplicate: Identify repeated content
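Attribute-based routing boils down to evaluating named predicates against a FlowFile's attributes. The following sketch illustrates the idea only: `route_on_attribute` and the rule names are hypothetical, and it returns the first match, whereas the real RouteOnAttribute processor can also route a FlowFile to every matching relationship depending on its routing strategy.

```python
def route_on_attribute(attributes: dict, rules: dict) -> str:
    """Return the first relationship whose predicate matches, else 'unmatched'."""
    for relationship, predicate in rules.items():
        if predicate(attributes):
            return relationship
    return "unmatched"


# Rules are checked in insertion order; attribute values are strings, as in NiFi.
rules = {
    "invoices": lambda a: a.get("filename", "").startswith("INVOICE"),
    "large": lambda a: int(a.get("fileSize", "0")) > 1024,
}

r1 = route_on_attribute({"filename": "INVOICE_001.csv", "fileSize": "2048"}, rules)
r2 = route_on_attribute({"filename": "report.csv", "fileSize": "4096"}, rules)
r3 = route_on_attribute({"filename": "note.txt", "fileSize": "10"}, rules)
```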

NiFi is built for mission-critical data flows:

- Guaranteed Delivery: Data persistence until successful processing

- Back-Pressure Mechanisms: Preventing system overload

- Data Buffering: Handling source/destination speed mismatches

- Graceful Failure Handling: Automatic retry and error routing

- Zero-Loss Deployment: Updates without data interruption

- High Availability: Clustered operation for reliability

These reliability features are designed so that data survives restarts and downstream outages:

RELIABILITY MECHANISMS:

Content Repository:

- Persistent storage of all FlowFile content

- Configurable storage locations and retention

- Immutable content claims, so a crash cannot leave half-overwritten data

FlowFile Repository:

- Write-ahead log of the attributes and queue position of every in-flight FlowFile

- Transaction support for atomic operations

- Checkpointing for crash recovery

Provenance Repository:

- Complete history of all data processing

- Configurable storage and retention policies

- Searchable audit trail for all data

Clustering:

- Multi-node operation with automatic failover

- Primary node election and cluster coordination

- Zero-master architecture for resilience
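The recovery behavior described for the repositories rests on a general technique: record each state change durably before acting on it, then replay the record after a crash. The sketch below shows that technique in miniature. It is not NiFi's on-disk format; the `FlowFileJournal` class, the JSON-lines layout, and the queue names are all assumptions made for illustration.

```python
import json
import os
import tempfile


class FlowFileJournal:
    """Append-only journal: every state change is logged before it is applied."""

    def __init__(self, path: str):
        self.path = path

    def record(self, flowfile_id: str, queue: str) -> None:
        # An empty queue name marks the FlowFile as finished.
        with open(self.path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"id": flowfile_id, "queue": queue}) + "\n")
            log.flush()
            os.fsync(log.fileno())  # force to disk before acknowledging

    def recover(self) -> dict:
        """Replay the journal to rebuild which FlowFile sits in which queue."""
        state = {}
        if not os.path.exists(self.path):
            return state
        with open(self.path, encoding="utf-8") as log:
            for line in log:
                entry = json.loads(line)
                if entry["queue"]:
                    state[entry["id"]] = entry["queue"]
                else:
                    state.pop(entry["id"], None)
        return state


with tempfile.TemporaryDirectory() as tmp:
    journal_path = os.path.join(tmp, "flowfile.journal")
    journal = FlowFileJournal(journal_path)
    journal.record("ff-1", "to-validate")
    journal.record("ff-2", "to-validate")
    journal.record("ff-1", "to-publish")
    journal.record("ff-2", "")  # ff-2 was delivered and dropped
    # Simulate a restart: a fresh object sees only what reached disk.
    recovered = FlowFileJournal(journal_path).recover()
```

After the simulated restart, only the FlowFile that was still in flight is restored, and it reappears in the last queue it was recorded in.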

NiFi scales from edge devices to enterprise clusters:

- Horizontal Scaling: Adding nodes to increase capacity

- Vertical Scaling: Utilizing multiple cores efficiently

- MiNiFi: Lightweight edge deployment for IoT

- Site-to-Site Protocol: Efficient, secure transfer between NiFi and MiNiFi instances

- Scheduling Controls: Per-processor concurrent tasks and run schedules

This scalability supports diverse deployment scenarios:

DEPLOYMENT SCENARIOS:

Edge Deployment:

- MiNiFi Java or C++ agents

- Lightweight data collection and filtering

- Secure transmission to central NiFi instance

Departmental NiFi:

- Single server deployment

- Handling moderate data volumes

- Supporting specific business unit needs

Enterprise Cluster:

- Multi-node NiFi deployment

- Load balancing across servers

- High availability configuration

- Handling terabytes of daily data flow

Hybrid Architecture:

- Edge collection with MiNiFi

- Departmental processing with NiFi clusters

- Cross-environment data movement

- Cloud integration for elastic processing

NiFi implements security throughout its architecture:

- Multi-Tenant Authorization: Fine-grained access control

- Multiple Authentication Methods: LDAP, Kerberos, certificates

- Data Encryption: Both at rest and in transit

- Sensitive Property Encryption: Protection of configuration values

- Security Automation: Secure data handling within flows

These security capabilities meet stringent enterprise requirements:

SECURITY FRAMEWORK:

Authentication Options:

- Username/Password with LDAP integration

- Kerberos Single Sign-On

- Certificate-based (X.509)

- OpenID Connect

- Custom authentication providers

Authorization Model:

- Policy-based access control

- Resource-level permissions

- User and group-based policies

- Access to specific processors/process groups

- Fine-grained component permissions

Secure Communications:

- TLS encryption for web UI

- TLS for site-to-site communications

- Encrypted connection to external services

- Automatic certificate management

Data Protection:

- Encrypted flowfile repositories

- Encrypted content repositories

- Secure sensitive properties

- Protected provenance information
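The policy-based authorization model can be illustrated with a small lookup that checks a component and then falls back to its parent process groups when no policy is defined on the component itself. This is a sketch under assumptions: the `Policy` class, `is_authorized`, and the resource paths are hypothetical and do not reproduce NiFi's actual policy store or resource identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    resource: str          # hypothetical path, e.g. "/process-groups/ingest"
    action: str            # "read" or "write"
    identities: frozenset  # users and groups granted the action


def is_authorized(policies, user, groups, resource, action, parents):
    """Check the resource, then walk up its ancestors until a policy is found."""
    identities = {user, *groups}
    current = resource
    while current is not None:
        matching = [
            p for p in policies if p.resource == current and p.action == action
        ]
        if matching:
            return any(identities & p.identities for p in matching)
        current = parents.get(current)
    return False  # no policy anywhere in the chain: deny by default


parents = {
    "/processors/get-sftp": "/process-groups/ingest",
    "/process-groups/ingest": "/process-groups/root",
}
policies = [
    Policy("/process-groups/root", "read", frozenset({"ops-team"})),
    Policy("/process-groups/ingest", "write", frozenset({"alice"})),
]

alice_write = is_authorized(policies, "alice", [], "/processors/get-sftp", "write", parents)
bob_read = is_authorized(policies, "bob", ["ops-team"], "/processors/get-sftp", "read", parents)
bob_write = is_authorized(policies, "bob", ["ops-team"], "/processors/get-sftp", "write", parents)
```

The nearest policy in the hierarchy decides the outcome, so a narrow grant on one process group overrides a broader one higher up.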

Successfully implementing Apache NiFi requires thoughtful planning and execution. NiFi supports several deployment models, from a single node to clusters and edge agents.

For smaller use cases or testing:

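    # Example: basic NiFi startup (run from the NiFi installation directory)
    bin/nifi.sh start

    # Heap size is read from conf/bootstrap.conf, not from an environment variable.
    # In a default install, edit these two entries there, then restart:
    #   java.arg.2=-Xms4g
    #   java.arg.3=-Xmx8g
    bin/nifi.sh restart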

For production environments requiring scalability and reliability:

    # Example clustered architecture

    +------------------+          +------------------+
    |   NiFi Node 1    |          |   NiFi Node 2    |
    |  - Processors    |          |  - Processors    |
    |  - Repositories  |          |  - Repositories  |
    |  - Flow State    |          |  - Flow State    |
    +------------------+          +------------------+
             ^                             ^
             |                             |
             v                             v
    +------------------------------------------------+
    |               ZooKeeper Ensemble               |
    |             (Cluster Coordination)             |
    +------------------------------------------------+
             ^                             ^
             |                             |
             v                             v
    +------------------+          +------------------+
    |   NiFi Node 3    |          |   NiFi Node 4    |
    |  - Processors    |          |  - Processors    |
    |  - Repositories  |          |  - Repositories  |
    |  - Flow State    |          |  - Flow State    |
    +------------------+          +------------------+

For IoT and distributed scenarios:

    +-------------+   +-------------+   +-------------+
    | MiNiFi Edge |   | MiNiFi Edge |   | MiNiFi Edge |
    |  Device 1   |   |  Device 2   |   |  Device 3   |
    +-------------+   +-------------+   +-------------+
           |                 |                 |
           v                 v                 v
    +-------------------------------------------------+
    |                NiFi Edge Gateway                |
    |           (Aggregation and Filtering)           |
    +-------------------------------------------------+
                             |
                             v
    +-------------------------------------------------+
    |              NiFi Central Cluster               |
    |          (Processing and Distribution)          |
    +-------------------------------------------------+
           |                 |                 |
           v                 v                 v
    +-------------+   +-------------+   +-------------+
    |  Data Lake  |   |  Analytics  |   |    Data     |
    |             |   |  Platform   |   |  Warehouse  |
    +-------------+   +-------------+   +-------------+

Most successful NiFi deployments follow a phased approach:

- Discovery Phase

  - Inventory key data flows and requirements

  - Evaluate current integration challenges

  - Define success criteria and metrics

  - Identify initial use case for proof of concept

  - Assess infrastructure needs

- Pilot Implementation

  - Deploy NiFi in a controlled environment

  - Implement initial data flows for selected use case

  - Validate performance and functionality

  - Document patterns and best practices

  - Train initial team members

- Scaled Deployment

  - Expand to additional use cases

  - Implement proper production architecture

  - Develop monitoring and management processes

  - Create reusable templates and components

  - Establish governance and security frameworks

- Operational Integration

  - Integrate with broader IT monitoring systems

  - Implement DevOps practices for flow management

  - Establish continuous improvement processes

  - Create comprehensive documentation

  - Expand training across the organization

This incremental approach balances quick wins with sustainable implementation.

Effective NiFi implementations follow design patterns that promote maintainability and performance:

- Modular Design with Process Groups

  - Organize flows in logical, reusable components

  - Create clear boundaries between functional areas

  - Enable independent testing and deployment

  - Improve flow management and understanding

  - Support team-based development

- Template and Controller Service Standardization

  - Create standard templates for common patterns

  - Centralize configuration through controller services

  - Enforce consistent error handling

  - Standardize monitoring and alerting

  - Implement security patterns consistently

- Performance Optimization

  - Configure appropriate concurrency for processors

  - Implement batch processing where suitable

  - Use back pressure to manage flow dynamics

  - Configure adequate resources for critical components

  - Monitor and tune based on empirical metrics
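As an illustration of the batch-processing pattern, the sketch below groups items into bins that close when either an entry limit or a byte limit would be exceeded. This loosely mirrors the idea behind MergeContent's maximum-entries and maximum-group-size settings, but the `merge_bins` function and its parameters are invented here and the real processor's binning logic is more involved.

```python
def merge_bins(sizes, max_entries, max_bytes):
    """Group item sizes into bins, closing a bin when either limit is reached."""
    bins, current, current_bytes = [], [], 0
    for size in sizes:
        would_overflow = (
            len(current) >= max_entries or current_bytes + size > max_bytes
        )
        if current and would_overflow:
            bins.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        bins.append(current)  # flush the final partial bin
    return bins


bins = merge_bins(
    [400, 400, 400, 900, 100, 100, 100], max_entries=3, max_bytes=1000
)
```

Fewer, larger FlowFiles reduce per-item overhead downstream, at the cost of holding data until a bin fills, so the limits are worth tuning against measured throughput and latency.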

Comprehensive monitoring ensures reliable operation:

- NiFi-Native Monitoring

  - Leverage built-in status history

  - Configure reporting tasks for metrics collection

  - Use bulletins for issue notification

  - Implement data provenance monitoring

  - Utilize component status snapshots

- External Monitoring Integration

  - Connect to enterprise monitoring platforms

  - Implement JMX metrics collection

  - Configure log aggregation and analysis

  - Set up alerting for critical conditions

  - Create operational dashboards

- Management Processes

  - Establish flow change management procedures

  - Implement version control for flows

  - Create backup and recovery processes

  - Define scaling and capacity planning methods

  - Document operational playbooks for common issues

Apache NiFi has been successfully applied across industries to solve diverse data flow challenges:

A global bank implemented NiFi for fraud detection:

- Challenge: Processing millions of transactions in real-time for fraud analysis

- Implementation:

  - Deployed NiFi cluster to ingest transaction streams

  - Implemented real-time enrichment with customer data

  - Created rules-based routing for suspicious transactions

  - Built comprehensive data provenance for compliance

  - Integrated with machine learning fraud detection system

- Results:

  - 70% faster fraud detection compared to previous system

  - Complete transaction lineage for regulatory compliance

  - Ability to process 20,000+ transactions per second

  - Significant reduction in false positives through better data enrichment

A healthcare system used NiFi to unify patient data:

- Challenge: Integrating diverse clinical systems with varying formats and protocols

- Implementation:

  - Deployed NiFi to connect 20+ clinical systems

  - Implemented HL7 and FHIR processing for clinical data

  - Created data normalization flows for consistent formats

  - Built privacy-enhancing transformations for HIPAA compliance

  - Established alerting for critical data delivery failures

- Results:

  - Unified patient view across previously siloed systems

  - Reduced integration development time by 60%

  - Complete audit trail for sensitive patient data

  - Enhanced clinical decision support through timely data integration

A manufacturing company deployed NiFi for industrial IoT:

- Challenge: Collecting and processing data from thousands of factory sensors

- Implementation:

  - Implemented MiNiFi agents at edge locations

  - Created hierarchical data collection architecture

  - Built real-time analytics for predictive maintenance

  - Implemented data quality validation for sensor readings

  - Integrated with cloud-based machine learning platform

- Results:

  - 45% reduction in unplanned equipment downtime

  - Ability to process 100,000+ sensor readings per second

  - Enhanced production quality through real-time monitoring

  - Significant cost savings through predictive maintenance

Beyond basic data flow management, NiFi offers several advanced capabilities:

NiFi provides powerful tools for dynamic flow behavior:

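    # Example Expression Language
    ${filename:startsWith('INVOICE'):and(${fileSize:toNumber():gt(1024)}):and(${now():toNumber():minus(${lastModified:toDate('yyyy-MM-dd'):toNumber()}):gt(172800000)})}

This expression evaluates to true for FlowFiles whose attributes describe:

- Files starting with “INVOICE” (the filename attribute)

- Files larger than 1 KB (the fileSize attribute, in bytes)

- Files older than 2 days (the lastModified attribute, assumed here to hold a yyyy-MM-dd date, compared against the current time in milliseconds; 2 days is 172,800,000 ms)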
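For readers more comfortable with general-purpose code, the three conditions can be restated in plain Python. This is only a reading aid, not how NiFi evaluates Expression Language; the `matches` function is hypothetical, and it assumes the `lastModified` attribute holds a `yyyy-MM-dd` date string as in the expression.

```python
from datetime import datetime, timedelta


def matches(attributes: dict, now: datetime) -> bool:
    """Plain-Python reading of the three chained conditions."""
    starts_with_invoice = attributes["filename"].startswith("INVOICE")
    larger_than_1kb = int(attributes["fileSize"]) > 1024
    modified = datetime.strptime(attributes["lastModified"], "%Y-%m-%d")
    older_than_2_days = now - modified > timedelta(days=2)
    return starts_with_invoice and larger_than_1kb and older_than_2_days


now = datetime(2023, 5, 15, 12, 0, 0)
old_invoice = {
    "filename": "INVOICE_17.pdf", "fileSize": "4096", "lastModified": "2023-05-10",
}
fresh_invoice = {
    "filename": "INVOICE_18.pdf", "fileSize": "4096", "lastModified": "2023-05-14",
}
old_result = matches(old_invoice, now)
fresh_result = matches(fresh_invoice, now)
```

Note that attribute values arrive as strings, which is why both the Expression Language version and this sketch convert them before comparing.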

Record-based processing enables schema-aware operations:

    # Example record processing: one processor configured with two controller services
    QueryRecord
      Record Reader: CSVReader
      Record Writer: JsonRecordSetWriter

    # Avro schema the reader uses to validate and type each record:
    {
      "name": "customer",
      "type": "record",
      "fields": [
        {"name": "id", "type": "string"},
        {"name": "name", "type": "string"},
        {"name": "email", "type": "string"},
        {"name": "signup_date", "type": "string"}
      ]
    }
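The read, filter, write sequence of record processing can be mimicked with the Python standard library. The sketch below is an analogy rather than NiFi code: `csv_to_json` is a hypothetical function, the sample rows are made up, and the "schema" is reduced to a check that the CSV header matches the four field names of the customer record.

```python
import csv
import io
import json

SCHEMA_FIELDS = ["id", "name", "email", "signup_date"]


def csv_to_json(csv_text: str, keep) -> str:
    """Read CSV records, check the header against the schema, filter, write JSON."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != SCHEMA_FIELDS:
        raise ValueError(f"unexpected columns: {reader.fieldnames}")
    records = [row for row in reader if keep(row)]
    return json.dumps(records)


sample = (
    "id,name,email,signup_date\n"
    "1,Ada,ada@example.com,2023-01-04\n"
    "2,Bob,,2023-02-11\n"
)
# Keep only customers that have an email address.
result = json.loads(csv_to_json(sample, keep=lambda r: r["email"] != ""))
```

Because the reader and writer are separate from the filter, swapping the output format means changing only the writer, which is the main appeal of the record-oriented approach.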

NiFi enables sophisticated stateful processing:

- Component-Level State: Persistent storage for processor state

- Distributed State: Consistent state across cluster nodes

- Stateful Processing Patterns: Implementing memory across executions

- Windowed Processing: Time-based or count-based aggregations

- Incremental Processing: Tracking and continuing from previous positions

These capabilities enable complex use cases like:

- Incremental database queries

- Session-based processing

- Change data capture

- Time-windowed analytics

- Deduplication across batches
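Incremental processing, the first use case in the list, depends on remembering a high-water mark between runs. The sketch below shows the pattern under simple assumptions: `IncrementalFetcher` is a hypothetical class, a plain dict stands in for NiFi's component state, and the approach resembles in spirit how a processor such as QueryDatabaseTable tracks a maximum-value column.

```python
class IncrementalFetcher:
    """Remembers the largest value seen so each run returns only new rows."""

    def __init__(self, state: dict, column: str):
        self.state = state    # stands in for a persistent state store
        self.column = column

    def fetch_new(self, rows: list) -> list:
        last_seen = self.state.get(self.column, 0)
        fresh = [r for r in rows if r[self.column] > last_seen]
        if fresh:
            # Advance the high-water mark only after new rows were found.
            self.state[self.column] = max(r[self.column] for r in fresh)
        return fresh


table = [{"id": 1}, {"id": 2}]
state = {}
fetcher = IncrementalFetcher(state, "id")
first_run = fetcher.fetch_new(table)
table.append({"id": 3})
second_run = fetcher.fetch_new(table)
third_run = fetcher.fetch_new(table)
```

Because the mark lives in `state` rather than in the fetcher, a restarted process that reloads the same state resumes where the previous one stopped.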

NiFi can be extended with custom components:

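    // Example NiFi Processor in Java (needs the nifi-api and nifi-utils dependencies)
    import java.util.Collections;
    import java.util.List;
    import java.util.Set;

    import org.apache.nifi.annotation.documentation.CapabilityDescription;
    import org.apache.nifi.annotation.documentation.Tags;
    import org.apache.nifi.components.PropertyDescriptor;
    import org.apache.nifi.flowfile.FlowFile;
    import org.apache.nifi.processor.AbstractProcessor;
    import org.apache.nifi.processor.ProcessContext;
    import org.apache.nifi.processor.ProcessSession;
    import org.apache.nifi.processor.ProcessorInitializationContext;
    import org.apache.nifi.processor.Relationship;
    import org.apache.nifi.processor.exception.ProcessException;
    import org.apache.nifi.processor.util.StandardValidators;

    @Tags({"example", "custom"})
    @CapabilityDescription("Example processor that demonstrates custom development")
    public class MyCustomProcessor extends AbstractProcessor {

        // Configuration property shown in the processor's settings dialog
        public static final PropertyDescriptor MY_PROPERTY = new PropertyDescriptor.Builder()
                .name("My Property")
                .description("Example configuration property")
                .required(true)
                .addValidator(StandardValidators.NON_EMPTY_VALIDATOR)
                .build();

        // Outgoing relationship that downstream connections attach to
        public static final Relationship SUCCESS = new Relationship.Builder()
                .name("success")
                .description("Successfully processed FlowFiles")
                .build();

        @Override
        protected void init(ProcessorInitializationContext context) {
            // Initialization logic
        }

        @Override
        public Set<Relationship> getRelationships() {
            return Collections.singleton(SUCCESS);
        }

        @Override
        protected List<PropertyDescriptor> getSupportedPropertyDescriptors() {
            return Collections.singletonList(MY_PROPERTY);
        }

        @Override
        public void onTrigger(ProcessContext context, ProcessSession session) throws ProcessException {
            // Take the next FlowFile from an incoming queue, if any
            FlowFile flowFile = session.get();
            if (flowFile == null) {
                return;
            }

            // Perform transformation. This placeholder copies the content through
            // unchanged; replace the loop body with custom transformation logic.
            flowFile = session.write(flowFile, (in, out) -> {
                byte[] buffer = new byte[8192];
                int read;
                while ((read = in.read(buffer)) != -1) {
                    out.write(buffer, 0, read);
                }
            });

            // Update attributes
            flowFile = session.putAttribute(flowFile, "processed.timestamp",
                    String.valueOf(System.currentTimeMillis()));

            // Transfer to success
            session.transfer(flowFile, SUCCESS);
        }
    }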

Custom extensions include:

- Processors for specialized data handling

- Controller services for shared functionality

- Reporting tasks for custom monitoring

- Record readers/writers for proprietary formats

- Authorization providers for custom security

As Apache NiFi continues to evolve, several key trends are emerging:

NiFi is increasingly adapting to cloud-native environments:

- Kubernetes Operators: Simplified deployment and management

- Containerized Architecture: Optimized for container platforms

- Cloud Resource Integration: Dynamic scaling with cloud resources

- Service Mesh Compatibility: Integration with modern networking patterns

- Helm Chart Deployment: Standardized cloud-native deployment

These adaptations make NiFi more natural in modern infrastructure.

NiFi is expanding its capabilities for AI/ML workflows:

- ML Model Integration: Direct incorporation of models into flows

- Feature Engineering: Specialized processors for data preparation

- Model Deployment Automation: Streamlining ML operationalization

- Feedback Loop Automation: Capturing prediction results for retraining

- Distributed ML Processing: Coordinated processing for large-scale ML

These capabilities position NiFi as a valuable component in ML pipelines.

MiNiFi continues to evolve for edge use cases:

- Ultra-Lightweight Agents: Minimized footprint for constrained devices

- Edge Analytics: More processing capability at the edge

- Intermittent Connectivity Handling: Robust operation with unreliable networks

- Edge-to-Cloud Patterns: Standard flows for edge-to-cloud architectures

- IoT Protocol Support: Expanded connectivity for industrial and consumer IoT

These enhancements support the growing importance of edge computing.

NiFi is becoming more capable for event-driven systems:

- Event Mesh Integration: Connections with event mesh platforms

- Event Pattern Detection: Identifying complex event patterns

- Event Correlation: Relating events from different sources

- Event Transformation: Converting between event formats

- Event-Driven Flow Triggering: Dynamic flow behavior based on events

These capabilities align NiFi with modern event-driven architectures.

Organizations achieving the greatest success with Apache NiFi follow these best practices:

Successful NiFi implementations require operational governance:

- Define clear ownership for flows and components

- Establish standards for flow design and documentation

- Implement change management processes

- Create testing and validation procedures

- Document reliability and security requirements

This governance ensures sustainable, manageable flows.

NiFi’s capabilities are best leveraged with proper training:

- Provide foundational training for all NiFi developers

- Develop specialized expertise for complex use cases

- Create internal knowledge sharing mechanisms

- Participate in community learning opportunities

- Build centers of excellence for NiFi development

This skills focus maximizes the platform’s value.

Modern flow management benefits from DevOps approaches:

- Implement version control for flow definitions

- Create CI/CD pipelines for flow deployment

- Establish automated testing for flows

- Implement monitoring and alerting

- Practice infrastructure as code for NiFi deployment

These practices improve reliability and agility.

Effective NiFi implementation requires balancing structure and flexibility:

- Create standard templates for common patterns

- Establish reusable controller services

- Define error handling standards

- Allow innovation within controlled boundaries

- Regularly review and incorporate successful patterns

This balance ensures both consistency and adaptability.

In today’s complex data landscape, the ability to reliably collect, process, and distribute data across diverse systems has become a critical business capability. Apache NiFi addresses this need through a comprehensive approach to data flow management that combines visual design, powerful processing, and enterprise-grade reliability and security.

By enabling organizations to create manageable, scalable data flows with full provenance and governance, NiFi transforms how enterprises handle their data logistics challenges. From financial services to healthcare to manufacturing, diverse use cases demonstrate how NiFi’s capabilities can solve complex data integration problems across industries.

The most successful implementations of NiFi recognize that effective data flow management requires both technological capabilities and organizational alignment. By focusing on clear governance, team skills development, DevOps practices, and balanced standardization, these organizations are turning data integration from a technical challenge into a strategic advantage.

As NiFi continues to evolve—embracing cloud-native deployment, AI/ML integration, edge computing, and event-driven architectures—it provides a foundation for increasingly sophisticated data flow automation that can adapt to tomorrow’s integration challenges.
