Confluent Platform: Unleashing the Power of Enterprise Event Streaming

In today’s digital landscape, businesses face an unprecedented volume of data generated across countless systems and applications. The ability to harness this torrent of information in real time has become a critical competitive advantage. Enter Confluent Platform, an enterprise-ready event streaming platform that changes how organizations capture, process, and use data streams at scale. This guide explores how Confluent Platform is reshaping enterprise data architectures and enabling a new generation of real-time applications and analytics.

Before diving into Confluent Platform specifically, it’s essential to understand the paradigm shift that event streaming represents. Traditional data architectures relied heavily on batch processing and point-to-point integrations, creating complex webs of dependencies that were difficult to maintain and scale. Event streaming takes a fundamentally different approach: a central nervous system for enterprise data, through which events flow continuously and are processed as they arrive.

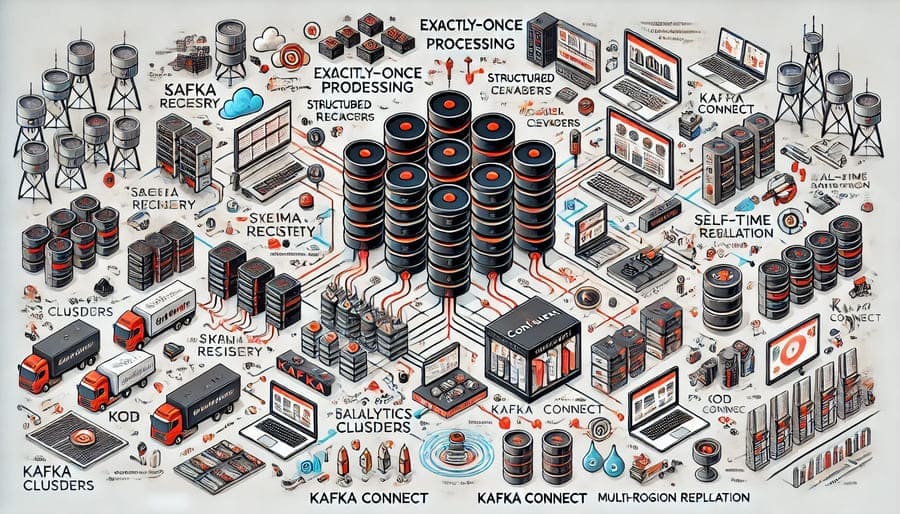

Apache Kafka emerged as the pioneering open-source technology in this space, but enterprises quickly discovered that adopting Kafka at scale required significant expertise and infrastructure investment. This is precisely the gap that Confluent Platform addresses, taking the powerful foundation of Apache Kafka and transforming it into a complete, enterprise-grade event streaming platform.

Confluent Platform is a comprehensive, enterprise-ready distribution of Apache Kafka enhanced with additional components, tools, and services designed for production deployment at scale. Founded by the original creators of Apache Kafka, Confluent has extended the core technology with features that address enterprise requirements around security, reliability, management, and ecosystem integration.

At its core, Confluent Platform enables organizations to:

- Capture and process massive volumes of real-time data streams

- Build event-driven applications that respond instantly to changes

- Create a central, persistent system of record for all enterprise events

- Connect disparate systems and applications through standardized event streams

- Implement robust data pipelines with exactly-once processing guarantees

The foundation of Confluent Platform remains Apache Kafka:

- Brokers: The distributed servers that store and serve data streams

- Topics: Categories or feed names to which records are published

- Partitions: Ordered, append-only logs that split a topic across brokers; ordering is guaranteed within a partition, not across a whole topic

- Producers: Applications that publish events to Kafka topics

- Consumers: Applications that subscribe to topics and process events
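To make the relationship between these pieces concrete, here is a minimal in-memory sketch in Python. It is not how Kafka is implemented: the `Topic` and `Consumer` classes are hypothetical, each partition is a plain list whose index plays the role of the offset, and the key-to-partition mapping uses `zlib.crc32` as a stand-in for the murmur2 hash that Kafka's default partitioner applies to keyed records.

```python
import zlib


class Topic:
    """Hypothetical in-memory topic: a fixed number of append-only partitions."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        # Each partition is an ordered log; a record's list index is its offset.
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Same key -> same partition, which preserves per-key ordering.
        # crc32 stands in for the murmur2 hash Kafka's default partitioner uses.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key: str, value: str) -> tuple:
        """Append a record and return its (partition, offset)."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1


class Consumer:
    """Reads a single partition sequentially, tracking the next offset to read."""

    def __init__(self, topic: Topic, partition: int):
        self.topic = topic
        self.partition = partition
        self.offset = 0

    def poll(self, max_records: int = 10) -> list:
        log = self.topic.partitions[self.partition]
        batch = log[self.offset:self.offset + max_records]
        self.offset += len(batch)  # advance past what was returned
        return batch


orders = Topic("orders", num_partitions=3)
for i in range(5):
    orders.produce("customer-42", f"order-{i}")
orders.produce("customer-7", "order-a")

p = orders.partition_for("customer-42")
consumer = Consumer(orders, p)
print([v for k, v in consumer.poll() if k == "customer-42"])
# ['order-0', 'order-1', 'order-2', 'order-3', 'order-4']
```

Because every record with the key `customer-42` hashes to the same partition, a consumer of that partition sees those orders in the order they were produced; records with other keys may share the partition but never reorder them.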

Confluent extends core Kafka with enterprise features:

- Confluent Replicator: Enables multi-datacenter replication and disaster recovery

- Tiered Storage: Offloads older log segments to object storage, so retention is no longer limited by broker disk

- Self-Balancing Clusters: Automatic partition rebalancing for optimal performance

- Confluent Metrics Reporter: Enhanced monitoring capabilities

- Auto Data Balancer: Earlier, operator-triggered tool for evening out partition placement across brokers; Self-Balancing Clusters now automate this

For data governance and compatibility:

- Schema Registry: Centralized schema management for event data

- Schema Validation: Broker-side checks that produced records reference a valid registered schema

- Schema Evolution: Manages schema changes under configurable compatibility rules (backward, forward, full)

- Avro, Protobuf, and JSON Schema support: Multiple serialization formats
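Schema Registry checks each new schema version against a compatibility rule before accepting it. The sketch below approximates a BACKWARD check for flat, Avro-style record schemas: a consumer using the new schema must be able to read data written with the old one, so added fields need defaults and field types must not change, while removed fields are tolerated. This is a simplification under stated assumptions (no unions, type promotions, aliases, or nested records); `is_backward_compatible` is a hypothetical helper, not the Schema Registry API.

```python
def is_backward_compatible(old_schema: dict, new_schema: dict) -> tuple:
    """Simplified BACKWARD check: can a reader using new_schema decode old data?

    Handles only flat Avro-style records with primitive field types; real Avro
    schema resolution also covers type promotion, unions, aliases, and nesting.
    """
    old_fields = {f["name"]: f for f in old_schema["fields"]}
    problems = []
    for field in new_schema["fields"]:
        name = field["name"]
        if name not in old_fields:
            # Old data carries no value for this field, so the reader needs a default.
            if "default" not in field:
                problems.append(f"added field '{name}' has no default")
        elif old_fields[name]["type"] != field["type"]:
            problems.append(
                f"field '{name}' changed type {old_fields[name]['type']} -> {field['type']}"
            )
    # Fields dropped from new_schema are fine: the reader simply ignores them.
    return (not problems, problems)


v1 = {"type": "record", "name": "Order", "fields": [
    {"name": "id", "type": "string"},
    {"name": "amount", "type": "double"},
]}
v2_ok = {"type": "record", "name": "Order", "fields": [
    {"name": "id", "type": "string"},
    {"name": "amount", "type": "double"},
    {"name": "currency", "type": "string", "default": "USD"},
]}
v2_bad = {"type": "record", "name": "Order", "fields": [
    {"name": "id", "type": "string"},
    {"name": "amount", "type": "string"},
    {"name": "channel", "type": "string"},
]}

print(is_backward_compatible(v1, v2_ok))  # (True, [])
print(is_backward_compatible(v1, v2_bad))
```

The second call reports two problems: `amount` changed type, and `channel` was added without a default.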

To connect with external systems:

- Kafka Connect: Framework for streaming data between Kafka and other systems

- Pre-built Connectors: 100+ ready-to-use connectors for popular data sources and sinks

- Confluent Hub: Central repository of validated connectors

- REST Proxy: Produce and consume event streams over HTTP for systems without a native Kafka client
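Connectors are usually configured declaratively rather than coded. As a sketch, the snippet below builds the JSON body that a Kafka Connect worker's REST API accepts at `POST /connectors` (port 8083 by default) for Confluent's JDBC source connector. The worker address, database URL, table, column, and connector name are hypothetical, and option names can differ between connector versions, so check the connector's own documentation. The request is constructed but not sent.

```python
import json
import urllib.request

CONNECT_URL = "http://localhost:8083/connectors"  # assumed Connect worker address

connector = {
    "name": "orders-jdbc-source",  # hypothetical connector name
    "config": {
        "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
        "tasks.max": "1",
        # Hypothetical database; credentials would normally come from a secrets provider.
        "connection.url": "jdbc:postgresql://db.example.internal:5432/shop",
        "table.whitelist": "orders",
        # Detect new rows via a strictly increasing ID column.
        "mode": "incrementing",
        "incrementing.column.name": "id",
        # Rows from table 'orders' are written to topic 'pg-orders'.
        "topic.prefix": "pg-",
    },
}

request = urllib.request.Request(
    CONNECT_URL,
    data=json.dumps(connector).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)
# urllib.request.urlopen(request) would submit this to a running Connect worker.
print(request.get_method(), request.full_url)  # POST http://localhost:8083/connectors
```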

For real-time data transformation:

- ksqlDB: SQL interface for stream processing

- Kafka Streams: Library for building stream processing applications

- Exactly-once semantics: Idempotent producers and transactions ensure each record affects results exactly once, even when failures force retries

- Stream processing patterns: Templates for common use cases
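Windowed aggregation is one of the most common stream processing patterns. The plain-Python sketch below shows only the core idea of a tumbling (fixed, non-overlapping) window count per key, roughly what a ksqlDB query with `WINDOW TUMBLING (SIZE 1 MINUTE)` and `GROUP BY` computes. It is not the Kafka Streams or ksqlDB API: there are no state stores and no grace period for late records, results are returned at the end rather than emitted as they change, and `tumbling_window_counts` is a hypothetical name.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_ms):
    """Count events per (key, window start) for fixed, non-overlapping windows.

    `events` is an iterable of (key, timestamp_ms) pairs; each event falls into
    exactly one window, identified by that window's start time.
    """
    counts = defaultdict(int)
    for key, ts in events:
        window_start = ts - (ts % window_ms)  # align to the window boundary
        counts[(key, window_start)] += 1
    return dict(counts)


clicks = [
    ("alice", 1_000), ("bob", 15_000), ("alice", 59_999),
    ("alice", 60_000), ("bob", 61_500), ("bob", 119_000),
]
for (user, start), n in sorted(tumbling_window_counts(clicks, 60_000).items()):
    print(f"{user} window@{start}ms: {n}")
# alice window@0ms: 2
# alice window@60000ms: 1
# bob window@0ms: 1
# bob window@60000ms: 2
```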

For enterprise operations:

- Confluent Control Center: Centralized UI for managing and monitoring

- Cluster Linking: Connect and mirror topics across clusters and regions

- Health+: Advanced monitoring and proactive support

- Audit logging: Comprehensive tracking of platform activities

- Role-Based Access Control: Granular security permissions

Confluent Platform enables organizations to handle massive data volumes:

- Horizontal scalability: Add brokers and redistribute partitions onto them to grow capacity

- High throughput: Scale clusters to millions of events per second

- Low latency: Deliver events with millisecond responsiveness

- Effectively unlimited retention: Tiered Storage moves older data to low-cost object storage

- Global deployments: Multi-datacenter and multi-region capabilities
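Partitions are the unit of parallelism behind these scaling properties: within a consumer group, each partition is read by at most one consumer, so adding consumers helps only while there are unassigned partitions. The sketch below assigns one topic's partitions in contiguous ranges, similar in spirit to Kafka's range assignment strategy; `range_assign` is a hypothetical helper, and real assignors also handle multiple topics, rebalancing, and alternative strategies such as round-robin or sticky assignment.

```python
def range_assign(partitions: int, consumers: list) -> dict:
    """Split one topic's partitions into contiguous ranges across a consumer group.

    Consumers are sorted; each gets partitions // len(consumers) partitions, and
    the first partitions % len(consumers) consumers get one extra.
    """
    members = sorted(consumers)
    per_consumer, extra = divmod(partitions, len(members))
    assignment, start = {}, 0
    for i, member in enumerate(members):
        count = per_consumer + (1 if i < extra else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


print(range_assign(6, ["c1", "c2", "c3"]))
# {'c1': [0, 1], 'c2': [2, 3], 'c3': [4, 5]}
print(range_assign(6, ["c1", "c2", "c3", "c4"]))
# {'c1': [0, 1], 'c2': [2, 3], 'c3': [4], 'c4': [5]}
print(range_assign(2, ["c1", "c2", "c3"]))
# {'c1': [0], 'c2': [1], 'c3': []}
```

The last call shows the cap: with two partitions, a third consumer receives nothing and sits idle, which is why partition counts are usually planned with future consumer parallelism in mind.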

For mission-critical applications:

- Distributed architecture: No single point of failure

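- Replication: Each partition is copied to multiple brokers, so data survives the loss of a broker

- Disaster recovery: Cross-region replication and failover

- Data durability: Events are persisted to disk and retained according to configurable retention policies

- Exactly-once processing: Each record's effect on results is applied once, with no loss or duplication, even when retries occur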
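How much replication protects a given write depends on producer and topic settings. The sketch below models the rule a partition leader applies: with `acks=all`, a write is rejected (a `NotEnoughReplicas` error on the producer) when fewer than `min.insync.replicas` replicas are in sync, and otherwise is acknowledged only after all in-sync replicas have it; with `acks=1`, the leader acknowledges after its own write. `produce_outcome` is a hypothetical simplification, not broker code.

```python
def produce_outcome(acks: str, isr_size: int, min_insync_replicas: int) -> str:
    """Simplified model of whether a partition leader accepts a produce request.

    acks="all": rejected outright if fewer than min.insync.replicas replicas are
    in sync; otherwise acknowledged once every in-sync replica has the record.
    acks="1": acknowledged after the leader's own write; followers may not have
    the record yet, so losing the leader can still lose it.
    """
    if acks == "all":
        if isr_size < min_insync_replicas:
            return "rejected: NotEnoughReplicas"
        return f"acknowledged by {isr_size} in-sync replicas"
    if acks == "1":
        return "acknowledged by leader only"
    raise ValueError(f"acks setting not modeled in this sketch: {acks!r}")


# Replication factor 3 with min.insync.replicas=2, a common durability setup.
for isr in (3, 2, 1):
    print(isr, produce_outcome("all", isr, min_insync_replicas=2))
# 3 acknowledged by 3 in-sync replicas
# 2 acknowledged by 2 in-sync replicas
# 1 rejected: NotEnoughReplicas
```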

Meeting enterprise requirements:

- Authentication: Multiple mechanisms including LDAP and OAuth

- Authorization: Fine-grained access controls at all levels

- Encryption: Protect data in transit and at rest

- Audit logging: Track access and changes for compliance

- Data governance: Schema enforcement and evolution management

Reducing complexity and overhead:

- Unified management: Single interface for all components

- Automated operations: Self-balancing, auto-scaling capabilities

- Monitoring and alerting: Proactive issue detection and resolution

- Centralized configuration: Consistent settings across distributed components

- Streamlined upgrades: Simplified version management

Organizations use Confluent Platform to build responsive architectures:

- Decoupling services through event streams

- Implementing CQRS (Command Query Responsibility Segregation) patterns

- Creating event-sourced systems with complete historical state

- Enabling asynchronous communication between services

- Supporting domain-driven design through event collaboration
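In an event-sourced design, current state is derived by replaying the event log from the beginning, and CQRS read models are further projections of the same events, each shaped for the queries it serves. The plain-Python sketch below uses a list to stand in for a topic read from offset 0; the event types, fields, and projection functions are hypothetical.

```python
from collections import Counter

# Hypothetical event log for one topic, in offset order.
events = [
    {"type": "AccountOpened", "account": "A1"},
    {"type": "Deposited", "account": "A1", "amount": 100},
    {"type": "Withdrawn", "account": "A1", "amount": 30},
    {"type": "AccountOpened", "account": "B2"},
    {"type": "Deposited", "account": "B2", "amount": 50},
]


def project_balances(log):
    """Rebuild every account balance by replaying the events in order."""
    balances = {}
    for e in log:
        if e["type"] == "AccountOpened":
            balances[e["account"]] = 0
        elif e["type"] == "Deposited":
            balances[e["account"]] += e["amount"]
        elif e["type"] == "Withdrawn":
            balances[e["account"]] -= e["amount"]
    return balances


def project_activity(log):
    """A second read model over the same log, shaped for a different query."""
    return Counter(e["type"] for e in log)


print(project_balances(events))               # {'A1': 70, 'B2': 50}
print(project_balances(events[:2]))           # {'A1': 100}
print(project_activity(events)["Deposited"])  # 2
```

Replaying only a prefix of the log (`events[:2]`) reconstructs state as of that point, which is what makes complete historical state available.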

For immediate business insights:

- Streaming ETL for continuously updated data warehouses

- Real-time dashboards and visualizations

- Anomaly detection and alerting

- Customer 360 views with instant updates

- Operational metrics and KPIs with minimal latency

As a central nervous system for enterprise data:

- Consolidating data streams from multiple sources

- Breaking down data silos across the organization

- Enabling consistent access to enterprise data

- Supporting polyglot persistence strategies

- Creating a single source of truth for business events

For distributed sensing and processing:

- Ingesting telemetry from millions of connected devices

- Processing sensor data in real-time

- Enabling edge-to-cloud data flows

- Supporting digital twin implementations

- Driving predictive maintenance applications

Success with Confluent Platform starts with proper planning:

- Domain identification: Identify key event domains and data models

- Topic design: Create a logical topic structure and naming convention

- Cluster sizing: Determine capacity requirements based on volume and retention

- Network architecture: Design for appropriate bandwidth and security

- Disaster recovery strategy: Plan for business continuity requirements
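Cluster sizing usually starts with a back-of-the-envelope storage estimate: average ingress rate × retention period × replication factor, plus headroom. The sketch below encodes that arithmetic; the 30% headroom, the 12 TB of usable disk per broker, and the workload figures are illustrative assumptions, and the estimate ignores compression, index files, and Tiered Storage offload. Throughput, partition count, and failure tolerance can each push the broker count higher.

```python
import math


def estimate_cluster_storage(ingress_mb_per_s: float, retention_days: float,
                             replication_factor: int, headroom: float = 0.3) -> float:
    """Rough disk requirement in terabytes (1 TB = 10**6 MB) across all brokers.

    Ignores compression, index files, and Tiered Storage offload; `headroom`
    reserves extra space (30% by default, an assumed safety margin).
    """
    seconds = retention_days * 24 * 60 * 60
    raw_mb = ingress_mb_per_s * seconds * replication_factor
    return raw_mb * (1 + headroom) / 1_000_000


def brokers_needed(total_tb: float, usable_tb_per_broker: float) -> int:
    """Minimum broker count for storage alone (throughput may demand more)."""
    return math.ceil(total_tb / usable_tb_per_broker)


# Hypothetical workload: 50 MB/s average ingress, 7-day retention, RF=3.
total = estimate_cluster_storage(50, 7, 3)
print(f"{total:.1f} TB")          # 117.9 TB
print(brokers_needed(total, 12))  # 10
```

For this example, 50 MB/s × 604,800 s × 3 replicas is about 90.7 TB before headroom.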

Confluent offers flexible deployment models:

- Self-managed: Confluent Platform on on-premises or cloud infrastructure you operate

- Confluent Cloud: Fully managed service on AWS, Azure, or Google Cloud

- Hybrid deployments: Combination of self-managed and cloud services

- Multi-cloud strategies: Span multiple cloud providers

- Edge deployments: Extend to remote or edge locations

For maximum performance and efficiency:

- Partition planning: Design appropriate partitioning for parallelism

- Consumer group design: Organize consumers for optimal workload distribution

- Hardware optimization: Tune storage, network, and memory configurations

- Retention and compaction policies: Choose time- or size-based deletion, or log compaction that keeps the latest record per key

- Resource isolation: Separate critical workloads for reliable performance
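Log compaction (`cleanup.policy=compact`) is the retention strategy for topics that represent the latest state per key. The sketch below shows the end result of fully compacting a log: only the latest record per key survives, offsets are preserved rather than renumbered, and a key whose latest value is null (a tombstone) disappears. Real compaction runs in the background on inactive segments and keeps tombstones for `delete.retention.ms` before removing them; `compact` is a hypothetical helper that skips those details.

```python
def compact(log):
    """Return the fully compacted view of a log of (offset, key, value) records.

    Keeps only the latest record per key, preserves original offsets, and drops
    keys whose latest value is None (a tombstone). Real brokers retain
    tombstones for a while before removing them; this sketch drops them at once.
    """
    latest = {}
    for offset, key, value in log:
        latest[key] = (offset, key, value)  # later records overwrite earlier ones
    survivors = [rec for rec in latest.values() if rec[2] is not None]
    return sorted(survivors)  # back in offset order (offsets are unique)


changelog = [
    (0, "user-1", "email=a@example.com"),
    (1, "user-2", "email=b@example.com"),
    (2, "user-1", "email=a2@example.com"),
    (3, "user-3", "email=c@example.com"),
    (4, "user-2", None),  # tombstone: user-2 deleted
]
for record in compact(changelog):
    print(record)
# (2, 'user-1', 'email=a2@example.com')
# (3, 'user-3', 'email=c@example.com')
```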

While built on Apache Kafka, Confluent Platform adds:

- Enterprise security features: Role-based access control, LDAP integration, and audit logs beyond Kafka’s built-in ACLs

- Operational tools: Simplified management and monitoring

- Extended ecosystem: Additional connectors and integrations

- Advanced features: Tiered Storage, Self-Balancing Clusters, and multi-region clusters

- Commercial support: Enterprise SLAs and expert assistance

Compared to cloud-native messaging services:

- Consistency: Same platform across on-premises and multiple clouds

- Advanced capabilities: Richer feature set than most cloud alternatives

- Ecosystem maturity: Larger community and connector library

- Hybrid flexibility: Seamless operation across environments

- Open standards: Based on open-source technologies

Banks and financial institutions implement Confluent Platform for:

- Real-time fraud detection and prevention

- Trading platforms with strict low-latency requirements

- Risk calculation and compliance reporting

- Customer experience personalization

- Payment processing and settlement systems

Retailers leverage event streaming for:

- Omnichannel inventory management

- Personalized customer recommendations

- Supply chain optimization

- Price and promotion management

- Real-time order processing and fulfillment

Healthcare organizations utilize Confluent Platform for:

- Patient monitoring and alerting

- Healthcare interoperability (FHIR, HL7)

- Clinical decision support systems

- Drug discovery and research data pipelines

- Healthcare compliance and audit tracking

The platform continues to evolve with:

- Enhanced cloud-native features: Better integration with Kubernetes and serverless

- Expanded governance capabilities: More sophisticated data lineage and catalog features

- Advanced stream processing: Richer SQL capabilities in ksqlDB

- Edge computing integration: Seamless data flow from edge to core

- AI/ML enablement: Native support for machine learning workflows

For organizations exploring Confluent Platform:

- Start with Confluent Cloud: Begin with managed services to reduce complexity

- Identify pilot use cases: Select high-value, manageable initial projects

- Build internal expertise: Invest in team training and certification

- Establish patterns and standards: Create reusable approaches for consistency

- Scale incrementally: Expand to additional use cases methodically

Confluent provides comprehensive support:

- Confluent Developer: Educational resources and tutorials

- Confluent Professional Services: Expert implementation assistance

- Confluent Training: Formal education and certification programs

- Kafka Summit: Industry conferences and networking

- Community forums: Peer support and knowledge sharing

Confluent Platform represents a transformative approach to handling enterprise data that aligns closely with the demands of modern digital business. By providing a robust, scalable foundation for real-time event streaming, it enables organizations to move beyond traditional batch processing and point-to-point integrations into a world of dynamic, responsive data flows.

As businesses continue to prioritize digital transformation, real-time analytics, and event-driven architectures, platforms like Confluent that enable immediate access to changing data streams become increasingly critical components of the enterprise technology stack. Whether you’re building microservices, implementing real-time analytics, connecting disparate systems, or pursuing IoT initiatives, Confluent Platform provides a comprehensive solution for enterprise event streaming that can scale with your most demanding requirements.

The shift to event streaming isn’t merely a technical change; it represents a fundamental evolution in how organizations think about and use their data. By embracing this paradigm with Confluent Platform, businesses can unlock new capabilities, create more responsive customer experiences, and gain the agility needed to thrive in today’s rapidly changing digital landscape.

#ConfluentPlatform #ApacheKafka #EventStreaming #DataStreaming #RealTimeData #DataArchitecture #EventDriven #Microservices #StreamProcessing #DataIntegration #ksqlDB #KafkaConnect #DistributedSystems #CloudNative #EnterpriseData