Debezium: Harnessing the Power of Change Data Capture for Real-Time Data Streaming

In today’s data-driven landscape, businesses require not just access to data, but the ability to respond to changes as they happen. Debezium has emerged as a groundbreaking technology that transforms how organizations capture and utilize database changes in real time. This comprehensive guide explores how Debezium’s powerful Change Data Capture (CDC) capabilities are revolutionizing data integration strategies and enabling a new generation of event-driven architectures.

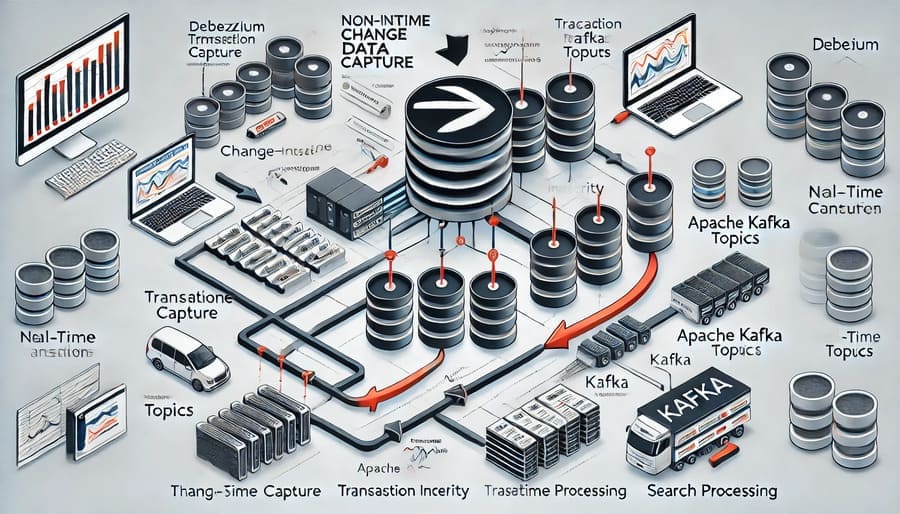

Debezium is an open-source distributed platform that implements Change Data Capture (CDC) to monitor databases and convert row-level changes into event streams. Sponsored by Red Hat, which also distributes it as part of its Red Hat Integration portfolio, Debezium captures the history of data changes by reading database transaction logs, providing a complete and reliable record of modifications with minimal impact on the source systems.

Unlike traditional data integration approaches that rely on periodic polling or triggers, Debezium reads the database’s transaction log directly. That log is the authoritative record of all data changes. This log-based approach captures every insert, update, and delete operation while adding little load to the database and without requiring application modifications.

At the heart of Debezium are its database-specific connectors:

- MySQL Connector: Leverages the binlog for change capture

- PostgreSQL Connector: Utilizes write-ahead logs (WAL) and logical decoding

- SQL Server Connector: Based on the SQL Server Change Data Capture framework

- MongoDB Connector: Monitors the oplog for document changes

- Oracle Connector: Reads from the LogMiner or XStream interfaces

- DB2 Connector: Reads the change-data tables that Db2’s SQL Replication (ASN) capture agent populates from the transaction log

- Cassandra Connector: Tracks changes in Cassandra’s commit log

Debezium is typically deployed with Apache Kafka:

- Kafka Connect Framework: Provides the runtime environment for Debezium connectors

- Kafka Topics: Store change events in a durable, scalable stream

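- Schema registry (for example, Apicurio Registry or Confluent Schema Registry): Stores and versions event schemas when events are serialized with Avro or Protobuf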

- Kafka Streams/ksqlDB: Enable real-time processing of change events
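In a Kafka Connect deployment, each captured table normally gets its own topic. By default, Debezium 2.x names that topic by joining the connector’s `topic.prefix` setting with the schema or database name and the table name. For PostgreSQL the pattern is `<topic.prefix>.<schemaName>.<tableName>`. Debezium 1.x used `database.server.name` where 2.x uses `topic.prefix`. The helper below is a hypothetical illustration of that default convention, not a Debezium API, and topic-routing SMTs can override the result.

```python
def default_topic_name(topic_prefix: str, *name_parts: str) -> str:
    """Join a connector's topic.prefix with the captured table's name parts.

    Hypothetical helper mirroring Debezium's default topic naming, e.g.
    PostgreSQL: <topic.prefix>.<schemaName>.<tableName>
    MySQL:      <topic.prefix>.<databaseName>.<tableName>
    """
    if not topic_prefix:
        raise ValueError("topic.prefix must be non-empty")
    if not name_parts or not all(name_parts):
        raise ValueError("at least one non-empty name part is required")
    return ".".join((topic_prefix, *name_parts))


# A PostgreSQL connector with topic.prefix=inventory capturing public.customers:
print(default_topic_name("inventory", "public", "customers"))  # inventory.public.customers
```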

Debezium produces richly structured change events:

- Before and after states: The row’s state before and after the change (how complete the before image is depends on database settings, such as PostgreSQL’s REPLICA IDENTITY)

- Metadata: Information about the change type, timestamp, transaction context

- Schema information: Structure of the data for proper interpretation

- Source details: Originating database, table, and transaction information
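The following minimal sketch makes this structure concrete. It pulls the envelope fields listed above out of a JSON-serialized change event. The field names (`before`, `after`, `source`, `op`, `ts_ms`) and the operation codes follow Debezium’s documented envelope. The function name and the trimmed sample event are made up for illustration, and real `source` blocks carry many more fields.

```python
import json
from typing import Any, Optional

# Debezium "op" codes: c = create, u = update, d = delete,
# r = read (snapshot), t = truncate.
OP_NAMES = {"c": "create", "u": "update", "d": "delete", "r": "read (snapshot)", "t": "truncate"}


def summarize_change(raw: str) -> Optional[dict[str, Any]]:
    """Extract the main envelope fields from a JSON change event value."""
    event = json.loads(raw)
    if event is None:
        return None  # tombstone: a null value that follows a delete
    # With the JSON converter's schemas enabled the envelope sits under "payload";
    # with schemas disabled the envelope is the top-level object.
    payload = event.get("payload", event)
    source = payload.get("source") or {}
    # PostgreSQL reports a schema; MySQL reports the database as "db".
    qualifier = source.get("schema") or source.get("db")
    return {
        "operation": OP_NAMES.get(payload["op"], payload["op"]),
        "table": f"{qualifier}.{source.get('table')}",
        "before": payload.get("before"),
        "after": payload.get("after"),
        "ts_ms": payload.get("ts_ms"),
    }


# Trimmed, made-up sample; the "schema" part of the message is omitted.
sample = json.dumps({
    "payload": {
        "before": None,
        "after": {"id": 1001, "email": "a@example.com"},
        "source": {"connector": "postgresql", "schema": "public", "table": "customers"},
        "op": "c",
        "ts_ms": 1700000000000,
    }
})
print(summarize_change(sample)["operation"])  # create
```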

Debezium ensures comprehensive change tracking:

- Historical data loading: Option to capture existing data before streaming changes

- Transaction metadata: Optionally emits transaction boundary events and tags each change event with its transaction ID

- Schema evolution handling: Adapts to changing database structures

- Large object support: Handling of BLOB, CLOB, and similar large binary or character columns (support and configuration vary by connector)

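- Delivery guarantees: At-least-once delivery by default, so consumers should tolerate duplicate events after a restart. Exactly-once delivery needs additional configuration, including Kafka Connect’s exactly-once source support, and availability depends on the connector and version.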

For production environments, Debezium offers:

- Low impact capture: Minimal performance effect on source databases

- Fault tolerance: Resilient to network issues or system failures

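- Distributed architecture: Kafka Connect spreads connectors across a cluster of workers. Most Debezium connectors run a single task each, so scaling usually means distributing many connectors rather than parallelizing one.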

- Monitoring hooks: JMX metrics for snapshot and streaming progress, which can be exported to observability platforms

- Security integration: Authentication, encryption, and access control options
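Kafka Connect achieves fault tolerance by resuming from the last committed offset. After a crash, some events can therefore be delivered a second time, and an idempotent consumer is a common complement. The sketch below is hypothetical. It assumes each event carries a position that increases monotonically for a given row; the PostgreSQL connector’s `source.lsn` is one candidate, but confirm this for your connector. The consumer ignores anything at or below the last position it applied for that key.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class IdempotentApplier:
    """Skips change events whose position was already applied for their row key.

    Hypothetical sketch. Assumes positions grow monotonically per row (for
    example the PostgreSQL connector's source.lsn); verify this for your
    connector before relying on it.
    """

    last_position: dict[Any, int] = field(default_factory=dict)
    applied: list[tuple[Any, int]] = field(default_factory=list)

    def handle(self, key: Any, position: int) -> bool:
        """Apply the event if it is new for this key; return whether it was applied."""
        seen = self.last_position.get(key)
        if seen is not None and position <= seen:
            return False  # duplicate or stale redelivery: skip the side effect
        self.applied.append((key, position))  # stand-in for the real side effect
        self.last_position[key] = position
        return True


applier = IdempotentApplier()
print(applier.handle(1, 100))  # True  (first delivery)
print(applier.handle(1, 100))  # False (redelivered after a restart)
```

In a real service, the stored positions must be persisted in the same transaction as the side effect they guard. The in-memory dict only illustrates the check.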

Debezium’s open architecture supports customization:

- Single Message Transformations (SMTs): Modify events during processing

- Custom serialization formats: Support for Avro, JSON, Protobuf

- Filtering capabilities: Selectively capture changes from specific tables or operations

- Pluggable converters: Transform data between different representations

- Event routing: Direct different change events to appropriate destinations
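One example of these customization points is the `ExtractNewRecordState` SMT (`io.debezium.transforms.ExtractNewRecordState`), which Debezium ships. It replaces the change-event envelope with the row’s new state, which suits sinks that expect flat rows. The configuration below combines it with table and column filters. The property names are standard Debezium and Kafka Connect settings; the table and column values are placeholders. `flatten_event` is a simplified local emulation for illustration only. The real SMT has options for delete handling and for copying metadata fields, and this sketch ignores them.

```python
from typing import Any, Optional

# Connector properties enabling the unwrap SMT plus table/column filters.
# Property names are real settings; the values are placeholders.
UNWRAP_CONFIG = {
    "table.include.list": "public.customers,public.orders",
    "column.exclude.list": "public.customers.ssn",
    "transforms": "unwrap",
    "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
}


def flatten_event(envelope: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Simplified local emulation of ExtractNewRecordState (not the real SMT).

    Returns the row's "after" state. Deletes have no "after", so this
    sketch returns None and leaves delete handling to the caller.
    """
    if envelope.get("op") == "d":
        return None
    return envelope.get("after")


print(flatten_event({"op": "u", "before": {"id": 1, "v": "a"}, "after": {"id": 1, "v": "b"}}))
```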

Debezium enables reliable data sharing between services:

- Updating service-specific views based on shared data changes

- Implementing the Command Query Responsibility Segregation (CQRS) pattern

- Propagating reference data across distributed services

- Maintaining consistency in polyglot persistence architectures

- Facilitating service communication without direct dependencies
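To keep a service-specific view in sync, a consumer applies change events in order. Creates, snapshot reads, and updates upsert the row, and deletes remove it. The class below is a hypothetical in-memory sketch of that idea. It assumes `op`/`before`/`after` dicts as produced by Debezium and a primary key column named `id` by default.

```python
from typing import Any


class ReadModel:
    """In-memory view kept in sync by applying change events in order.

    Hypothetical sketch: a real service would persist the view and commit
    its Kafka offsets together with each update.
    """

    def __init__(self, key_field: str = "id") -> None:
        self.key_field = key_field
        self.rows: dict[Any, dict[str, Any]] = {}

    def apply(self, event: dict[str, Any]) -> None:
        op = event["op"]
        if op in ("c", "r", "u"):
            # Creates, snapshot reads, and updates all upsert the new state.
            row = event["after"]
            self.rows[row[self.key_field]] = row
        elif op == "d":
            # Deletes carry the old state (at least the key columns) in "before".
            self.rows.pop(event["before"][self.key_field], None)
        elif op == "t":
            # Truncate events, if the connector is configured to emit them, clear the view.
            self.rows.clear()


view = ReadModel()
view.apply({"op": "r", "before": None, "after": {"id": 1, "name": "Ada"}})
view.apply({"op": "u", "before": {"id": 1, "name": "Ada"}, "after": {"id": 1, "name": "Ada L."}})
print(view.rows)  # {1: {'id': 1, 'name': 'Ada L.'}}
```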

For analytical workloads, Debezium provides:

- Streaming updates to data warehouses for fresh analytics

- Populating search indexes with the latest data

- Feeding machine learning pipelines with current information

- Enabling real-time dashboards and visualizations

- Supporting complex event processing for business insights
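Change events bound for a warehouse are often applied in micro-batches. Only the latest state of each row matters for the load, so a batch can first be compacted to one action per key. The function below is a hypothetical sketch of that step. It assumes events arrive in commit order and that every event carries the key column. The compacted result could then feed a warehouse `MERGE` or an equivalent bulk upsert.

```python
from typing import Any


def compact_batch(events: list[dict[str, Any]], key_field: str = "id") -> dict[Any, tuple[str, Any]]:
    """Reduce a micro-batch of change events to the final action per row key.

    Returns {key: ("upsert", row)} or {key: ("delete", None)}. Hypothetical
    sketch: assumes commit order and that every event carries the key column.
    """
    final: dict[Any, tuple[str, Any]] = {}
    for event in events:
        op = event["op"]
        if op in ("c", "r", "u"):
            row = event["after"]
            final[row[key_field]] = ("upsert", row)  # later states overwrite earlier ones
        elif op == "d":
            final[event["before"][key_field]] = ("delete", None)
    return final


batch = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
]
print(compact_batch(batch))
# {1: ('upsert', {'id': 1, 'status': 'paid'}), 2: ('delete', None)}
```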

Organizations implement Debezium for robust data movement:

- Synchronizing data between on-premises and cloud environments

- Creating cross-region replicas for disaster recovery

- Implementing heterogeneous database replication

- Building real-time ETL pipelines

- Feeding data lakes with change streams for historical analysis
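Heterogeneous replication means translating each change event into the target system’s own write operations. The sketch below uses SQLite as a runnable stand-in for a real target and applies events through its `INSERT ... ON CONFLICT DO UPDATE` upsert, which requires SQLite 3.24 or newer. The function name, the `id` key column, and the pre-created target table are assumptions. Table and column names are interpolated into SQL, so the sketch is only safe when schemas come from trusted sources.

```python
import sqlite3
from typing import Any


def apply_to_sqlite(conn: sqlite3.Connection, table: str, event: dict[str, Any]) -> None:
    """Replicate one change event into a SQLite table keyed on an "id" column.

    Hypothetical sketch: the target table must already exist, and table and
    column names are interpolated into SQL, so they must be trusted.
    """
    op = event["op"]
    if op in ("c", "r", "u"):
        row = event["after"]
        cols = list(row)
        placeholders = ", ".join("?" for _ in cols)
        updates = ", ".join(f"{c} = excluded.{c}" for c in cols if c != "id")
        conflict = f"DO UPDATE SET {updates}" if updates else "DO NOTHING"
        conn.execute(
            f"INSERT INTO {table} ({', '.join(cols)}) VALUES ({placeholders}) "
            f"ON CONFLICT(id) {conflict}",
            [row[c] for c in cols],
        )
    elif op == "d":
        conn.execute(f"DELETE FROM {table} WHERE id = ?", (event["before"]["id"],))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
apply_to_sqlite(conn, "customers", {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}})
apply_to_sqlite(conn, "customers", {"op": "u", "before": {"id": 1, "email": "a@example.com"},
                                    "after": {"id": 1, "email": "b@example.com"}})
print(conn.execute("SELECT email FROM customers WHERE id = 1").fetchone())  # ('b@example.com',)
```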

Debezium serves as the foundation for event-sourced systems:

- Capturing domain events from database changes

- Implementing event sourcing patterns

- Building audit logs and compliance tracking

- Enabling reactive programming models

- Creating event-driven workflows and business processes
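For audit logs, the before and after images of an update are enough to record exactly which columns changed. The function below is a hypothetical sketch. It assumes `before` holds the full old row, which for PostgreSQL typically requires `REPLICA IDENTITY FULL` on the table. With key-only before images, most columns would appear to change from `None`.

```python
from typing import Any


def audit_entry(event: dict[str, Any]) -> dict[str, Any]:
    """Turn a change event into an audit record listing the changed columns.

    Hypothetical sketch: assumes "before" carries the full old row.
    """
    before = event.get("before") or {}
    after = event.get("after") or {}
    changed = {
        col: {"old": before.get(col), "new": after.get(col)}
        for col in sorted(set(before) | set(after))
        if before.get(col) != after.get(col)
    }
    source = event.get("source") or {}
    return {"op": event["op"], "table": source.get("table"), "ts_ms": event.get("ts_ms"), "changed": changed}


update = {
    "op": "u",
    "before": {"id": 7, "email": "old@example.com", "name": "Kim"},
    "after": {"id": 7, "email": "new@example.com", "name": "Kim"},
    "source": {"table": "customers"},
    "ts_ms": 1700000000000,
}
print(audit_entry(update)["changed"])
# {'email': {'old': 'old@example.com', 'new': 'new@example.com'}}
```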

Successful Debezium deployments start with careful planning:

- Database compatibility assessment: Verify support for your specific database version

- Transaction log configuration: Ensure proper retention and access settings

- Network architecture: Design for appropriate bandwidth and security

- Kafka cluster sizing: Allocate sufficient resources for expected event volume

- Schema management strategy: Plan for handling schema evolution
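Transaction log configuration is database-specific. For PostgreSQL, logical decoding needs `wal_level = logical`, plus capacity for the connector’s replication slot and WAL sender (`max_replication_slots`, `max_wal_senders`). For MySQL, Debezium expects binary logging with `binlog_format = ROW` and `binlog_row_image = FULL`. The checker functions below are hypothetical sketches that take settings as a dict of strings, such as values collected from `SHOW` statements. They do not replace the full prerequisite list in the connector documentation.

```python
def check_postgres_cdc_settings(settings: dict[str, str]) -> list[str]:
    """Return problems in PostgreSQL settings needed for logical decoding."""
    problems = []
    if settings.get("wal_level") != "logical":
        problems.append("wal_level must be 'logical'")
    for name in ("max_replication_slots", "max_wal_senders"):
        if int(settings.get(name, "0")) < 1:
            problems.append(f"{name} must be at least 1 (plus any other replication clients)")
    return problems


def check_mysql_cdc_settings(settings: dict[str, str]) -> list[str]:
    """Return problems in MySQL binlog settings that the connector relies on."""
    problems = []
    if settings.get("log_bin", "").upper() not in ("ON", "1"):
        problems.append("binary logging (log_bin) must be enabled")
    if settings.get("binlog_format", "").upper() != "ROW":
        problems.append("binlog_format must be ROW")
    if settings.get("binlog_row_image", "").upper() != "FULL":
        problems.append("binlog_row_image must be FULL")
    return problems


print(check_postgres_cdc_settings({"wal_level": "replica", "max_replication_slots": "10", "max_wal_senders": "10"}))
# ["wal_level must be 'logical'"]
```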

For optimal operation, consider these recommendations:

- Connector tuning: Adjust batch sizes, polling intervals, and buffer settings

- Topic partitioning: Design appropriate partitioning for parallelism

- Resource allocation: Provide sufficient memory and CPU for connectors

- Monitoring setup: Implement comprehensive metrics collection

- Log management: Configure appropriate log levels and rotation
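Batch and buffer tuning maps to concrete connector properties. Debezium connectors expose `max.batch.size`, `max.queue.size`, and `poll.interval.ms`, and the documentation advises keeping `max.queue.size` larger than `max.batch.size`. The validator below is a hypothetical pre-deployment check for those relationships. It assumes no default values and only inspects properties that are actually set.

```python
TUNING_KEYS = ("max.batch.size", "max.queue.size", "poll.interval.ms")


def validate_tuning(config: dict[str, str]) -> list[str]:
    """Hypothetical pre-deployment check for queue, batch, and polling settings."""
    problems = []
    values: dict[str, int] = {}
    for key in TUNING_KEYS:
        if key not in config:
            continue  # unset: the connector's own default applies
        value = int(config[key])  # non-numeric values raise ValueError
        if value <= 0:
            problems.append(f"{key} must be a positive integer")
        values[key] = value
    batch = values.get("max.batch.size")
    queue = values.get("max.queue.size")
    if batch is not None and queue is not None and queue <= batch:
        problems.append("max.queue.size should be larger than max.batch.size")
    return problems


print(validate_tuning({"max.batch.size": "4096", "max.queue.size": "2048"}))
# ['max.queue.size should be larger than max.batch.size']
```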

Address typical hurdles in Debezium implementations:

- Initial synchronization strategies: Manage the initial data load process

- Large transaction handling: Configure for databases with high-volume transactions

- Schema change management: Establish procedures for handling structural changes

- Network reliability: Implement appropriate retry and backoff strategies

- Monitoring and alerting: Create dashboards for system health visualization
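Kafka Connect and the Debezium connectors have their own retry settings, so check the connector documentation before wrapping them in extra retries. Consumer-side code that writes change events to a downstream system commonly uses capped exponential backoff with jitter. The helper below is a generic sketch that retries only on `ConnectionError`; its name and defaults are assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    action: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `action`, retrying on ConnectionError with capped exponential backoff.

    Each wait is drawn uniformly from [0, min(max_delay, base_delay * 2**attempt)]
    ("full jitter"), so many consumers retrying at once do not hit the target in lockstep.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return action()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the helper testable: a test can pass a function that only records the requested delays.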

Compared to conventional data integration, Debezium offers:

- Real-time updates: Continuous, low-latency propagation versus periodic batch processing

- Reduced database impact: Non-intrusive capture versus resource-intensive queries

- Complete change history: Every modification captured versus periodic snapshots

- Event-driven model: Changes emitted as they are committed versus periodic polling queries

- Scalability: Distributed processing versus centralized extraction

When compared with native replication solutions, Debezium provides:

- Heterogeneous targets: Stream to diverse systems versus database-specific replication

- Transformation capabilities: Modify data during capture versus exact duplication

- Open format: Standard event structure versus proprietary formats

- Integration ecosystem: Direct connection to stream processing versus limited endpoints

- Selective replication: Table and column-level filtering versus complete replication
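Debezium’s `table.include.list` takes a comma-separated list of regular expressions. Each expression is matched against the entire fully-qualified table identifier (for example `schemaName.tableName` in PostgreSQL), never against a substring. The function below is a hypothetical emulation of that matching rule, useful for checking a filter before deploying it. It splits naively on commas, so it does not handle patterns that themselves contain commas.

```python
import re


def is_captured(table_id: str, include_list: str) -> bool:
    """Emulate how a table.include.list value selects a fully-qualified table id.

    Each comma-separated entry is a regular expression that must match the
    whole identifier (anchored), not just part of it.
    """
    patterns = [p.strip() for p in include_list.split(",") if p.strip()]
    return any(re.fullmatch(p, table_id) for p in patterns)


include = r"public\.orders, inventory\..*"
print(is_captured("public.orders", include))          # True
print(is_captured("public.orders_archive", include))  # False: no substring matches
```

Escaping the literal dot (`public\.orders`) matters: an unescaped `.` matches any character, so `public.orders` would also select a table such as `publicXorders`.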

Organizations leverage Debezium during cloud transitions:

- Implementing hybrid architectures during migration phases

- Enabling migrations to cloud databases with minimal downtime

- Synchronizing between on-premises and cloud environments

- Replicating changes from the old database to the new one during cutover, instead of relying on application-level dual writes

- Creating cloud-based replicas for disaster recovery

For globally distributed systems, Debezium facilitates:

- Cross-region data synchronization with minimal latency

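- Feeding active-active database configurations (conflict resolution and replication-loop prevention remain the responsibility of the surrounding system)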

- Region-specific read replicas for local access

- Disaster recovery strategies across geographic boundaries

- Global event distribution for worldwide operations

When working with older systems, Debezium provides:

- Non-invasive data extraction without application changes

- Real-time integration between modern and legacy platforms

- Gradual modernization through event-driven architectures

- Reduction of direct legacy system queries

- Creating modern views of legacy data

The Debezium project continues to evolve with:

- Expanded connector ecosystem: Support for additional databases

- Enhanced cloud-native features: Better integration with Kubernetes and serverless

- Improved performance: Optimizations for high-volume environments

- Advanced filtering: More sophisticated selection mechanisms

- Standardized management: Better tooling for deployment and administration

For those ready to explore Debezium:

- Set up Kafka and Kafka Connect: Establish the streaming foundation

- Configure database prerequisites: Enable transaction logging and access

- Deploy Debezium connectors: Install and configure appropriate connectors

- Validate event streams: Verify data flow and event structure

- Implement consumers: Build applications that process the change events
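To deploy a connector, you usually post its configuration to the Kafka Connect REST API (`POST /connectors`, by default on port 8083). The sketch below builds such a request for the PostgreSQL connector using only the Python standard library, without sending it. The connector class and property names are real Debezium 2.x settings. The host, credentials, database, table, and connector name are placeholders.

```python
import json
import urllib.request


def build_registration_request(connect_url: str, name: str, config: dict[str, str]) -> urllib.request.Request:
    """Build (but do not send) a Kafka Connect REST request that creates a connector."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=f"{connect_url.rstrip('/')}/connectors",
        data=body,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


postgres_config = {
    "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
    "plugin.name": "pgoutput",
    "database.hostname": "localhost",   # placeholder
    "database.port": "5432",
    "database.user": "debezium",        # placeholder
    "database.password": "change-me",   # placeholder; use a secrets provider in practice
    "database.dbname": "inventory",     # placeholder
    "topic.prefix": "inventory",        # Debezium 2.x; 1.x used database.server.name
    "table.include.list": "public.customers",
}

request = build_registration_request("http://localhost:8083", "inventory-connector", postgres_config)
# urllib.request.urlopen(request) would submit it to a running Kafka Connect worker.
print(request.get_method(), request.full_url)  # POST http://localhost:8083/connectors
```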

Debezium offers comprehensive support materials:

- Official documentation: Detailed guides for all aspects of the platform

- Community forums: Connect with other users for tips and best practices

- Code examples: Reference implementations for common scenarios

- Tutorials and workshops: Guided learning experiences

- Regular releases: Frequent updates with new features and improvements

Debezium represents a transformative approach to data integration that aligns closely with modern architectural principles. By capturing database changes at their source and converting them into standardized event streams, it enables organizations to build reactive, real-time systems that respond to data changes moments after they are committed.

The technology addresses a fundamental challenge in distributed systems: how to efficiently and reliably share data changes across multiple applications and services. By providing a non-intrusive, scalable solution built on proven open-source technologies, Debezium has become an essential component in the modern data engineering toolkit.

Whether you’re implementing microservices, building event-driven applications, streamlining data integration, or modernizing legacy systems, Debezium’s Change Data Capture capabilities offer a powerful foundation for real-time data streaming. As organizations continue to prioritize agility and responsiveness, technologies like Debezium that enable immediate access to changing data will play an increasingly critical role in the data architecture landscape.

#Debezium #ChangeDataCapture #CDC #DataStreaming #ApacheKafka #EventDrivenArchitecture #RealTimeData #DataIntegration #MicroservicesData #DatabaseReplication #StreamProcessing #OpenSource #DataEngineering #ETLAlternative #EventStreaming