Amazon MSK: Simplifying Enterprise Kafka Deployment in the AWS Ecosystem

In today’s data-driven business landscape, processing real-time streams of information has become a critical competitive advantage, and organizations increasingly turn to event streaming platforms to handle the data generated across their operations. Apache Kafka has emerged as the leading solution in this space, but deploying, scaling, and maintaining Kafka clusters introduces significant operational challenges. Amazon Managed Streaming for Apache Kafka (Amazon MSK) addresses these challenges with a fully managed service for building and running applications that use Apache Kafka to process streaming data. This guide explores how Amazon MSK changes the way enterprises run Kafka in the AWS ecosystem, letting them focus on building streaming applications rather than managing infrastructure.

Before diving into Amazon MSK specifically, it’s important to understand Apache Kafka’s role in modern data architectures and the challenges organizations face when implementing it.

Apache Kafka is an open-source distributed event streaming platform capable of handling trillions of events per day. It combines the simplicity of messaging systems with the benefits of durable storage, providing a unified platform for real-time data pipelines and streaming applications. Key capabilities include:

- Publishing and subscribing to streams of records

- Storing streams of records durably and reliably

- Processing streams as they occur or retrospectively
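The three capabilities above can be illustrated with a toy model. This is a minimal in-memory sketch, not Kafka itself: `TopicLog`, `publish`, and `read_from` are hypothetical names, and a real topic is partitioned, replicated, and persisted to disk. It shows only the core idea that records are appended to a log, kept after they are read, and addressed by offset so that consumers can read live or replay history.

```python
from dataclasses import dataclass, field


@dataclass
class TopicLog:
    """Toy single-partition log: an append-only list addressed by offset."""
    records: list = field(default_factory=list)

    def publish(self, value: str) -> int:
        """Append a record and return the offset it was stored at."""
        self.records.append(value)
        return len(self.records) - 1

    def read_from(self, offset: int) -> list:
        """Return every record at or after `offset` without removing anything."""
        return self.records[offset:]


log = TopicLog()
for event in ("order-created", "order-paid", "order-shipped"):
    log.publish(event)

live_view = log.read_from(2)  # a consumer that is already caught up
replay = log.read_from(0)     # a new consumer reprocessing history
```

Because reading never deletes records, the caught-up consumer and the replaying consumer can work from the same log independently.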

While Kafka provides powerful capabilities, operating it at scale presents several challenges:

- Cluster Management: Setting up, configuring, and maintaining Kafka brokers

- Scaling Complexity: Adding capacity while ensuring data balance

- Monitoring and Operations: Ensuring cluster health and performance

- Security Implementation: Configuring authentication, authorization, and encryption

- Version Management: Staying current with Kafka releases and patches

- Disaster Recovery: Implementing multi-zone or multi-region resilience

These operational burdens can distract organizations from their core business objectives and slow down the development of streaming applications. Amazon MSK was designed to eliminate these burdens, providing the full capabilities of Apache Kafka without the operational overhead.

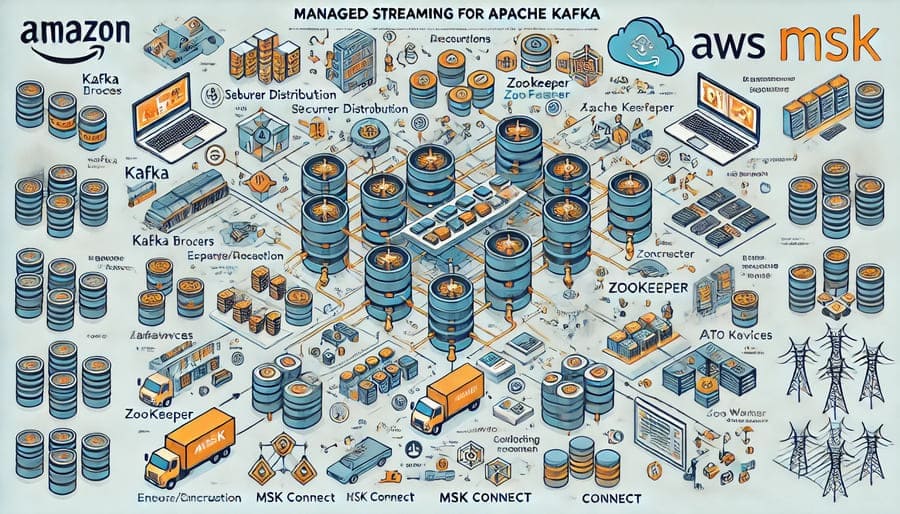

Amazon MSK is a fully managed service that makes it easy to build and run applications using Apache Kafka as a data store and streaming backbone. The service handles the provisioning, configuration, and maintenance of Apache Kafka clusters and their metadata layer (Apache ZooKeeper nodes, or KRaft controllers on newer Kafka versions), providing a complete solution for streaming data processing in the AWS cloud.

Key aspects of Amazon MSK include:

- Native Kafka Compatibility: Uses standard Apache Kafka (not a proprietary implementation)

- AWS Integration: Seamless connection with other AWS services

- Managed Infrastructure: Automated deployment and maintenance

- Enhanced Security: Comprehensive security features integrated with AWS security services

- Scalability: Easily scale your cluster as your needs grow

- High Availability: Built-in redundancy across multiple Availability Zones

By abstracting away the complexity of Kafka infrastructure management, Amazon MSK allows development teams to focus on building applications that derive value from streaming data.

Amazon MSK streamlines the entire lifecycle of Kafka clusters:

- One-Click Deployment: Create production-ready clusters in minutes

- Automatic Configuration: Best-practice settings applied automatically

- Broker Replacement: Failed brokers automatically detected and replaced

- Software Patching: Automated updates with minimal disruption

- Monitoring Integration: Built-in CloudWatch metrics and dashboards

- Right-Sizing Tools: Recommendations for optimal cluster configuration

For organizations with strict security requirements:

- Encryption: Data encrypted in transit and at rest

- Authentication: Support for mutual TLS, SASL/SCRAM, and IAM-based authentication

- Authorization: Apache Kafka ACLs for fine-grained access control

- AWS IAM Integration: Control access to the MSK API and resources

- Private Networking: VPC deployment for network isolation

- AWS PrivateLink: Access MSK without traversing the public internet
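As a rough illustration of how the authentication choice shapes client configuration, the sketch below builds Kafka client properties for three authentication modes that MSK supports. The property names are standard Kafka client settings and the ports are the ones MSK uses for access from within the VPC. The function name, the keystore path, and the broker host names in the test values are hypothetical, and the IAM settings assume a Java client with the `aws-msk-iam-auth` library on its classpath.

```python
# Listener ports MSK exposes inside the VPC for each authentication mode.
PORTS = {"mtls": 9094, "sasl_scram": 9096, "iam": 9098}


def client_properties(auth: str, broker_hosts: list[str]) -> dict[str, str]:
    """Build Kafka client properties for one MSK authentication mode."""
    if auth not in PORTS:
        raise ValueError(f"unsupported auth mode: {auth}")
    props = {
        "bootstrap.servers": ",".join(f"{h}:{PORTS[auth]}" for h in broker_hosts),
    }
    if auth == "mtls":
        props["security.protocol"] = "SSL"
        # Hypothetical path; the keystore holds the client certificate.
        props["ssl.keystore.location"] = "/etc/kafka/client.keystore.jks"
    elif auth == "sasl_scram":
        props["security.protocol"] = "SASL_SSL"
        props["sasl.mechanism"] = "SCRAM-SHA-512"
    else:  # iam
        props["security.protocol"] = "SASL_SSL"
        props["sasl.mechanism"] = "AWS_MSK_IAM"
        props["sasl.jaas.config"] = (
            "software.amazon.msk.auth.iam.IAMLoginModule required;"
        )
        props["sasl.client.callback.handler.class"] = (
            "software.amazon.msk.auth.iam.IAMClientCallbackHandler"
        )
    return props
```

In practice the bootstrap string would come from the cluster itself (for example via the `GetBootstrapBrokers` API) rather than being assembled by hand.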

To handle growing workloads:

- Elastic Scaling: Add broker capacity with minimal disruption

- Storage Auto Scaling: Automatically increase storage as needed

- Provisioned Throughput: Configure the exact capacity required

- High-Performance Hardware: Optimized infrastructure for Kafka workloads

- Monitoring Insights: Identify and address performance bottlenecks

- Tiered Storage: Cost-effective storage for large volumes of data

For mission-critical applications:

- Multi-AZ Deployment: Automatic distribution across Availability Zones

- Replication: Configure topic replication factor for data durability

- Automated Backups: Continuous backup of broker storage

- ZooKeeper Management: Fully managed ZooKeeper nodes

- Health Monitoring: Proactive detection of potential issues

- Service Level Agreement: 99.9% monthly uptime commitment for Multi-AZ deployments
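The replication bullet above is where most of the durability trade-off lives. As a hedged sketch, the helper below (a hypothetical function, not part of any Kafka API) shows how a topic's replication factor and its `min.insync.replicas` setting together determine how many brokers can be unavailable while producers using `acks=all` can still write.

```python
def tolerated_broker_failures(replication_factor: int, min_insync_replicas: int) -> int:
    """Replicas that may be offline while acks=all writes still succeed."""
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("min.insync.replicas must be between 1 and the replication factor")
    # A write is accepted only while at least min.insync.replicas replicas are in sync.
    return replication_factor - min_insync_replicas


# A common layout for a three-AZ cluster: one replica per Availability Zone.
three_az = tolerated_broker_failures(replication_factor=3, min_insync_replicas=2)
```

With a replication factor of 3 and `min.insync.replicas` of 2, one broker (or one Availability Zone) can be lost without blocking writes, which is why this pairing is a frequent starting point.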

Seamless connectivity with the AWS ecosystem:

- AWS Glue Schema Registry: Centralized schema management

- Amazon MSK Connect: Fully managed Kafka Connect for data integration

- AWS Lambda: Trigger serverless functions from Kafka topics

- Amazon Managed Service for Apache Flink (formerly Amazon Kinesis Data Analytics): Process streams with Apache Flink

- AWS IAM: Unified security model across services

- Amazon S3: Archive data for long-term storage

- Amazon VPC: Deploy within your virtual private cloud
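To make the Lambda integration concrete, here is a minimal sketch of a handler for an MSK event source. Lambda delivers records grouped under `<topic>-<partition>` keys with base64-encoded values. The topic name, the payload fields, and the assumption that values are UTF-8 JSON are illustrative only.

```python
import base64
import json


def handler(event, context):
    """Decode every Kafka record in an MSK-triggered Lambda invocation."""
    decoded = []
    # Records arrive grouped under "<topic>-<partition>" keys.
    for batch in event.get("records", {}).values():
        for record in batch:
            payload = base64.b64decode(record["value"]).decode("utf-8")
            decoded.append({
                "topic": record["topic"],
                "partition": record["partition"],
                "offset": record["offset"],
                "body": json.loads(payload),  # assumes JSON-encoded values
            })
    return decoded


# Hypothetical event with a single clickstream record, for local testing.
sample_event = {
    "eventSource": "aws:kafka",
    "records": {
        "clicks-0": [{
            "topic": "clicks",
            "partition": 0,
            "offset": 42,
            "value": base64.b64encode(b'{"user": "u1", "page": "/home"}').decode("ascii"),
        }]
    },
}
result = handler(sample_event, None)
```

A production handler would also need to decide what to do with malformed payloads, since a raised exception causes Lambda to retry the whole batch.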

Organizations leverage MSK for immediate insights:

- Analyzing clickstream data to optimize user experiences

- Processing IoT telemetry for operational dashboards

- Monitoring financial transactions for fraud detection

- Tracking application metrics for performance optimization

- Implementing real-time business intelligence dashboards

For modern application architectures:

- Enabling event-driven communication between services

- Implementing the Command Query Responsibility Segregation (CQRS) pattern

- Creating audit logs of all system activities

- Supporting event sourcing for state management

- Facilitating service choreography without tight coupling
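Event sourcing, mentioned in the list above, is easier to see in code than in prose. The sketch below rebuilds current state by replaying an ordered list of events, which is what a consumer does when it reads a topic from the first offset. The event shapes and the `rebuild_balances` function are hypothetical, and the list stands in for a Kafka topic.

```python
from collections import defaultdict


def rebuild_balances(events: list[dict]) -> dict[str, int]:
    """Fold an ordered event stream into the current balance per account."""
    balances: dict[str, int] = defaultdict(int)
    for event in events:
        if event["type"] == "deposited":
            balances[event["account"]] += event["amount"]
        elif event["type"] == "withdrawn":
            balances[event["account"]] -= event["amount"]
        # Unknown event types are ignored so newer producers don't break replay.
    return dict(balances)


history = [
    {"type": "deposited", "account": "acc-1", "amount": 100},
    {"type": "withdrawn", "account": "acc-1", "amount": 30},
    {"type": "deposited", "account": "acc-2", "amount": 50},
]
state = rebuild_balances(history)
```

The events are the source of truth and the balances are a derived view, so the same history also serves as the audit log.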

As a central hub for enterprise data:

- Implementing Change Data Capture (CDC) from databases

- Synchronizing data between on-premises and cloud environments

- Building real-time ETL pipelines for data warehousing

- Creating data lakes with continuously updated information

- Enabling cross-application data sharing

For machine learning and advanced analytics pipelines:

- Streaming data into training and inference workflows

- Implementing online learning with continuous model updates

- Capturing feature data for real-time scoring

- Processing feedback loops for model improvement

- Distributing ML predictions to operational systems

Successful MSK implementations typically follow these principles:

- Define Clear Use Cases: Identify specific streaming requirements and patterns

- Topic Design: Create a logical organization of topics based on data domains

- Sizing Strategy: Determine appropriate broker count and instance types

- Security Architecture: Plan authentication, authorization, and encryption approaches

- Monitoring Setup: Establish key metrics and alerting thresholds
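For the sizing step, a back-of-the-envelope storage estimate is a reasonable starting point. The sketch below assumes evenly balanced partitions and a steady ingress rate. The function name, the 20% headroom default, and the example workload are illustrative assumptions rather than AWS guidance.

```python
def storage_per_broker_gb(
    ingress_mb_s: float,
    retention_days: float,
    replication_factor: int,
    brokers: int,
    headroom: float = 1.2,
) -> float:
    """Estimate the storage each broker needs, in GB (1 GB = 1000 MB)."""
    retention_seconds = retention_days * 24 * 60 * 60
    # Every byte written is stored once per replica for the full retention window.
    total_mb = ingress_mb_s * retention_seconds * replication_factor
    return total_mb / brokers / 1000 * headroom


# Hypothetical workload: 5 MB/s in, 7 days retained, 3 replicas, 6 brokers.
estimate = storage_per_broker_gb(5, 7, 3, 6)
```

An estimate like this should be validated against observed disk usage once the cluster carries real traffic, since compression and uneven partition sizes both move the number.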

For high-throughput, low-latency operation:

- Partition Planning: Design appropriate partition counts for parallelism

- Instance Selection: Choose instance types based on workload characteristics

- Producer Tuning: Configure batching and compression settings

- Consumer Configuration: Optimize polling and processing patterns

- Networking Considerations: Account for data transfer requirements
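Partition planning is often approached with a throughput rule of thumb: provision enough partitions that neither producers nor consumers become the bottleneck. The sketch below applies that rule. The per-partition throughput figures are assumptions that would have to be measured for a given workload and instance type.

```python
import math


def partitions_needed(
    target_mb_s: float,
    producer_mb_s_per_partition: float,
    consumer_mb_s_per_partition: float,
) -> int:
    """Smallest partition count that meets the target on both sides."""
    for_producers = math.ceil(target_mb_s / producer_mb_s_per_partition)
    for_consumers = math.ceil(target_mb_s / consumer_mb_s_per_partition)
    return max(for_producers, for_consumers)


# Hypothetical measurements: 10 MB/s per partition when producing,
# 5 MB/s per partition when consuming, 100 MB/s target overall.
count = partitions_needed(100, 10, 5)
```

Here the slower consumer side drives the result. Partitions can be added later but not removed, and adding them changes key-to-partition mapping, so some headroom at creation time is usually worthwhile.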

Plan for the typical hurdles in MSK implementations:

- Migration Strategies: Approaches for moving existing Kafka workloads to MSK

- Schema Evolution: Managing data format changes over time

- Cost Optimization: Balancing performance needs with resource efficiency

- Multi-Region Architectures: Implementing global data distribution

- Disaster Recovery Planning: Ensuring business continuity in failure scenarios
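Schema evolution from the list above usually comes down to compatibility rules. As a minimal sketch of backward compatibility, the reader below uses a newer schema version that added a field with a default, so records written under the older version can still be read. The `currency` field, its default, and the function name are hypothetical, and a schema registry would normally enforce the rule rather than application code.

```python
# Fields added in the newer (v2) schema, each with a default value.
SCHEMA_V2_DEFAULTS = {"currency": "USD"}


def read_order(record: dict) -> dict:
    """Read an order with the v2 schema, filling defaults for v1 records."""
    # Values present in the record win over the schema defaults.
    return {**SCHEMA_V2_DEFAULTS, **record}


old_record = read_order({"id": 1, "amount": 10})                     # written with v1
new_record = read_order({"id": 2, "amount": 5, "currency": "EUR"})   # written with v2
```

Adding optional fields with defaults is the change that keeps old data readable. Removing or renaming required fields is what typically breaks consumers.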

MSK Provisioned, the standard deployment model:

- Customizable Configuration: Control broker settings and cluster parameters

- Predictable Pricing: Based on broker hours and storage

- Performance Tuning: Select specific instance types for workloads

- Storage Flexibility: Configure storage per broker

- Multi-AZ Distribution: Automatic distribution for high availability

MSK Serverless, for variable or unpredictable workloads:

- Automatic Scaling: Adjusts capacity based on workload

- Pay-per-Use: Charged based on actual resource consumption

- Minimal Operations: No broker capacity to provision or manage

- Simplified Design: Focus entirely on application logic

- Starter-Friendly: Easier entry point for Kafka adoption

When weighing MSK against self-managed Kafka:

- Operational Overhead: MSK removes most cluster management tasks

- Cost Considerations: Different economic models for infrastructure

- Control vs. Convenience: Trade-offs in configuration flexibility

- Integration Aspects: Native AWS service connections with MSK

- Expertise Requirements: Reduced need for specialized Kafka knowledge

Compared with other managed Kafka offerings:

- AWS Ecosystem Integration: Tighter connection with AWS services

- Security Model: Unified with existing AWS security practices

- Deployment Flexibility: Various configuration options for different needs

- Support Structure: AWS support and service maturity

- Pricing Model: Potential advantages for existing AWS customers

For those ready to explore MSK:

- Create a Cluster: Use the AWS Console, CLI, or CloudFormation

- Configure Security: Set up encryption, authentication, and network controls

- Create Topics: Establish your event streaming structure

- Implement Clients: Develop producer and consumer applications

- Monitor Performance: Set up dashboards and alerts
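For the first two steps, the sketch below assembles the request parameters for a provisioned cluster with TLS enforced in transit. The parameter names follow the MSK `CreateCluster` API as exposed by boto3. The function name, the default Kafka version, instance type, and volume size are illustrative assumptions that should be checked against what MSK currently supports. The block only builds the dictionary and does not call AWS.

```python
def build_cluster_request(
    name: str,
    subnets: list[str],
    security_groups: list[str],
    brokers_per_az: int = 1,
    kafka_version: str = "3.6.0",      # assumption: pick a currently supported version
    instance_type: str = "kafka.m5.large",
    volume_gib: int = 100,
) -> dict:
    """Assemble CreateCluster parameters with one subnet per Availability Zone."""
    if len(subnets) < 2:
        raise ValueError("provide client subnets in at least two Availability Zones")
    return {
        "ClusterName": name,
        "KafkaVersion": kafka_version,
        # The broker count must be a multiple of the number of client subnets.
        "NumberOfBrokerNodes": brokers_per_az * len(subnets),
        "BrokerNodeGroupInfo": {
            "InstanceType": instance_type,
            "ClientSubnets": subnets,
            "SecurityGroups": security_groups,
            "StorageInfo": {"EbsStorageInfo": {"VolumeSize": volume_gib}},
        },
        "EncryptionInfo": {
            "EncryptionInTransit": {"ClientBroker": "TLS", "InCluster": True}
        },
        "EnhancedMonitoring": "PER_BROKER",
    }


# Hypothetical identifiers; with boto3 installed and credentials configured,
# the result could be passed as boto3.client("kafka").create_cluster(**request).
request = build_cluster_request(
    "demo-cluster",
    subnets=["subnet-aaa", "subnet-bbb", "subnet-ccc"],
    security_groups=["sg-123"],
)
```

Keeping the request in code (or in CloudFormation) rather than clicking through the console makes the cluster definition reviewable and repeatable.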

AWS provides comprehensive support for MSK adoption:

- Documentation: Detailed guides and best practices

- Sample Code: Example applications for common patterns

- AWS Workshops: Guided learning experiences

- Reference Architectures: Proven implementation patterns

- Partner Solutions: Third-party tools and integrations

The streaming data ecosystem continues to advance with:

- Increased Automation: More sophisticated self-tuning capabilities

- Enhanced Analytics Integration: Tighter connections with real-time processing

- Serverless Expansion: Growing serverless options for Kafka workloads

- Cross-Region Capabilities: Better support for global applications

- Industry-Specific Solutions: Tailored implementations for vertical markets

Amazon MSK represents a significant step forward in making Apache Kafka accessible and manageable for organizations of all sizes. By eliminating the operational complexity of Kafka while preserving its powerful capabilities, MSK enables businesses to focus on deriving value from their streaming data rather than maintaining infrastructure.

As real-time data processing becomes increasingly central to business operations, services like Amazon MSK will play a vital role in helping organizations build and scale their streaming applications. Whether you’re implementing microservices communication, real-time analytics, data integration, or machine learning pipelines, MSK provides a robust foundation that aligns with AWS best practices and integrates seamlessly with the broader AWS ecosystem.

By applying the features, implementation patterns, and best practices described in this article, organizations can use Amazon MSK to build scalable, reliable streaming architectures that support their business objectives, turning the challenge of real-time data processing into a competitive advantage.
