Apache Kafka: The Backbone of Modern Event-Driven Architectures

In today’s digital landscape, organizations face unprecedented challenges in managing the explosive growth of data generated across their systems. Traditional batch-oriented processing struggles to keep pace with the volume, velocity, and variety of information flowing through modern enterprises. Apache Kafka has emerged as a widely adopted answer to this challenge: a distributed event streaming platform that has transformed how businesses capture, process, and respond to data in real time. This guide explores the architecture, capabilities, and applications of Apache Kafka and explains why it has become a foundation of event-driven systems worldwide.

Apache Kafka is an open-source distributed event streaming platform designed to handle high-throughput, fault-tolerant real-time data feeds. Created by LinkedIn engineers and later donated to the Apache Software Foundation, Kafka was built to address the need for processing massive streams of data with minimal latency while ensuring durability and scalability.

At its core, Kafka serves as a central nervous system for data, enabling organizations to:

- Publish and subscribe to streams of records (similar to a message queue)

- Store streams of records in a fault-tolerant, durable manner

- Process streams of records as they occur or retrospectively

- Integrate data from multiple sources into a unified platform

- Build real-time applications that transform or react to streams of data

Unlike traditional messaging systems, Kafka maintains a distributed commit log that serves as a source of truth, allowing consumers to process data at their own pace while preserving ordering within each partition and keeping records durable.

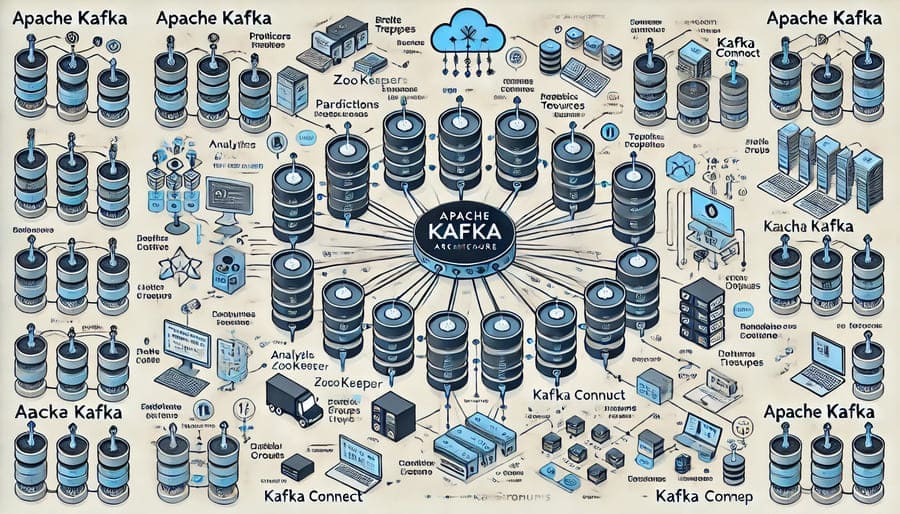

Understanding Kafka requires familiarity with its fundamental building blocks:

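- Topics: Named streams to which records are published; loosely comparable to tables in a database, except that a topic is an append-only log of events rather than a set of mutable rows

- Partitions: Ordered, append-only divisions of a topic that enable parallel processing and horizontal scaling; Kafka guarantees ordering only within a single partition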

- Brokers: Kafka servers that store data and serve client requests

- Producers: Applications that publish records to Kafka topics

- Consumers: Applications that subscribe to topics and process the published records

- Consumer Groups: Sets of consumers that collaborate to consume a topic, with each partition assigned to exactly one consumer in the group at a time

- ZooKeeper: The coordination service that older Kafka versions used to store cluster metadata and provide distributed synchronization; it has been replaced by the built-in KRaft mode and was removed entirely in Kafka 4.0
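The consumer-group rule in the list can be made concrete with a minimal Python sketch of how one topic’s partitions might be divided among a group’s members. It is a simplified model of a range-style assignment (members sorted, contiguous ranges of partitions, the first members taking any remainder); the function name `range_assign` and the member IDs are hypothetical, and in a real cluster the assignment is negotiated through the group coordinator using a configurable assignor.

```python
from typing import Dict, List


def range_assign(num_partitions: int, members: List[str]) -> Dict[str, List[int]]:
    """Split partitions 0..num_partitions-1 of one topic into contiguous ranges.

    Simplified model of a range-style assignor: members are sorted, each gets
    num_partitions // len(members) partitions, and the first
    num_partitions % len(members) members get one extra. Members beyond the
    partition count receive nothing and sit idle.
    """
    if not members:
        return {}
    ordered = sorted(members)
    per_member, remainder = divmod(num_partitions, len(ordered))
    assignment: Dict[str, List[int]] = {}
    start = 0
    for index, member in enumerate(ordered):
        count = per_member + (1 if index < remainder else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


if __name__ == "__main__":
    # Seven partitions shared by three consumers: range sizes 3, 2, 2.
    print(range_assign(7, ["consumer-b", "consumer-a", "consumer-c"]))
    # Four consumers but only two partitions: two consumers stay idle.
    print(range_assign(2, ["c1", "c2", "c3", "c4"]))
```

Because each partition appears in exactly one member’s list, no two consumers in the group process the same partition, and consumers beyond the partition count receive nothing, which is why the partition count caps a group’s parallelism.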

Kafka’s distributed nature provides several critical advantages:

- Horizontal Scalability: Add brokers to increase capacity without downtime, then reassign partitions so the new brokers share the load

- Fault Tolerance: Replicate data across multiple brokers to prevent data loss

- High Throughput: Sustain very high message rates, reaching millions of messages per second on well-provisioned clusters

- Low Latency: Deliver messages with millisecond-level delay

- Durability: Persist messages to disk for reliability and recovery

The architecture employs a distributed commit log model in which each partition is an ordered, immutable sequence of records that is continually appended to, and each record is identified by its sequential position, called its offset. Because each consumer group tracks its own offset, streaming and batch consumers can read the same data at their own pace without affecting one another.
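The commit-log model can be illustrated with a small in-memory Python sketch: one partition is an append-only list in which each record’s position is its offset, and each consumer group keeps its own committed offset, so a fast reader and a slow reader never interfere. The class and method names (`PartitionLog`, `append`, `read`, `commit`) are hypothetical and are not the Kafka client API.

```python
from typing import Dict, List, Tuple


class PartitionLog:
    """Toy model of a single Kafka partition: an append-only sequence of records."""

    def __init__(self) -> None:
        self._records: List[str] = []
        # Committed offset per consumer group: the next offset that group will read.
        self._committed: Dict[str, int] = {}

    def append(self, value: str) -> int:
        """Append a record and return its offset (its position in the log)."""
        self._records.append(value)
        return len(self._records) - 1

    def read(self, group: str, max_records: int) -> List[Tuple[int, str]]:
        """Return up to max_records (offset, value) pairs from the group's position."""
        start = self._committed.get(group, 0)
        end = min(start + max_records, len(self._records))
        return [(offset, self._records[offset]) for offset in range(start, end)]

    def commit(self, group: str, next_offset: int) -> None:
        """Record that the group has processed everything before next_offset."""
        self._committed[group] = next_offset


if __name__ == "__main__":
    log = PartitionLog()
    for event in ["signup", "login", "purchase", "logout"]:
        log.append(event)

    # A real-time consumer reads everything and commits its progress.
    realtime = log.read("realtime", max_records=10)
    log.commit("realtime", realtime[-1][0] + 1)

    # A batch consumer reads only two records; its position is independent.
    batch = log.read("batch", max_records=2)
    log.commit("batch", batch[-1][0] + 1)

    print(realtime)                           # all four records
    print(log.read("batch", max_records=10))  # resumes at offset 2
```

Reading never removes anything from the log; records remain until retention deletes them, which is also what allows a consumer to rewind and reprocess.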

Kafka isn’t just a messaging system—it provides comprehensive stream processing capabilities:

- Kafka Streams: A client library for building applications and microservices that process and analyze data stored in Kafka

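- Exactly-Once Semantics: Through idempotent producers and transactions, guarantees that the results of processing each record are written to Kafka exactly once, even when failures cause retries; effects on external systems need their own safeguards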

- Stateful Processing: Maintain application state for complex event processing like windowing and aggregations

- Interactive Queries: Access state stores directly for point queries without additional database dependencies

- Event-Time Processing: Process records based on when events actually occurred rather than when they arrived
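Windowing and event-time processing work together: a record is assigned to a window by the timestamp at which the event occurred, not the order in which it arrived. The sketch below counts events per key in fixed, non-overlapping (tumbling) windows using plain Python. It is a conceptual model of what a windowed count computes, not Kafka Streams code, and it ignores grace periods and late-record handling; the function name `tumbling_counts` and the sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_counts(
    events: Iterable[Tuple[str, int]], window_ms: int
) -> Dict[Tuple[str, int], int]:
    """Count events per (key, window start) using each event's own timestamp.

    events: (key, event_time_ms) pairs in *arrival* order, which may differ
    from event-time order.
    """
    counts: Dict[Tuple[str, int], int] = defaultdict(int)
    for key, event_time_ms in events:
        # Align the timestamp down to the start of its window.
        window_start = event_time_ms - (event_time_ms % window_ms)
        counts[(key, window_start)] += 1
    return dict(counts)


if __name__ == "__main__":
    # The third event arrives last but happened inside the first minute.
    arrivals = [("sensor-1", 5_000), ("sensor-1", 65_000), ("sensor-1", 30_000)]
    print(tumbling_counts(arrivals, window_ms=60_000))
    # {('sensor-1', 0): 2, ('sensor-1', 60000): 1}
```

Bucketing by arrival order instead would have counted the delayed event in the wrong minute.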

Kafka Connect provides a framework for integrating Kafka with external systems:

- Source Connectors: Import data from external systems into Kafka topics

- Sink Connectors: Export data from Kafka topics to external systems

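- Transformation Capabilities: Modify individual records during ingestion or export using Single Message Transforms (SMTs)

- Extensive Connector Library: Pre-built connectors, largely maintained by the community and vendors, for databases, storage systems, messaging platforms, and more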

- Distributed Mode: Scale connector workloads across a cluster for high throughput
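Single Message Transforms are applied one record at a time, in order, between a connector and Kafka. The Python sketch below models that idea as a chain of plain functions over dictionary records. It is a conceptual illustration only, not the Connect API (real transforms are Java classes configured through connector properties), and the transforms shown are hypothetical, loosely inspired by built-in ones such as `InsertField` and `MaskField`.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, object]
# A transform returns a new record, or None to drop the record entirely.
Transform = Callable[[Record], Optional[Record]]


def insert_field(name: str, value: object) -> Transform:
    """Hypothetical transform: add a static field (e.g. the source system name)."""
    def apply(record: Record) -> Optional[Record]:
        return {**record, name: value}
    return apply


def mask_field(name: str) -> Transform:
    """Hypothetical transform: blank out a sensitive field if present."""
    def apply(record: Record) -> Optional[Record]:
        if name not in record:
            return record
        return {**record, name: "****"}
    return apply


def drop_if(predicate: Callable[[Record], bool]) -> Transform:
    """Hypothetical transform: filter out records matching a predicate."""
    def apply(record: Record) -> Optional[Record]:
        return None if predicate(record) else record
    return apply


def run_chain(records: List[Record], chain: List[Transform]) -> List[Record]:
    """Pass each record through every transform in order; dropped records stop early."""
    output: List[Record] = []
    for record in records:
        current: Optional[Record] = record
        for transform in chain:
            if current is None:
                break
            current = transform(current)
        if current is not None:
            output.append(current)
    return output


if __name__ == "__main__":
    rows = [
        {"id": 1, "email": "a@example.com", "test": False},
        {"id": 2, "email": "b@example.com", "test": True},
    ]
    chain = [drop_if(lambda r: bool(r.get("test"))), mask_field("email"), insert_field("source", "crm-db")]
    print(run_chain(rows, chain))
    # [{'id': 1, 'email': '****', 'test': False, 'source': 'crm-db'}]
```

Each transform returns a new dictionary rather than mutating its input, so the original source rows are left untouched.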

Enterprise environments require robust security, which Kafka provides through:

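- Authentication: TLS client certificates and SASL mechanisms, including GSSAPI (Kerberos), PLAIN, SCRAM, and OAUTHBEARER

- Authorization: Access control lists (ACLs) for fine-grained permissions

- Encryption: TLS for data in transit; encryption at rest relies on disk- or filesystem-level encryption, since Kafka does not encrypt stored data itself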

- Quotas: Limit resource usage by clients to prevent abuse

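- Audit Logging: Record authorization decisions through the broker’s authorizer logger for compliance and troubleshooting; some commercial distributions add richer, structured audit logs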
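Kafka’s ACL evaluation follows a few simple rules: a matching DENY always wins, otherwise a matching ALLOW is required, and a resource with no ACLs at all is denied unless the broker sets `allow.everyone.if.no.acl.found=true`. The Python sketch below models those rules for topic access with literal, prefixed, and wildcard resource names. It is a hypothetical simplification: the `Acl` class and `authorize` function are illustrative, and real ACLs, managed with `kafka-acls.sh` or the Admin API, also filter by host and account for the `All` operation and implied permissions.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Acl:
    principal: str   # e.g. "User:alice", or "User:*" for any user
    operation: str   # e.g. "Read", "Write"
    resource: str    # topic name, name prefix, or "*" for all topics
    prefixed: bool   # True: resource is a name prefix; False: literal name
    allow: bool      # True for ALLOW, False for DENY


def _matches(acl: Acl, principal: str, operation: str, topic: str) -> bool:
    principal_ok = acl.principal in (principal, "User:*")
    operation_ok = acl.operation == operation
    if acl.resource == "*":
        resource_ok = True
    elif acl.prefixed:
        resource_ok = topic.startswith(acl.resource)
    else:
        resource_ok = topic == acl.resource
    return principal_ok and operation_ok and resource_ok


def authorize(acls: List[Acl], principal: str, operation: str, topic: str) -> bool:
    """Any matching DENY wins; otherwise a matching ALLOW is required (default deny)."""
    matching = [acl for acl in acls if _matches(acl, principal, operation, topic)]
    if any(not acl.allow for acl in matching):
        return False
    return any(acl.allow for acl in matching)


if __name__ == "__main__":
    acls = [
        # Analytics may read every topic whose name starts with "orders.".
        Acl("User:analytics", "Read", "orders.", prefixed=True, allow=True),
        # A DENY on the topic holding personal data overrides the prefix ALLOW.
        Acl("User:analytics", "Read", "orders.pii", prefixed=False, allow=False),
    ]
    print(authorize(acls, "User:analytics", "Read", "orders.created"))   # True
    print(authorize(acls, "User:analytics", "Read", "orders.pii"))       # False
    print(authorize(acls, "User:analytics", "Write", "orders.created"))  # False
```

Making DENY take precedence lets a broad prefixed grant coexist with narrow exceptions without reordering rules.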

Organizations leverage Kafka as the backbone for event-driven architectures:

- Decoupling services through event streams instead of direct API calls

- Implementing the Command Query Responsibility Segregation (CQRS) pattern

- Enabling Event Sourcing for complete audit trails and state reconstruction

- Supporting domain-driven design with event collaboration

- Facilitating asynchronous communication between services
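Event sourcing treats the ordered event history, which a Kafka topic can retain, as the record of truth and derives current state by replaying it. The minimal Python sketch below shows that replay step for a hypothetical bank-account domain; the event kinds, fields, and function names are illustrative assumptions. In practice, events for one account would share a record key so they land in the same partition and keep their relative order.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Event:
    account_id: str
    kind: str    # "Deposited" or "Withdrawn" in this hypothetical domain
    amount: int  # minor units (e.g. cents) to avoid floating-point error


def apply_event(balances: Dict[str, int], event: Event) -> Dict[str, int]:
    """Pure state transition: return new balances after one event."""
    current = balances.get(event.account_id, 0)
    if event.kind == "Deposited":
        current += event.amount
    elif event.kind == "Withdrawn":
        current -= event.amount
    else:
        raise ValueError(f"unknown event kind: {event.kind}")
    return {**balances, event.account_id: current}


def replay(events: Iterable[Event]) -> Dict[str, int]:
    """Rebuild current state by folding over the event history in order."""
    state: Dict[str, int] = {}
    for event in events:
        state = apply_event(state, event)
    return state


if __name__ == "__main__":
    history = [
        Event("acct-1", "Deposited", 10_000),
        Event("acct-1", "Withdrawn", 2_500),
        Event("acct-2", "Deposited", 500),
    ]
    print(replay(history))      # {'acct-1': 7500, 'acct-2': 500}
    # Replaying a prefix reconstructs the state as of that point in the log.
    print(replay(history[:1]))  # {'acct-1': 10000}
```

Keeping the transition function pure is what makes replay deterministic, so the same history always yields the same state.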

For immediate insights from streaming data:

- Capturing and processing clickstream data for website analytics

- Monitoring IoT device telemetry for operational insights

- Detecting fraud patterns in financial transactions as they occur

- Analyzing social media sentiment in real time

- Building dynamic dashboards with instantly updated metrics
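Fraud detection on a stream often reduces to a rule evaluated over a recent window of events per key. The Python sketch below flags a card that makes more than a set number of transactions within a sliding time window; the threshold, window length, and the `VelocityRule` class are hypothetical, and the sketch models the per-key state a stream processor would hold rather than any specific Kafka API. It assumes each card’s events arrive in timestamp order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityRule:
    """Flag a card that exceeds max_txns transactions within window_ms."""

    def __init__(self, max_txns: int, window_ms: int) -> None:
        self.max_txns = max_txns
        self.window_ms = window_ms
        # Per-card timestamps of recent transactions (the per-key state).
        self._recent: Dict[str, Deque[int]] = defaultdict(deque)

    def observe(self, card_id: str, timestamp_ms: int) -> bool:
        """Record one transaction; return True if the card is now over the limit."""
        recent = self._recent[card_id]
        recent.append(timestamp_ms)
        # Keep only timestamps in the window (timestamp_ms - window_ms, timestamp_ms].
        while recent and recent[0] <= timestamp_ms - self.window_ms:
            recent.popleft()
        return len(recent) > self.max_txns


if __name__ == "__main__":
    rule = VelocityRule(max_txns=3, window_ms=60_000)
    for ts in (0, 10_000, 20_000, 30_000):
        print(ts, rule.observe("card-42", ts))  # the fourth call prints True
    # Much later, the earlier transactions have left the window.
    print(rule.observe("card-42", 95_000))  # False
```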

Kafka excels as a central hub for enterprise data:

- Creating a single source of truth for critical business events

- Implementing Change Data Capture (CDC) from operational databases

- Feeding data warehouses and lakes with consistent, ordered data streams

- Synchronizing data across hybrid and multi-cloud environments

- Breaking down data silos across organizational boundaries
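Change Data Capture commonly publishes one event per row change, keyed by the row’s primary key, with a null value (a tombstone) for deletes. The Python sketch below shows two consequences of that layout: a downstream consumer can rebuild a replica table by applying events in order, and log compaction (Kafka’s `cleanup.policy=compact`) can discard superseded events while preserving the latest value per key. The event shape is a simplified assumption, not the format of any particular CDC tool; real compaction also runs in the background on inactive segments and retains tombstones for a configurable period before removing them.

```python
from typing import Dict, List, Optional, Tuple

# (primary_key, row) where row is None for a delete (a tombstone).
ChangeEvent = Tuple[str, Optional[Dict[str, object]]]


def apply_changes(events: List[ChangeEvent]) -> Dict[str, Dict[str, object]]:
    """Rebuild a replica table by applying inserts, updates, and deletes in log order."""
    table: Dict[str, Dict[str, object]] = {}
    for key, row in events:
        if row is None:
            table.pop(key, None)  # tombstone: delete the row if present
        else:
            table[key] = row      # insert or update: last write wins
    return table


def compact(events: List[ChangeEvent], drop_tombstones: bool = False) -> List[ChangeEvent]:
    """Keep only the latest event per key, preserving the log order of survivors.

    drop_tombstones=True models the later stage where expired tombstones are removed.
    """
    last_index = {key: i for i, (key, _) in enumerate(events)}
    survivors = [event for i, event in enumerate(events) if last_index[event[0]] == i]
    if drop_tombstones:
        survivors = [event for event in survivors if event[1] is not None]
    return survivors


if __name__ == "__main__":
    log = [
        ("42", {"name": "Ada", "tier": "free"}),
        ("7", {"name": "Lin", "tier": "pro"}),
        ("42", {"name": "Ada", "tier": "pro"}),
        ("7", None),
    ]
    print(apply_changes(log))  # {'42': {'name': 'Ada', 'tier': 'pro'}}
    print(compact(log))        # latest event per key, tombstone for "7" kept
```

The property that matters is that replaying the compacted log yields the same table as replaying the full log, so a new consumer can bootstrap from a much shorter history.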

For large-scale observability:

- Centralizing logs from distributed applications and services

- Processing and analyzing metrics from infrastructure components

- Implementing real-time alerting based on event patterns

- Creating audit trails for security and compliance

- Supporting forensic analysis of system behavior

Successful Kafka deployments typically follow these principles:

- Topic Design: Structure topics based on business domains and event types

- Partitioning Strategy: Choose partition counts and keys to enable parallelism while maintaining order guarantees

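- Replication Configuration: Set the replication factor, `min.insync.replicas`, and producer `acks` to balance durability against resource costs (a common choice is a replication factor of 3 with `min.insync.replicas=2` and `acks=all`)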

- Hardware Sizing: Allocate appropriate CPU, memory, network, and disk resources

- Cluster Topology: Design for failure domains and geographic distribution
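The partitioning principle rests on one rule: records with the same key always map to the same partition, which is what provides per-key ordering. The Python sketch below illustrates that mapping. Kafka’s Java producer hashes the serialized key with murmur2 and takes the result modulo the partition count; to stay within the standard library, this sketch substitutes `zlib.crc32`, so its partition numbers will not match a real cluster’s. The function name `partition_for` is hypothetical.

```python
import zlib
from typing import Dict, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition: hash the key bytes, then take the modulo.

    CRC32 stands in for the murmur2 hash used by Kafka's Java producer.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


if __name__ == "__main__":
    orders = ["cust-1", "cust-2", "cust-1", "cust-3", "cust-1"]
    by_partition: Dict[int, List[str]] = {}
    for key in orders:
        by_partition.setdefault(partition_for(key, 6), []).append(key)
    # Every "cust-1" record lands in the same partition, so their order is preserved.
    print(by_partition)

    # Changing the partition count can change where existing keys map.
    moved = [k for k in ("cust-1", "cust-2", "cust-3") if partition_for(k, 6) != partition_for(k, 8)]
    print("keys remapped when growing from 6 to 8 partitions:", moved)
```

This is why the partition count of a keyed topic is best chosen up front: adding partitions later can send new records for an existing key to a different partition than its older records.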

For high-throughput, low-latency performance:

- Batching: Tune producer `batch.size` and `linger.ms` so messages are sent in larger batches

- Compression: Enable producer compression (`compression.type`) to reduce network and disk usage

- Retention Policies: Set appropriate retention periods based on consumer needs

- Consumer Tuning: Optimize fetch sizes and buffer management

- Monitoring Setup: Implement comprehensive metrics collection for performance analysis
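Batching and compression reinforce each other: a compressor finds more redundancy in a batch of similar records than in any single record, and Kafka compresses whole record batches rather than individual messages. The Python sketch below estimates the effect with the standard library’s `zlib` (the DEFLATE algorithm behind gzip) on hypothetical JSON events. Actual ratios depend on the data and codec; the real knobs are producer settings such as `batch.size`, `linger.ms`, and `compression.type` (`gzip`, `snappy`, `lz4`, or `zstd`).

```python
import json
import zlib
from typing import List


def make_events(count: int) -> List[bytes]:
    """Generate hypothetical, similar-looking JSON click events."""
    return [
        json.dumps({"user_id": f"user-{i % 50}", "page": "/checkout", "action": "click", "seq": i}).encode("utf-8")
        for i in range(count)
    ]


def compressed_size_individually(records: List[bytes]) -> int:
    """Total bytes if every record were compressed on its own."""
    return sum(len(zlib.compress(record)) for record in records)


def compressed_size_batched(records: List[bytes], batch_records: int) -> int:
    """Total bytes if records were grouped into batches and each batch compressed once."""
    total = 0
    for start in range(0, len(records), batch_records):
        batch = b"\n".join(records[start:start + batch_records])
        total += len(zlib.compress(batch))
    return total


if __name__ == "__main__":
    events = make_events(1_000)
    print("raw bytes:            ", sum(len(e) for e in events))
    print("compressed one by one:", compressed_size_individually(events))
    print("compressed in batches:", compressed_size_batched(events, batch_records=100))
```

Compressing small records one at a time gains little, because each compressed block carries its own header and shares no context with its neighbors; batching amortizes both costs.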

Address typical hurdles in Kafka implementations:

- Schema Evolution: Use a schema registry to manage compatible schema changes

- Message Ordering: Kafka orders records only within a partition, so scenarios requiring global ordering need a single partition or application-level sequencing

- Rebalancing Impacts: Minimize disruption during consumer group rebalances

- Disk Space Management: Implement appropriate monitoring and alerting for storage

- Operational Complexity: Consider managed services for reducing administrative overhead
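A schema registry typically enforces a compatibility rule before accepting a new schema version. The Python sketch below checks a simplified form of backward compatibility, meaning a consumer using the new schema can still read data written with the old one: every field the new schema adds must have a default, and fields present in both must keep the same type. Schemas are modeled as plain dictionaries, a hypothetical simplification; real registries apply the full Avro, Protobuf, or JSON Schema resolution rules, including type promotions this sketch ignores.

```python
from typing import Dict, List, Tuple

# field name -> (type name, has_default)
Schema = Dict[str, Tuple[str, bool]]


def backward_compatibility_errors(old: Schema, new: Schema) -> List[str]:
    """Return reasons why readers on `new` could fail on data written with `old`."""
    errors: List[str] = []
    for name, (new_type, has_default) in new.items():
        if name not in old:
            # Old data lacks this field, so the reader needs a default to fill it.
            if not has_default:
                errors.append(f"added field '{name}' has no default")
        elif old[name][0] != new_type:
            errors.append(f"field '{name}' changed type {old[name][0]} -> {new_type}")
    # Fields removed in `new` are acceptable here: the reader simply ignores them.
    return errors


if __name__ == "__main__":
    v1: Schema = {"id": ("string", False), "amount": ("long", False)}
    v2_ok: Schema = {**v1, "currency": ("string", True)}
    v2_bad: Schema = {"id": ("string", False), "amount": ("string", False), "channel": ("string", False)}
    print(backward_compatibility_errors(v1, v2_ok))  # []
    print(backward_compatibility_errors(v1, v2_bad))
```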

The Apache Kafka project includes several integrated components:

- Kafka Brokers: The core servers that store and serve data

- Kafka Connect: Framework for scalable, reliable data integration

- Kafka Streams: Client library for stream processing applications

- ksqlDB: SQL interface for stream processing, built on Kafka Streams by Confluent rather than shipped as part of Apache Kafka

- Admin Tools: Command-line utilities and APIs for management

A rich ecosystem has developed around Kafka:

- Confluent Platform: Enhanced Kafka distribution with additional enterprise features

- Kafdrop/AKHQ: Web UIs for browsing and managing Kafka clusters

- Conduktor: Desktop client for Kafka management

- Lenses.io: Data operations platform for Kafka

- CMAK (formerly Kafka Manager)/Cruise Control: Cluster management and optimization tools

When compared to systems like RabbitMQ or ActiveMQ:

- Durability Model: Kafka’s persistent, append-only log versus queues that remove messages once they are acknowledged

- Throughput Capacity: Typically much higher sustained message rates for large-scale streaming workloads

- Consumer Model: Multiple consumer groups can independently read the same messages, each tracking its own offset

- Retention: Time- or size-based persistence versus deletion once a message has been consumed and acknowledged

- Processing Guarantees: Per-partition ordering and exactly-once semantics within Kafka

In comparison to platforms like Apache Flink or Spark Streaming:

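- Focus: Kafka centers on durable event storage and delivery, with processing available through Kafka Streams, while Flink and Spark focus primarily on computation

- State Management: Kafka Streams keeps state in local stores backed by changelog topics, while Flink and Spark manage state through their own checkpointing mechanisms

- Latency Profiles: Kafka Streams and Flink process records one at a time, while Spark Structured Streaming uses micro-batches by default, trading some latency for throughput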

- Integration Capabilities: Kafka’s extensive connector ecosystem

- Operational Complexity: Different deployment and management considerations

Kafka continues to evolve with enhancements like:

- KRaft Mode: Replacing ZooKeeper with a self-managed quorum

- Tiered Storage: Offloading older log segments to remote storage, such as object stores, to separate storage from compute for improved economics

- Exactly-Once Semantics Improvements: Enhanced transaction support

- Enhanced Security: More sophisticated authentication and authorization options

- Improved Client Libraries: Better support for various programming languages

The community is driving innovation in several areas:

- Kafka-Native Applications: Building entire applications around Kafka’s event-driven model

- Multi-Tenancy: Supporting multiple applications on shared Kafka infrastructure

- Global Kafka: Spanning geographic regions for worldwide event streaming

- Cloud-Native Deployments: Optimizing for Kubernetes and serverless environments

- Edge Computing Integration: Extending Kafka’s reach to edge devices and gateways

For organizations exploring Kafka, a typical approach includes:

- Education Phase: Build understanding of event streaming concepts

- Proof of Concept: Implement a focused use case to demonstrate value

- First Production Deployment: Start with a non-critical application

- Platform Establishment: Create standards and governance for wider adoption

- Organizational Transformation: Shift toward event-driven thinking

Kafka offers extensive learning materials:

- Official Documentation: Comprehensive guides and reference materials

- Apache Kafka Community: Forums and mailing lists for peer support

- Confluent Developer Center: Tutorials and best practices

- Kafka Summit: Industry conferences featuring real-world implementation stories

- Books and Courses: Dedicated educational resources for various experience levels

Apache Kafka has fundamentally transformed how organizations manage and process streaming data. By providing a distributed, scalable, and fault-tolerant event streaming platform, it enables businesses to build real-time applications that respond immediately to the constant flow of events across the enterprise.

As data volumes continue to grow and business requirements increasingly demand real-time capabilities, Kafka’s importance as a foundational technology will only increase. Whether you’re implementing microservices architectures, building real-time analytics, integrating disparate systems, or creating entirely new event-driven applications, Kafka provides the robust, scalable infrastructure needed to succeed in today’s data-intensive world.

By embracing Kafka’s event streaming paradigm, organizations can move beyond traditional batch-oriented thinking toward a more dynamic, responsive approach to data—ultimately enabling faster decisions, more personalized customer experiences, and the agility to adapt quickly to changing market conditions.

#ApacheKafka #EventStreaming #DistributedSystems #RealTimeData #DataEngineering #Microservices #StreamProcessing #EventDriven #DataArchitecture #KafkaStreams #DataIntegration #MessageQueue #OpenSource #BigData #CloudNative