Azure Event Hubs: Unlocking the Power of Real-Time Big Data Streaming

In today’s digital landscape, organizations face an unprecedented flood of data generated at ever-increasing velocity and volume. From IoT devices and mobile applications to enterprise systems and cloud services, the ability to capture, process, and derive insights from this torrent of information in real time has become a critical competitive advantage. Microsoft’s Azure Event Hubs has emerged as a powerful solution to this challenge, offering a scalable, fully managed big data streaming platform that enables organizations to harness the full potential of their streaming data. This comprehensive guide explores how Azure Event Hubs works, its key capabilities, real-world applications, and implementation strategies to help you leverage this platform for your big data initiatives.

Before diving into Azure Event Hubs specifically, it’s important to understand the broader context of big data streaming and event processing.

Traditional data processing often relied on batch methods—collecting data over time and processing it at scheduled intervals. While effective for certain use cases, this approach creates inevitable delays between data generation and actionable insights. In contrast, stream processing enables organizations to analyze and act on data as it’s created, opening up possibilities for real-time decision making, immediate customer engagement, and proactive system monitoring.

Event processing focuses on the individual units of information—events—that represent something happening in the world or within a system. These discrete pieces of data might include user clicks on a website, temperature readings from a sensor, stock price changes, or system log entries. By capturing, routing, and analyzing these events as they occur, organizations can respond to changing conditions with unprecedented speed and agility.

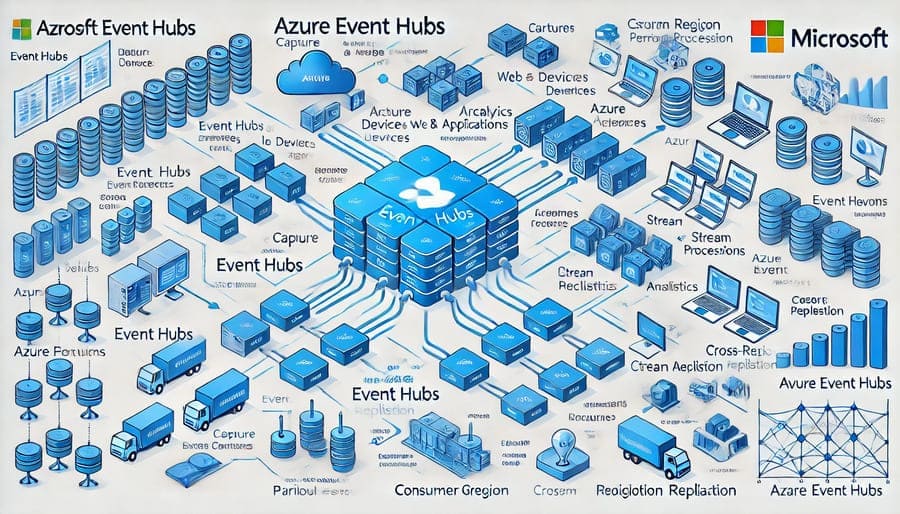

Azure Event Hubs is Microsoft’s cloud-native event ingestion service designed specifically for massive-scale data streaming. It functions as a distributed streaming platform capable of ingesting millions of events per second with low latency. As part of the Azure ecosystem, Event Hubs integrates with other Azure services while providing the performance, reliability, and security features required for enterprise-grade applications.

At its core, Event Hubs serves as the “front door” for an event pipeline—acting as an event ingestor that decouples the production of an event stream from the consumption of those events. This separation enables independent scaling of event publishers and consumers while providing durable storage for received events, allowing for flexible processing models.

Understanding Event Hubs requires familiarity with its fundamental building blocks:

- Event Hubs Namespace: A management container for one or more Event Hub instances that provides a DNS-integrated network endpoint

- Event Hub: A specific streaming endpoint within a namespace that receives and organizes events

- Partitions: Ordered sequences of events that enable parallel processing and scale-out consumption

- Publisher: Any entity that sends data to an Event Hub

- Consumer Group: A view (position or offset) of an entire Event Hub; each consuming application uses its own consumer group so it can read the stream independently and at its own pace

- Throughput Units/Processing Units: Pre-purchased units of capacity (throughput units on the Standard tier, processing units on the Premium tier) that control available ingress and egress bandwidth

The typical flow of data through Event Hubs follows these stages:

- Event Production: Publishers send events to the Event Hub through HTTPS, AMQP, or Apache Kafka protocols

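- Ingestion and Partitioning: Events are assigned to partitions by an explicit partition ID, by a partition key (events sharing a key land in the same partition), or, when neither is specified, distributed across the available partitions by the service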

- Storage: Events are retained in partitions for a configurable retention period and then expire; reading an event does not remove it

- Consumption: Consumer applications read events from specific partitions, tracking their position within the event sequence

- Processing: Downstream systems analyze, transform, or react to the consumed events

This architecture enables massive scale while maintaining order guarantees within each partition, providing the foundation for sophisticated event processing pipelines.
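
The flow above can be sketched as a toy, in-memory model. This is not the Event Hubs SDK: `ToyEventHub`, `send`, and `receive` are hypothetical names, and CRC32 stands in for the service's internal partition-key hashing, so real partition assignments will differ. What it does illustrate is the contract described above: events with the same partition key land in the same partition in order, events without a key are spread across partitions (rotated here), and each consumer group keeps its own read position, so reading never removes events.

```python
from __future__ import annotations

import zlib
from collections import defaultdict


class ToyEventHub:
    """Hypothetical in-memory model of an event hub (not the Azure SDK):
    append-only partitions plus a separate read position per consumer group."""

    def __init__(self, partition_count: int) -> None:
        self.partitions: list[list[str]] = [[] for _ in range(partition_count)]
        # positions[consumer_group][partition_id] -> index of the next event to read
        self.positions: dict[str, dict[int, int]] = defaultdict(lambda: defaultdict(int))
        self._next_partition = 0

    def send(self, body: str, partition_key: str | None = None) -> int:
        """Append an event and return the ID of the partition it landed in."""
        if partition_key is not None:
            # Same key -> same partition, so per-key order is preserved.
            # CRC32 stands in for the service's own hash function.
            pid = zlib.crc32(partition_key.encode("utf-8")) % len(self.partitions)
        else:
            # No key: rotate through the partitions.
            pid = self._next_partition
            self._next_partition = (pid + 1) % len(self.partitions)
        self.partitions[pid].append(body)
        return pid

    def receive(self, consumer_group: str, partition_id: int, max_events: int = 10) -> list[str]:
        """Read from one partition, advancing only this consumer group's position."""
        start = self.positions[consumer_group][partition_id]
        events = self.partitions[partition_id][start:start + max_events]
        self.positions[consumer_group][partition_id] = start + len(events)
        return events


hub = ToyEventHub(partition_count=4)
pid = hub.send("temp=21.5", partition_key="sensor-42")
hub.send("temp=21.7", partition_key="sensor-42")

# Two consumer groups read the same partition independently.
print(hub.receive("dashboard", pid))               # ['temp=21.5', 'temp=21.7']
print(hub.receive("archiver", pid, max_events=1))  # ['temp=21.5']
```

Because a consumer group only moves its own position, adding another downstream application means adding a consumer group rather than duplicating the stream.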

Event Hubs is built from the ground up for high-throughput event ingestion:

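- Elastic Scale: Grow from small workloads to millions of events per second by adding capacity (throughput units, processing units, or dedicated capacity units, depending on the tier)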

- Partition-Based Design: Horizontal scaling through independent, ordered event sequences

- Auto-Inflate: Automatically increase throughput units on the Standard tier as usage grows, up to a configured maximum (it does not scale back down)

- Dedicated Clusters: Provision single-tenant deployments for the most demanding workloads

- Geo-Replication: On the Premium and Dedicated tiers, replicate event data (not only metadata) to a secondary region for regional resilience
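
Auto-inflate, in particular, only ever adds capacity. The sketch below is a simplified model under stated assumptions: it considers only the Standard-tier ingress limit of 1 MB/s per throughput unit (ignoring the events-per-second limit), and it computes demand directly, whereas the real feature reacts to observed throttling. `auto_inflate` is a hypothetical helper, not an Azure API.

```python
import math


def auto_inflate(current_tus: int, max_tus: int, ingress_mb_per_s: float) -> int:
    """Simplified model of Standard-tier auto-inflate.

    Assumes 1 MB/s of ingress per throughput unit and ignores the
    events-per-second limit. Capacity grows toward demand, is capped at
    max_tus, and never shrinks when load falls.
    """
    demand = max(1, math.ceil(ingress_mb_per_s))
    return max(current_tus, min(demand, max_tus))


tus = 2
history = []
for load in [1.5, 4.2, 12.0, 0.3]:  # hypothetical ingress samples in MB/s
    tus = auto_inflate(tus, max_tus=10, ingress_mb_per_s=load)
    history.append(tus)
print(history)  # [2, 5, 10, 10]
```

The last sample shows the one-way behavior: load drops to 0.3 MB/s, but the unit count stays at 10 until someone lowers it manually.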

The platform supports multiple industry-standard protocols:

- AMQP 1.0: Advanced Message Queuing Protocol for efficient, reliable communication

- HTTP/REST: Simple HTTPS-based publishing for broad client compatibility (sending only; consumers read over AMQP or the Kafka protocol)

- Apache Kafka: A Kafka-compatible endpoint (Standard tier and above) that lets existing Kafka clients connect, typically with configuration changes rather than code changes

- Event Hubs SDKs: Libraries for .NET, Java, Python, JavaScript and more
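
To make the Kafka point concrete, the sketch below builds a client configuration in the key style used by librdkafka-based clients such as `confluent-kafka`, following Microsoft's documented pattern: the namespace host name on port 9093, `SASL_SSL` with the `PLAIN` mechanism, the literal username `$ConnectionString`, and the connection string as the password. `kafka_config_for_event_hubs` is a hypothetical helper, and the namespace name and connection string are placeholders; you would pass the resulting dictionary to your Kafka producer or consumer.

```python
from __future__ import annotations


def kafka_config_for_event_hubs(namespace: str, connection_string: str) -> dict[str, str]:
    """Return librdkafka-style settings for an Event Hubs namespace's Kafka endpoint."""
    return {
        # The Kafka endpoint is the namespace host name on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        # Traffic is TLS-encrypted and authenticated with SASL PLAIN.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The username is the literal string "$ConnectionString";
        # the password is the namespace (or event hub) connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


config = kafka_config_for_event_hubs(
    namespace="contoso-streaming",  # placeholder namespace name
    connection_string=(
        "Endpoint=sb://contoso-streaming.servicebus.windows.net/;"
        "SharedAccessKeyName=<policy-name>;SharedAccessKey=<key>"
    ),
)
print(config["bootstrap.servers"])  # contoso-streaming.servicebus.windows.net:9093
```

On the Kafka side, each Event Hub in the namespace appears as a topic with the same name, so producer and consumer code can keep its existing topic logic.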

Beyond basic event ingestion, Event Hubs offers sophisticated capabilities:

- Capture: Automatically archive streaming data to Azure Blob Storage or Data Lake

- Schema Registry: Centralized repository for event schemas, which client-side serializers use to validate events and manage schema evolution

- Geo-Disaster Recovery: Metadata replication between regions for business continuity

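- RBAC and Security: Granular access control through Microsoft Entra ID role-based access control or Shared Access Signatures, plus network isolation options such as private endpoints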

- Event Processing: Integration with Azure Stream Analytics, Functions, and other services
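
Capture writes Avro files whose blob names, by default, follow a `{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}` pattern with UTC times. Assuming that default (the format is configurable per Event Hub), a downstream batch job can compute where a partition's files for a given time window live. `capture_blob_name` is a hypothetical helper, and the namespace and hub names are placeholders.

```python
from datetime import datetime, timezone


def capture_blob_name(namespace: str, event_hub: str, partition_id: int,
                      window_start: datetime) -> str:
    """Blob name for a Capture file, assuming the default naming format.

    window_start must be timezone-aware; it is converted to UTC and
    rendered as zero-padded Year/Month/Day/Hour/Minute/Second segments.
    """
    if window_start.tzinfo is None:
        raise ValueError("window_start must be timezone-aware")
    ts = window_start.astimezone(timezone.utc)
    return f"{namespace}/{event_hub}/{partition_id}/{ts:%Y/%m/%d/%H/%M/%S}.avro"


name = capture_blob_name("contoso-streaming", "telemetry", 3,
                         datetime(2024, 5, 7, 9, 15, 0, tzinfo=timezone.utc))
print(name)  # contoso-streaming/telemetry/3/2024/05/07/09/15/00.avro
```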

For operational excellence, Event Hubs provides:

- Metrics and Diagnostics: Built-in monitoring through Azure Monitor

- Distributed Tracing: End-to-end visibility across the event processing pipeline

- Alerts and Notifications: Proactive notification of anomalous conditions

- Resource Management: Comprehensive control through Azure Portal, CLI, PowerShell, or ARM templates

- Cost Management: Usage tracking and optimization recommendations
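
A signal worth alerting on alongside the built-in metrics is consumer lag: how far a consumer group's last checkpoint trails the newest event in each partition. The sketch below computes it from two plain dictionaries keyed by partition ID; in a real deployment the inputs would come from per-partition properties such as the last enqueued sequence number, which the SDKs expose, and from your checkpoint store. `consumer_lag` and `lagging_partitions` are hypothetical helpers, and a partition without a checkpoint is assumed to be unread from sequence number 0.

```python
from __future__ import annotations


def consumer_lag(last_enqueued: dict[str, int], checkpointed: dict[str, int]) -> dict[str, int]:
    """Events not yet processed per partition, as a sequence-number difference.

    A partition with no checkpoint is treated as unread from sequence
    number 0, so its lag is last_enqueued + 1.
    """
    lag = {}
    for pid, last_seq in last_enqueued.items():
        done = checkpointed.get(pid, -1)
        lag[pid] = max(0, last_seq - done)
    return lag


def lagging_partitions(lag: dict[str, int], threshold: int) -> list[str]:
    """Partition IDs whose lag exceeds the alert threshold."""
    return sorted(pid for pid, n in lag.items() if n > threshold)


lag = consumer_lag({"0": 1500, "1": 980, "2": 40}, {"0": 1490, "1": 120})
print(lag)                                      # {'0': 10, '1': 860, '2': 41}
print(lagging_partitions(lag, threshold=500))   # ['1']
```

A single lagging partition, as here, often points to a hot partition key or a stuck consumer instance rather than a capacity shortage across the whole hub.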

Organizations leverage Event Hubs for Internet of Things scenarios:

- Ingesting telemetry from millions of connected devices

- Monitoring equipment health in manufacturing environments

- Analyzing smart city sensor data for urban optimization

- Processing connected vehicle data for fleet management

- Enabling predictive maintenance through real-time equipment monitoring

For digital experience optimization:

- Capturing website clickstreams for user behavior analysis

- Monitoring mobile app usage patterns and performance

- Collecting and analyzing application logs at scale

- Implementing real-time dashboards for system health

- Detecting anomalies in application performance metrics

The financial sector uses Event Hubs for time-sensitive applications:

- Processing trading platform events and market data feeds

- Implementing real-time fraud detection systems

- Analyzing transaction patterns for risk assessment

- Triggering automated trading algorithms

- Meeting regulatory requirements for data capture and analysis
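
Fraud detection on a transaction stream often starts with simple velocity rules. The sketch below flags a card that makes more than a set number of transactions within a sliding time window. It is a hypothetical, single-process rule (`VelocityCheck` is not part of any Azure service) and it assumes each card's events arrive in time order, which using the card ID as the partition key helps guarantee, since ordering is preserved within a partition.

```python
from __future__ import annotations

from collections import defaultdict, deque


class VelocityCheck:
    """Hypothetical rule: flag a card with more than max_txns transactions
    in any window of window_s seconds (window boundaries are inclusive)."""

    def __init__(self, max_txns: int, window_s: float) -> None:
        self.max_txns = max_txns
        self.window_s = window_s
        self.recent: dict[str, deque] = defaultdict(deque)

    def observe(self, card_id: str, timestamp: float) -> bool:
        """Record a transaction; return True if the card now exceeds the limit."""
        times = self.recent[card_id]
        times.append(timestamp)
        # Drop transactions that have fallen out of the sliding window.
        while times and timestamp - times[0] > self.window_s:
            times.popleft()
        return len(times) > self.max_txns


check = VelocityCheck(max_txns=3, window_s=60)
stream = [("card-1", 0), ("card-1", 10), ("card-2", 15), ("card-1", 20), ("card-1", 30)]
flags = [check.observe(card, ts) for card, ts in stream]
print(flags)  # [False, False, False, False, True]
```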

For immediate business insights:

- Feeding data warehouses with continuous, fresh data

- Powering real-time dashboards and visualizations

- Implementing complex event processing for pattern detection

- Enabling real-time machine learning scoring

- Supporting business process automation through event triggers
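
Dashboards and pattern detection over a stream usually start with windowed aggregation. A service such as Stream Analytics would express this declaratively; the plain-Python sketch below shows the underlying idea of a tumbling (fixed, non-overlapping) window over hypothetical click events. `tumbling_counts` is an illustrative helper, and it ignores late-arriving and out-of-order events, which a production engine has to handle.

```python
from __future__ import annotations

from collections import Counter


def tumbling_counts(events: list[tuple[float, str]], window_s: float) -> dict[float, Counter]:
    """Count events per key in fixed, non-overlapping windows.

    Each (timestamp, key) event belongs to exactly one window, identified
    by its start time: floor(timestamp / window_s) * window_s.
    """
    windows: dict[float, Counter] = {}
    for ts, key in events:
        start = (ts // window_s) * window_s
        windows.setdefault(start, Counter())[key] += 1
    return windows


clicks = [(1.0, "home"), (4.5, "cart"), (9.9, "home"), (10.0, "home"), (17.2, "checkout")]
for start, counts in sorted(tumbling_counts(clicks, window_s=10).items()):
    print(start, dict(counts))
# 0.0 {'home': 2, 'cart': 1}
# 10.0 {'home': 1, 'checkout': 1}
```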

Successful Event Hubs implementations typically follow these principles:

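- Partition Strategy: Choose the partition count and partition keys based on event ordering requirements and consumer parallelism; on the Basic and Standard tiers, the partition count cannot be changed after the Event Hub is created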

- Throughput Planning: Determine appropriate throughput units based on expected volume

- Retention Configuration: Set retention periods according to processing requirements (up to 7 days on Standard, up to 90 days on Premium and Dedicated)

- Consumer Group Design: Create dedicated consumer groups for each consuming application

- Schema Management: Establish event format standards and evolution strategies
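
For throughput planning on the Standard tier, each throughput unit allows up to 1 MB/s or 1,000 events/s of ingress and up to 2 MB/s or 4,096 events/s of egress, whichever limit is reached first. Because every consumer group reads the whole stream, egress grows with the number of consumer groups. The sketch below turns that into a rough estimate; `plan_tus` is a hypothetical helper that assumes a steady load with no burst headroom, and the limits should be checked against current Azure quotas before sizing a production workload.

```python
import math

# Standard-tier limits per throughput unit (TU).
INGRESS_MB_PER_TU = 1.0
INGRESS_EVENTS_PER_TU = 1000
EGRESS_MB_PER_TU = 2.0
EGRESS_EVENTS_PER_TU = 4096


def plan_tus(avg_event_kb: float, events_per_s: float, consumer_groups: int) -> int:
    """Estimate TUs for a steady load, assuming each consumer group reads
    the full stream once (so egress = ingress x consumer_groups)."""
    ingress_mb = events_per_s * avg_event_kb / 1024  # KB/s -> MB/s
    egress_mb = ingress_mb * consumer_groups
    egress_events = events_per_s * consumer_groups
    # The binding constraint is whichever limit needs the most TUs.
    needs = [
        ingress_mb / INGRESS_MB_PER_TU,
        events_per_s / INGRESS_EVENTS_PER_TU,
        egress_mb / EGRESS_MB_PER_TU,
        egress_events / EGRESS_EVENTS_PER_TU,
    ]
    return max(1, math.ceil(max(needs)))


print(plan_tus(avg_event_kb=2, events_per_s=2500, consumer_groups=3))  # 8
```

In this example egress, not ingress, sets the size: about 4.9 MB/s comes in, but three consumer groups pull roughly 14.6 MB/s out.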

For high-throughput, low-latency event processing:

- Batching: Configure publishers to send events in batches rather than individually

- Partition Keys: Use high-cardinality keys so events spread evenly across partitions and avoid “hot partitions”; all events with the same key go to the same partition

- AMQP Connections: Use persistent AMQP connections for efficient publishing

- Prefetch Settings: Tune consumer prefetch counts for optimal throughput

- Parallel Processing: Implement concurrent consumption from multiple partitions
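
Batching and partition keys interact: an SDK batch is typically bound to a single partition or partition key, and its total size is capped (for example, 1 MB on the Standard tier). The sketch below groups events by a partition key and packs them greedily up to a size limit. `build_batches` is an illustrative helper, not an SDK function, and it ignores protocol overhead; in practice the SDKs provide a batch object, such as the `EventDataBatch` returned by `create_batch` in the Python `azure-eventhub` library, that tracks the encoded size for you.

```python
from __future__ import annotations

from collections import defaultdict


def build_batches(events: list[tuple[str, bytes]],
                  max_batch_bytes: int) -> list[tuple[str, list[bytes]]]:
    """Group (partition_key, payload) events into per-key batches whose total
    payload size stays within max_batch_bytes (protocol overhead ignored)."""
    by_key: dict[str, list[bytes]] = defaultdict(list)
    for key, payload in events:
        if len(payload) > max_batch_bytes:
            raise ValueError(f"event for key {key!r} exceeds the batch size limit")
        by_key[key].append(payload)

    batches = []
    for key, payloads in by_key.items():
        current, size = [], 0
        for payload in payloads:
            if size + len(payload) > max_batch_bytes:
                batches.append((key, current))  # flush the full batch
                current, size = [], 0
            current.append(payload)
            size += len(payload)
        if current:
            batches.append((key, current))
    return batches


events = [("device-a", b"x" * 400), ("device-b", b"y" * 100),
          ("device-a", b"x" * 400), ("device-a", b"x" * 400)]
for key, batch in build_batches(events, max_batch_bytes=1000):
    print(key, [len(p) for p in batch])
# device-a [400, 400]
# device-a [400]
# device-b [100]
```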

Common integration approaches with the broader Azure ecosystem:

- Event Hubs + Stream Analytics: Real-time analytics on streaming data

- Event Hubs + Functions: Serverless event processing with automatic scaling

- Event Hubs + Databricks: Advanced analytics and machine learning on streaming data

- Event Hubs + Event Grid: Event-driven reactions to Event Hubs activity, such as triggering processing when Capture writes a new file

- Event Hubs + Power BI: Real-time dashboards and visualizations

While Event Hubs offers Kafka compatibility, the platforms differ in several ways:

- Management Model: Fully managed PaaS vs. self-managed or third-party managed

- Operational Complexity: Simplified operations vs. more complex administration

- Ecosystem Integration: Native Azure integration vs. broader open-source ecosystem

- Cost Structure: Consumption-based pricing vs. infrastructure-based costs

- Feature Parity: Some differences in partitioning models and client features

Within the Microsoft ecosystem, different services address specific needs:

- Event Grid vs. Event Hubs: Event routing vs. high-volume data streaming

- Service Bus vs. Event Hubs: Message queuing vs. event streaming

- IoT Hub vs. Event Hubs: IoT-specific features vs. general-purpose streaming

- Stream Analytics vs. Event Hubs: Processing vs. ingestion (complementary services)

For those ready to explore Event Hubs:

- Set Up Azure Resources: Create an Event Hubs namespace and hub instances

- Configure Access Control: Set up Shared Access Signatures or Microsoft Entra ID (formerly Azure AD) integration

- Implement Publishers: Develop applications to send events to your hub

- Set Up Consumers: Create consumer applications using the Event Processor Client (or your SDK's equivalent consumer with a checkpoint store)

- Monitor Operations: Configure diagnostics and alerts for operational visibility
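
When Shared Access Signatures are used instead of Microsoft Entra ID, clients present a token derived from a named policy and its key. The sketch below follows the token format Microsoft documents for Event Hubs: the URL-encoded resource URI and an expiry time in Unix seconds are joined by a newline, signed with HMAC-SHA256 using the key, and assembled as `SharedAccessSignature sr=<uri>&sig=<signature>&se=<expiry>&skn=<policy>`. `generate_sas_token` is a hypothetical helper, the namespace, hub, policy name, and key are placeholders, and the Azure SDKs generate these tokens for you when given a connection string.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import time
import urllib.parse


def generate_sas_token(resource_uri: str, key_name: str, key: str,
                       ttl_s: int = 3600, now: float | None = None) -> str:
    """Build a Shared Access Signature token for an Event Hubs resource URI."""
    expiry = str(int((time.time() if now is None else now) + ttl_s))
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # String to sign: URL-encoded resource URI, newline, expiry.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    # Base64-encode the HMAC, then URL-encode it for use in the token.
    signature = urllib.parse.quote(base64.b64encode(digest).decode("ascii"), safe="")
    return f"SharedAccessSignature sr={encoded_uri}&sig={signature}&se={expiry}&skn={key_name}"


token = generate_sas_token(
    "https://contoso-streaming.servicebus.windows.net/telemetry",  # placeholder namespace/hub
    key_name="send-only-policy",  # hypothetical policy name
    key="<shared-access-key>",    # placeholder; load from a secret store
    now=1_700_000_000,
)
print(token.split("&se=")[1])  # 1700003600&skn=send-only-policy
```

Short token lifetimes and narrowly scoped policies (send-only for publishers, listen-only for consumers) limit what a leaked token can do.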

Microsoft provides comprehensive support for Event Hubs development:

- Azure SDK Libraries: Official client libraries for multiple programming languages

- Samples and Quickstarts: Ready-to-use code examples for common scenarios

- Documentation: Detailed conceptual and reference documentation

- Learning Paths: Structured learning resources for different skill levels

- Community Support: Forums and community resources for troubleshooting

The event streaming ecosystem continues to advance with:

- Serverless Event Processing: More sophisticated event-triggered computation models

- AI-Powered Stream Processing: Machine learning integrated directly into streaming pipelines

- Edge-to-Cloud Streaming: Distributed processing across edge devices and cloud services

- Event Mesh Architectures: Interconnected event brokers spanning hybrid environments

- Streaming SQL Standards: Evolving query capabilities for stream processing

Azure Event Hubs represents a powerful foundation for organizations looking to harness the value of real-time data streams. By providing a scalable, reliable platform for high-volume event ingestion, it enables a wide range of use cases from IoT telemetry processing to real-time analytics and application monitoring.

As businesses continue to recognize the competitive advantage of responding immediately to events as they happen, platforms like Event Hubs become increasingly essential components of modern data architectures. Whether you’re building IoT solutions, implementing real-time analytics, or modernizing your application monitoring, Azure Event Hubs provides the robust infrastructure needed to handle massive data streams without the operational complexity of managing distributed streaming systems.

By understanding the capabilities, patterns, and best practices described in this article, you can leverage Azure Event Hubs to build scalable, resilient event streaming pipelines that transform raw data streams into valuable business insights and actions—ultimately accelerating your organization’s journey toward becoming truly data-driven in real time.

#AzureEventHubs #BigDataStreaming #RealTimeAnalytics #EventProcessing #IoTData #CloudComputing #DataStreaming #MicrosoftAzure #StreamAnalytics #EventDriven #DataPipelines #KafkaCompatible #CloudNative #DistributedSystems #DataIngestion