IBM Event Streams: Powering Enterprise Event Streaming in the Modern Data Landscape

Organizations today must process, analyze, and respond to large volumes of real-time data. From customer interactions and IoT sensor readings to application logs and transaction records, the ability to act on this continuous flow of events has become a differentiator across industries. IBM Event Streams addresses this need with an enterprise-grade event-streaming platform built on open standards, with the reliability, security, and scalability that large organizations demand. This guide covers how IBM Event Streams supports event-driven architectures and enables real-time applications and analytics.

Before diving into IBM Event Streams specifically, it’s important to understand why event streaming has become so crucial for modern enterprises.

Traditional data processing often relied on batch methods: collecting data over time and processing it at scheduled intervals. While effective for certain use cases, this approach introduces a delay between data generation and business action. In contrast, event streaming lets organizations process and react to data as it is created, enabling real-time decision making, immediate customer engagement, and proactive system monitoring.

Enterprise environments, however, introduce additional complexities:

- Scale requirements: Handling billions of events across global operations

- Security mandates: Meeting strict compliance and data protection standards

- Reliability demands: Ensuring zero data loss for business-critical applications

- Integration needs: Connecting with diverse legacy and modern systems

- Operational considerations: Providing comprehensive monitoring and management

IBM Event Streams was designed specifically to address these enterprise requirements while leveraging the power of open-source technology.

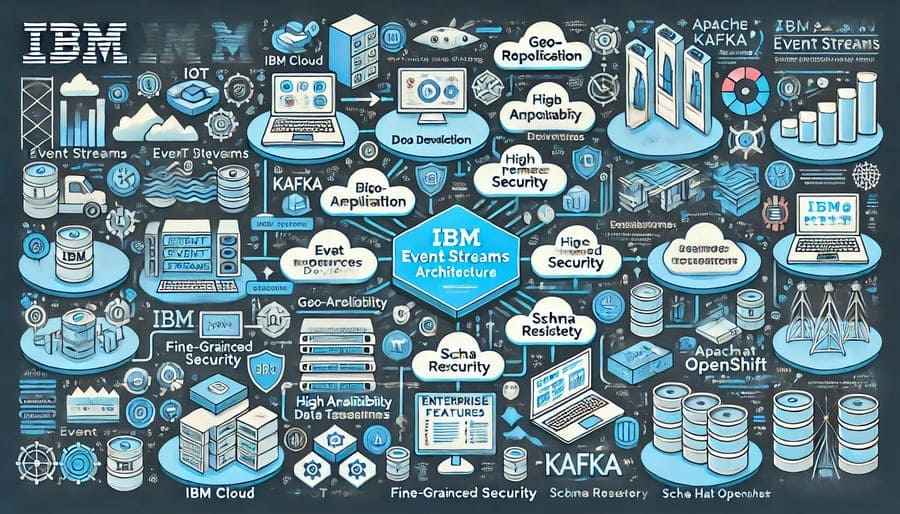

IBM Event Streams is an enterprise-ready event-streaming platform based on Apache Kafka. It provides a highly available, secure implementation of Kafka with added enterprise features for production deployment at scale. It is offered as a fully managed service on IBM Cloud and as part of IBM Cloud Pak for Integration on Red Hat OpenShift, delivering consistent capabilities across cloud, on-premises, and hybrid environments.

At its core, IBM Event Streams enables organizations to:

- Build real-time data pipelines connecting diverse systems

- Implement event-driven architectures for responsive applications

- Stream data to analytics platforms for immediate insights

- Create resilient microservices communication channels

- Establish a central, governed event backbone for the enterprise

IBM Event Streams provides the dependability required for mission-critical applications:

- High Availability: Distributed architecture with no single point of failure

- Data Replication: Configurable topic replication for durability

- Geo-Replication: Replicate data across regions for disaster recovery

- Persistent Storage: Reliable message persistence and retention

- Transactional Capabilities: Support for exactly-once processing semantics through Kafka’s idempotent producers and transactions
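
To make the replication bullet concrete, here is a minimal sketch of how two standard Kafka settings interact: a topic's replication factor and its `min.insync.replicas` value. The class name `TopicDurability` and the numbers are illustrative assumptions, not Event Streams defaults; the arithmetic reflects general Kafka behavior for producers using `acks=all`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TopicDurability:
    """Replication settings for one topic (values used below are illustrative)."""
    replication_factor: int    # copies of each partition across brokers
    min_insync_replicas: int   # Kafka topic setting `min.insync.replicas`

    def __post_init__(self) -> None:
        if not 1 <= self.min_insync_replicas <= self.replication_factor:
            raise ValueError("need 1 <= min_insync_replicas <= replication_factor")

    def write_failures_tolerated(self) -> int:
        """Replicas that can be offline while acks=all writes still succeed."""
        return self.replication_factor - self.min_insync_replicas

    def copies_guaranteed_per_ack(self) -> int:
        """Minimum replicas holding a record once an acks=all write is acknowledged."""
        return self.min_insync_replicas


topic = TopicDurability(replication_factor=3, min_insync_replicas=2)
print(topic.write_failures_tolerated())   # 1
print(topic.copies_guaranteed_per_ack())  # 2
```

With three replicas and a minimum of two in sync, one broker can fail without blocking producers, and every acknowledged record exists on at least two brokers.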

For organizations with strict security requirements:

- Authentication: Multi-factor authentication and LDAP/Active Directory integration

- Authorization: Fine-grained access control at topic and consumer group levels

- Encryption: Data encryption in transit and at rest

- Audit Logging: Detailed tracking of system access and operations

- Compliance: Support for regulatory requirements like GDPR, HIPAA, and PCI DSS

To handle enterprise workloads:

- Horizontal Scaling: Add brokers to increase capacity, then reassign partitions so the new brokers share the load

- Throughput Optimization: Handle millions of messages per second

- Partition Management: Distribute topic partitions for parallel processing

- Resource Controls: Quotas and throttling to prevent resource contention

- Performance Monitoring: Real-time metrics on throughput and latency
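
The partition management point can be illustrated with a small sketch of key-based partition assignment. Records with the same key go to the same partition, which preserves per-key ordering while different keys are processed in parallel. The function `partition_for` is hypothetical, and CRC32 is a stand-in: Kafka's Java client hashes keys with murmur2, so the partition numbers here will not match a real cluster.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index.

    Stand-in hash: Kafka's Java client uses murmur2, not CRC32. Only the
    "hash the key, take it modulo the partition count" idea carries over.
    """
    if num_partitions < 1:
        raise ValueError("num_partitions must be >= 1")
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Hypothetical events as (key, payload); the account ids are made up.
events = [("acct-1", "debit"), ("acct-2", "credit"), ("acct-1", "refund")]

by_partition = defaultdict(list)
for key, payload in events:
    by_partition[partition_for(key, num_partitions=6)].append((key, payload))

# Both acct-1 events land in the same partition, in the order they were sent.
p = partition_for("acct-1", 6)
print([payload for key, payload in by_partition[p] if key == "acct-1"])  # ['debit', 'refund']
```

One consequence of the modulo step is that changing the partition count remaps keys, so ordering guarantees only hold while the count stays fixed.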

For productive application development:

- Schema Registry: Centralized schema management and evolution

- REST API: Simple HTTP interface for producing and consuming messages

- Native Kafka API Compatibility: Support for existing Kafka applications

- Event Streams Toolkit: Integration with IBM Cloud Pak for Integration

- Starter Applications: Sample code and tutorials for common patterns

For IT operations and platform teams:

- Management UI: Comprehensive web console for administration

- Monitoring Integration: Prometheus and Grafana metrics

- Health Checks: Automated system health verification

- Alerting: Proactive notification of potential issues

- Capacity Planning: Tools for workload analysis and growth planning

For seamless data integration:

- Pre-built Connectors: Ready-to-use connectors for common systems

- Custom Connector Support: Framework for developing specialized connectors

- Connector Management: GUI and API for configuring and monitoring connectors

- Transformation Support: Modify data during ingestion or export

- Distributed Operation: Scale connector processing across multiple workers
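
As a rough illustration of connector configuration with a transformation, the sketch below builds a connector definition in the shape accepted by the Apache Kafka Connect REST API (`POST /connectors`). It uses the file source connector and two single message transforms that ship with Apache Kafka. The connector name, file path, and topic are placeholders, and a given Event Streams deployment may expose connector configuration through its own UI or platform resources instead, so treat this as the plain Kafka Connect form only.

```python
import json

# Connector definition in the shape used by Apache Kafka Connect's REST API.
# The name, file path, and topic are placeholders, not real resources.
connector = {
    "name": "example-file-source",
    "config": {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",
        "file": "/tmp/example-input.txt",
        "topic": "example-topic",
        # Transform chain: wrap each line in a map, then add a static field.
        "transforms": "MakeMap,InsertSource",
        "transforms.MakeMap.type": "org.apache.kafka.connect.transforms.HoistField$Value",
        "transforms.MakeMap.field": "line",
        "transforms.InsertSource.type": "org.apache.kafka.connect.transforms.InsertField$Value",
        "transforms.InsertSource.static.field": "data_source",
        "transforms.InsertSource.static.value": "example-file-source",
    },
}

# Serialize to the JSON body a Connect worker would receive; nothing is sent here.
payload = json.dumps(connector, indent=2)
print(payload)
```

The `tasks.max` setting is where distributed operation comes in: for connectors that support it, a higher value lets Connect spread work across multiple workers.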

Banks and financial institutions implement IBM Event Streams for:

- Real-time fraud detection by analyzing transaction patterns as they occur

- Trading platforms requiring low-latency market data distribution

- Payment processing systems with guaranteed message delivery

- Customer 360 views combining data from multiple systems

- Regulatory reporting with complete audit trails

Retailers leverage event streaming for:

- Personalized customer experiences based on real-time behavior

- Inventory management with immediate updates across channels

- Dynamic pricing adjustments responding to market conditions

- Supply chain optimization through event-driven coordination

- Cross-channel customer journey tracking

Industrial applications include:

- IoT sensor data integration from factory equipment

- Predictive maintenance using real-time condition monitoring

- Quality control through continuous process analysis

- Supply chain visibility with event-based tracking

- Energy optimization through immediate consumption feedback

Medical organizations utilize Event Streams for:

- Patient monitoring with real-time alerts for critical conditions

- Healthcare interoperability connecting diverse clinical systems

- Medical device data integration and analysis

- Pharmaceutical supply chain tracking for compliance

- Population health management through event processing

Successful Event Streams implementations typically follow these principles:

- Topic Design: Create a logical topic structure based on business domains

- Partitioning Strategy: Determine partition counts based on throughput and ordering requirements

- Retention Policies: Set appropriate retention periods for different data types

- Deployment Model: Choose between cloud, on-premises, or hybrid approaches

- Integration Architecture: Plan how Event Streams fits in your overall data landscape
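
The partitioning and retention decisions above come down to simple arithmetic. The sketch below uses a common rule of thumb for partition counts (target throughput divided by the slower of per-partition producer and consumer throughput) and a back-of-the-envelope retention estimate. Both function names are hypothetical, and every input should come from your own benchmarks; the numbers shown are illustrative only.

```python
import math


def partitions_needed(target_mb_s: float, producer_mb_s: float, consumer_mb_s: float) -> int:
    """Partitions required so neither producers nor consumers are the bottleneck.

    producer_mb_s and consumer_mb_s are the measured throughput of a single
    partition on each side.
    """
    if min(target_mb_s, producer_mb_s, consumer_mb_s) <= 0:
        raise ValueError("throughput values must be positive")
    return math.ceil(max(target_mb_s / producer_mb_s, target_mb_s / consumer_mb_s))


def retention_storage_gb(ingress_mb_s: float, retention_hours: float, replication_factor: int) -> float:
    """Approximate disk needed to retain a topic, ignoring compression and index overhead."""
    seconds = retention_hours * 3600
    return ingress_mb_s * seconds * replication_factor / 1000


# Illustrative numbers only.
print(partitions_needed(target_mb_s=100, producer_mb_s=20, consumer_mb_s=10))          # 10
print(retention_storage_gb(ingress_mb_s=5, retention_hours=24, replication_factor=3))  # 1296.0
```

Ordering requirements pull the other way: since ordering is only preserved within a partition, more partitions mean more parallelism but ordering across a narrower slice of the data.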

For high-throughput, low-latency streaming:

- Producer Configuration: Optimize batch sizes and compression settings

- Consumer Tuning: Configure appropriate fetch sizes and processing patterns

- Topic Settings: Adjust replication factors and partition counts for workloads

- JVM Configuration: Tune garbage collection and memory allocation

- Network Considerations: Address bandwidth and latency requirements
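
As a starting point for the producer and consumer items, the sketch below collects the standard Apache Kafka client properties that control batching, compression, and fetch behavior. The property names are real Kafka client settings; the values are illustrative assumptions rather than recommendations, and `to_properties` is a hypothetical helper for rendering them.

```python
# Illustrative starting points, not recommendations: tune against your own workload.
producer_config = {
    "acks": "all",               # wait for all in-sync replicas to acknowledge
    "compression.type": "lz4",   # valid values: none, gzip, snappy, lz4, zstd
    "batch.size": 65536,         # maximum bytes per partition batch
    "linger.ms": 10,             # wait up to 10 ms for a batch to fill
}

consumer_config = {
    "fetch.min.bytes": 1024,     # broker holds the fetch until this much data is ready...
    "fetch.max.wait.ms": 500,    # ...or until this much time has passed
    "max.poll.records": 500,     # upper bound on records returned per poll()
}


def to_properties(config: dict) -> str:
    """Render a config mapping as Java-style .properties text, sorted by key."""
    return "\n".join(f"{key}={value}" for key, value in sorted(config.items()))


print(to_properties(producer_config))
print(to_properties(consumer_config))
```

Larger batches and a nonzero `linger.ms` trade a little latency for throughput, so the right values depend on which of the two the workload prioritizes.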

For smooth day-to-day operations:

- Monitoring Setup: Implement comprehensive metrics collection

- Capacity Management: Regularly review resource utilization and growth

- Backup Strategies: Establish procedures for configuration and data backups

- Upgrade Planning: Create processes for non-disruptive version upgrades

- Disaster Recovery: Test recovery procedures regularly
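
One metric worth including in any monitoring setup is consumer lag: the gap between the latest offset written to a partition and the offset a consumer group has committed. The sketch below shows the calculation on a hypothetical snapshot. The function names and offsets are assumptions for illustration; in practice the offsets would come from your monitoring stack or Kafka's admin tooling.

```python
def consumer_lag(end_offsets: dict[int, int], committed: dict[int, int]) -> dict[int, int]:
    """Per-partition lag: log end offset minus the group's committed offset.

    A partition with no committed offset is treated as lagging by its full
    end offset, which is a simplification (it ignores the log start offset).
    """
    return {p: max(end - committed.get(p, 0), 0) for p, end in end_offsets.items()}


def partitions_over_threshold(lag: dict[int, int], threshold: int) -> list[int]:
    """Partitions whose lag exceeds the alert threshold, in ascending order."""
    return sorted(p for p, value in lag.items() if value > threshold)


# Hypothetical snapshot for a three-partition topic.
end_offsets = {0: 1_500, 1: 2_000, 2: 900}
committed = {0: 1_480, 1: 1_200, 2: 900}

lag = consumer_lag(end_offsets, committed)
print(lag)                                  # {0: 20, 1: 800, 2: 0}
print(partitions_over_threshold(lag, 100))  # [1]
```

Lag that grows steadily on one partition often points to a slow or stuck consumer, while lag growing on all partitions suggests the group as a whole needs more capacity.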

While built on Kafka, IBM Event Streams adds several enterprise capabilities:

- Management Interface: A comprehensive web UI, where open-source Kafka is administered mainly from the command line

- Security Enhancements: Advanced authentication and authorization features

- Operational Tools: Simplified administration and monitoring

- Support Services: Enterprise-grade support and SLAs

- Integration Ecosystem: Pre-built connections with IBM and partner solutions

Compared with other commercial Kafka offerings, Event Streams emphasizes:

- Deployment Flexibility: Options for cloud, on-premises, and hybrid environments

- Enterprise Integration: Particularly strong integration with existing IBM ecosystems

- Security Depth: Enhanced security features for regulated industries

- Cloud Pak Architecture: Part of IBM’s containerized software strategy

- Global Support: IBM’s worldwide support infrastructure

IBM Event Streams offers flexible deployment approaches:

- IBM Cloud: Fully managed service with pay-as-you-go pricing

- Cloud Pak for Integration: Part of IBM’s comprehensive integration platform

- OpenShift Deployment: Run on Red Hat’s Kubernetes platform on any infrastructure

- Hybrid Implementations: Connect cloud and on-premises instances

For organizations implementing Event Streams, a typical approach includes:

- Use Case Identification: Define specific event streaming requirements

- Proof of Concept: Implement a focused solution for validation

- Architecture Design: Create a comprehensive design for production

- Initial Deployment: Roll out the platform with core functionality

- Expansion Plan: Gradually bring additional systems and applications onboard

IBM provides comprehensive support for Event Streams adoption:

- Official Documentation: Detailed technical guides and references

- Developer Centers: Resources specific to application development

- Training Programs: Courses covering implementation and administration

- Community Forums: Connect with other users and experts

- IBM Support: Enterprise-level technical assistance

The event streaming ecosystem continues to advance with:

- AI Integration: Combining streaming data with machine learning for real-time intelligence

- Edge Processing: Moving event processing closer to data sources

- Event Mesh Architectures: Creating global event networks spanning diverse environments

- Streaming SQL: More sophisticated query capabilities for event processing

- Industry Standards: Evolving open standards for event-driven systems

IBM Event Streams represents a powerful platform for organizations looking to implement enterprise-grade event streaming capabilities. By combining the innovation of Apache Kafka with IBM’s enterprise expertise in security, reliability, and integration, it provides a robust foundation for building real-time data pipelines and event-driven applications.

As businesses continue to prioritize agility, responsiveness, and data-driven decision making, the ability to process and act on events as they happen becomes increasingly critical. Whether you’re modernizing legacy systems, implementing microservices architectures, analyzing IoT data, or creating responsive customer experiences, IBM Event Streams offers the scalable, secure infrastructure needed to succeed with event streaming in complex enterprise environments.

With the capabilities, patterns, and best practices described in this article, organizations can use IBM Event Streams to build resilient, scalable event-streaming solutions that turn raw data into actionable insights and improve operational efficiency and customer engagement.
