Red Hat AMQ Streams: Enterprise Kafka for the Modern Containerized World

Organizations increasingly need to process, analyze, and respond to large volumes of data in real time. Event-driven architectures address this need by letting businesses build responsive systems that handle high data volumes while remaining agile and scalable. Apache Kafka, a distributed streaming platform, has become central to how enterprises handle streaming data. Red Hat AMQ Streams builds on it, offering a Kafka-based messaging solution engineered for enterprise Kubernetes environments. This article explores how AMQ Streams supports enterprise messaging and helps organizations build robust, scalable event-driven systems in containerized environments.

Before diving into AMQ Streams specifically, it’s important to understand the foundational technologies and architectural patterns it builds upon.

Event-driven architecture (EDA) is an approach to software design where systems respond to events as they occur, rather than following a traditional request-response pattern. This model enables loose coupling between components, enhanced scalability, and improved responsiveness—critical qualities for modern distributed applications.

Apache Kafka has emerged as the de facto standard platform for implementing EDA. Originally developed at LinkedIn and later open-sourced, Kafka provides a distributed commit log that can handle massive volumes of events with high throughput and low latency. Its key capabilities include:

- Publishing and subscribing to streams of records

- Storing streams of records in a fault-tolerant, durable manner

- Processing streams as they occur
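The three capabilities above can be pictured with a toy, single-process model of a commit log. This is only a sketch: the `TopicLog` class and its method names are invented for illustration and are not part of any Kafka client API. It shows records being appended with increasing offsets, kept after they are read, and consumed independently by different consumer groups.

```python
from collections import defaultdict


class TopicLog:
    """Toy single-partition log: records are appended and never modified."""

    def __init__(self):
        self._records = []                  # stand-in for durable storage
        self._next_offset = defaultdict(int)  # consumer group -> next offset to read

    def publish(self, value):
        """Append a record and return the offset it was stored at."""
        self._records.append(value)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return unread records for a group and advance that group's offset."""
        start = self._next_offset[group]
        batch = self._records[start:start + max_records]
        self._next_offset[group] = start + len(batch)
        return batch


log = TopicLog()
for event in ("order-created", "order-paid", "order-shipped"):
    log.publish(event)

billing = log.poll("billing")                 # reads all three records
audit_first = log.poll("audit", max_records=2)  # an independent group starts at offset 0
audit_rest = log.poll("audit")                # and continues where it left off
```

Because reading does not remove records, a new consumer group can be added later and still process the full history that is retained.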

While Kafka offers powerful capabilities, deploying and managing it at enterprise scale—particularly in containerized environments—introduces significant complexity. This is where Red Hat AMQ Streams enters the picture, providing a Kubernetes-native Kafka solution with enterprise support and integration.

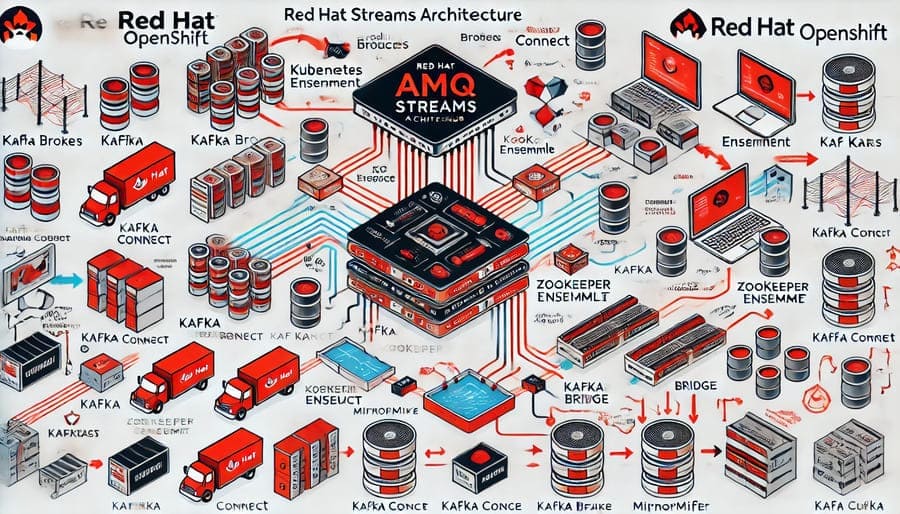

Red Hat AMQ Streams is an enterprise-grade distribution of Apache Kafka that runs natively on Red Hat OpenShift, the company's enterprise Kubernetes platform. It forms part of the broader Red Hat AMQ family, which provides messaging solutions for a variety of use cases.

At its core, AMQ Streams leverages the Strimzi operator to provide Kubernetes-native lifecycle management for Apache Kafka. This operator-based approach automates many complex operational tasks, making it significantly easier to deploy, configure, upgrade, and manage Kafka clusters in containerized environments.

Key aspects of AMQ Streams include:

- Kubernetes-native experience: Deploy and manage Kafka using Kubernetes custom resources

- Enterprise support: Backed by Red Hat’s extensive support and certification programs

- Automated operations: Simplified cluster deployment, scaling, and management

- Comprehensive security: Integration with enterprise security frameworks

- Full Kafka ecosystem: Support for Kafka Connect, Kafka MirrorMaker, and Kafka Bridge

- Observability: Built-in monitoring and metrics integration

By combining the powerful capabilities of Apache Kafka with the operational benefits of Kubernetes operators, AMQ Streams creates a compelling solution for organizations looking to implement event streaming in enterprise environments.

Central to AMQ Streams is its adoption of the Kubernetes operator pattern. The Strimzi operator serves as the control plane that manages Kafka deployments:

- Custom Resource Definitions (CRDs): Define Kafka-specific resources in Kubernetes

- Controllers: Watch for changes to custom resources and reconcile the actual state with the desired state

- Automated operations: Handle routine tasks like configuration, upgrades, and scaling

- Kafka-aware intelligence: Apply Kafka best practices automatically

This operator-based approach transforms Kafka administration from manually managing individual components to declaring the desired state in Kubernetes and letting the operator handle the implementation details.
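The reconcile idea described above can be sketched in a few lines. This is a simplified illustration of the operator pattern in general, not the Strimzi implementation; the state keys (`replicas`, `version`), the action names, and the version strings are hypothetical.

```python
def reconcile(desired, actual):
    """Compare desired and actual state and return the actions still needed."""
    actions = []
    if actual.get("replicas", 0) != desired["replicas"]:
        actions.append(("scale_to", desired["replicas"]))
    if actual.get("version") != desired["version"]:
        actions.append(("rolling_upgrade", desired["version"]))
    return actions


def apply_action(actual, action):
    """Return a copy of the actual state after one action has completed."""
    kind, value = action
    updated = dict(actual)
    if kind == "scale_to":
        updated["replicas"] = value
    elif kind == "rolling_upgrade":
        updated["version"] = value
    return updated


desired = {"replicas": 3, "version": "3.7.0"}   # what the custom resource declares
actual = {"replicas": 1, "version": "3.6.0"}    # what is currently running

plan = reconcile(desired, actual)
for action in plan:
    actual = apply_action(actual, action)
```

Once the actual state matches the declaration, `reconcile` returns an empty plan, which is why rerunning the loop is safe.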

A typical AMQ Streams deployment includes several core components:

- Kafka Brokers: The backbone of the system, handling message storage and delivery

- ZooKeeper Ensemble: Manages cluster coordination and broker metadata (newer Kafka releases replace ZooKeeper with KRaft controllers)

- Kafka Connect: Framework for integrating with external systems

- Kafka MirrorMaker: Replicates data between Kafka clusters

- Kafka Bridge: Provides RESTful API access to Kafka topics

- Topic Operator: Manages Kafka topics as Kubernetes resources

- User Operator: Manages Kafka users, including their credentials and access rights, as Kubernetes resources

These components work together to provide a complete event streaming platform that can be deployed, managed, and scaled using standard Kubernetes tooling.

AMQ Streams transforms Kafka operation through deep Kubernetes integration:

- Declarative Configuration: Define entire Kafka clusters as Kubernetes resources

- Rolling Updates: Zero-downtime upgrades and configuration changes

- Scaling: Adjust capacity declaratively by changing replica counts, with Cruise Control available to rebalance partitions afterward

- Self-Healing: Automatic recovery from failures

- Resource Management: Optimize CPU and memory utilization

- Storage Management: Leverage Kubernetes storage classes for persistence
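To make the declarative configuration idea concrete, here is a sketch that builds a `Kafka` custom resource as a Python dictionary and prints it as JSON. The field names follow the Strimzi `kafka.strimzi.io/v1beta2` schema for ZooKeeper-based clusters as I understand it; KRaft-based releases describe brokers through node pools instead, so check the layout against the version you have installed. The cluster name, sizes, and the helper function are assumptions.

```python
import json


def kafka_cluster_manifest(name, replicas=3, storage_size="100Gi"):
    """Build a Kafka custom resource as a plain dict (illustrative values)."""
    storage = {"type": "persistent-claim", "size": storage_size, "deleteClaim": False}
    replication = min(3, replicas)
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "Kafka",
        "metadata": {"name": name},
        "spec": {
            "kafka": {
                "replicas": replicas,
                "listeners": [
                    # Internal listener with TLS encryption enabled.
                    {"name": "tls", "port": 9093, "type": "internal", "tls": True},
                ],
                "config": {
                    "default.replication.factor": replication,
                    "min.insync.replicas": max(1, replication - 1),
                },
                "storage": storage,
            },
            "zookeeper": {"replicas": 3, "storage": storage},
            # Empty objects enable the Topic and User Operators with defaults.
            "entityOperator": {"topicOperator": {}, "userOperator": {}},
        },
    }


manifest = kafka_cluster_manifest("my-cluster")
print(json.dumps(manifest, indent=2))
```

Kubernetes tooling accepts JSON as well as YAML, so the printed document could be piped to `oc apply -f -` once the operator is installed.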

For organizations with strict security requirements:

- TLS Encryption: Secure communication between all components

- Authentication: Support for SCRAM-SHA, OAuth, mTLS, and more

- Authorization: Fine-grained access control to topics and operations

- Kafka ACLs: Kafka-native security integrated with enterprise systems

- Secrets Management: Secure handling of credentials and certificates

- Red Hat Identity Management: Integration with enterprise identity systems
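As an illustration of authentication and authorization managed as resources, the sketch below builds a `KafkaUser` for a consuming application with SCRAM-SHA-512 credentials and read-only ACLs. The structure follows the Strimzi `v1beta2` schema as I understand it; the `operations` list in particular depends on the operator version (older releases used a single `operation` field), and all names here are hypothetical.

```python
def consumer_user_manifest(username, cluster, topic, group):
    """Build a KafkaUser that may read one topic as part of one consumer group."""
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "KafkaUser",
        "metadata": {
            "name": username,
            # The label ties the user to the Kafka cluster that should own it.
            "labels": {"strimzi.io/cluster": cluster},
        },
        "spec": {
            "authentication": {"type": "scram-sha-512"},
            "authorization": {
                "type": "simple",
                "acls": [
                    {
                        "resource": {"type": "topic", "name": topic, "patternType": "literal"},
                        "operations": ["Describe", "Read"],
                    },
                    {
                        # Consumers also need Read on their consumer group.
                        "resource": {"type": "group", "name": group, "patternType": "literal"},
                        "operations": ["Read"],
                    },
                ],
            },
        },
    }


user = consumer_user_manifest("billing-app", "my-cluster", "orders", "billing")
```

Granting only the operations an application needs keeps a compromised credential from writing to or deleting topics.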

For robust production deployments:

- Monitoring Integration: Prometheus metrics and Grafana dashboards

- Health Checks: Proactive monitoring of component status

- Disaster Recovery: Cross-cluster replication and failover

- Backup and Restore: Tools for data protection

- Capacity Planning: Resource requirement forecasting

- Multi-tenancy: Isolation between different teams and applications
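One metric that monitoring setups commonly alert on is consumer lag, the gap between the newest offset in a partition and the offset a consumer group has committed. The sketch below shows the arithmetic only; the offsets are made up, and in practice they would come from a metrics exporter or an admin client rather than hard-coded dictionaries.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Per-partition lag: log end offset minus committed offset, never below zero."""
    return {
        partition: max(0, end - committed_offsets.get(partition, 0))
        for partition, end in end_offsets.items()
    }


def partitions_over(lag, threshold):
    """Partitions whose lag exceeds the alert threshold, in sorted order."""
    return sorted(p for p, value in lag.items() if value > threshold)


end_offsets = {0: 120, 1: 95, 2: 200}     # newest offset per partition
committed = {0: 120, 1: 80}               # partition 2 has no commit yet

lag = consumer_lag(end_offsets, committed)
alerts = partitions_over(lag, threshold=10)
```

Lag that keeps growing usually means consumers cannot keep up with producers, which is a capacity signal as much as a health signal.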

Beyond core Kafka functionality:

- Streams API Support: For building stream processing applications

- Schema Registry Integration: Ensure data compatibility

- Connect Framework: Pre-built connectors for common systems

- REST Interface: HTTP access to Kafka topics

- MirrorMaker 2: Enhanced cross-cluster replication

- Kafka Exporter: Additional metrics and monitoring
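For the REST interface, a request to the Kafka Bridge can be sketched with the standard library alone. The bridge URL and topic name below are placeholders, and the request is built but not sent; the endpoint path and content type follow the Kafka Bridge HTTP API as I understand it, so verify them against the bridge documentation for your version.

```python
import json
import urllib.request


def build_produce_request(bridge_url, topic, records):
    """Build (but do not send) an HTTP request that produces JSON records."""
    body = json.dumps({"records": records}).encode("utf-8")
    return urllib.request.Request(
        url=f"{bridge_url.rstrip('/')}/topics/{topic}",
        data=body,
        method="POST",
        headers={"Content-Type": "application/vnd.kafka.json.v2+json"},
    )


request = build_produce_request(
    "http://my-bridge.example:8080",   # hypothetical bridge address
    "orders",
    [{"key": "order-1", "value": {"status": "created"}}],
)
# urllib.request.urlopen(request) would send it to a reachable bridge.
```

This route suits clients that cannot use a native Kafka library, at the cost of an extra network hop.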

Organizations leverage AMQ Streams as the backbone for microservices architectures:

- Enabling event-driven communication between services

- Implementing the Command Query Responsibility Segregation (CQRS) pattern

- Creating event sourcing systems for complete audit trails

- Supporting service choreography through event streams

- Building resilient, loosely-coupled service architectures
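Event sourcing, mentioned in the list above, can be illustrated without any Kafka dependency: current state is derived by replaying an ordered list of events, and replaying a prefix gives the state at an earlier point. The event shapes and account names are invented for this sketch.

```python
def apply_event(balances, event):
    """Fold one event into the account balances."""
    account, amount = event["account"], event["amount"]
    current = balances.get(account, 0)
    if event["type"] == "deposited":
        balances[account] = current + amount
    elif event["type"] == "withdrawn":
        balances[account] = current - amount
    return balances


def replay(events, upto=None):
    """Rebuild state from the first `upto` events (all events if None)."""
    balances = {}
    for event in events[:upto]:
        apply_event(balances, event)
    return balances


events = [
    {"type": "deposited", "account": "a1", "amount": 100},
    {"type": "deposited", "account": "a2", "amount": 40},
    {"type": "withdrawn", "account": "a1", "amount": 30},
]

current_state = replay(events)
state_after_two = replay(events, upto=2)   # audit view at an earlier point
```

A durable, ordered log is what makes this replay possible, which is why Kafka topics are a common backing store for the pattern.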

For Internet of Things implementations:

- Processing telemetry data from thousands of connected devices

- Implementing edge-to-core data pipelines

- Enabling real-time monitoring and alerting

- Supporting digital twin architectures

- Handling intermittent connectivity through message buffering
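Buffering for intermittent connectivity can be sketched as a bounded queue on the device side that retries in order once the link returns. The `BufferedSender` class and the fake transport are hypothetical; a real device would hand readings to a Kafka producer or an edge gateway instead.

```python
from collections import deque


class BufferedSender:
    """Hold readings while the uplink is down and deliver them in order later."""

    def __init__(self, send, capacity=1000):
        self._send = send                        # callable returning True on success
        self._buffer = deque(maxlen=capacity)    # oldest readings drop when full

    def submit(self, reading):
        self._buffer.append(reading)
        return self.flush()

    def flush(self):
        while self._buffer:
            if not self._send(self._buffer[0]):
                return False                     # still offline, keep the reading
            self._buffer.popleft()
        return True


delivered = []
link = {"up": False}


def fake_send(reading):
    """Stand-in transport that succeeds only while the link is up."""
    if link["up"]:
        delivered.append(reading)
    return link["up"]


sender = BufferedSender(fake_send, capacity=3)
for temperature in (20.1, 20.4, 20.9, 21.3):
    sender.submit(temperature)                   # link is down, readings accumulate

link["up"] = True
sender.flush()
```

With a capacity of three, the oldest reading is discarded during the outage, which is the trade-off a bounded buffer makes to protect device memory.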

For immediate insights from streaming data:

- Feeding data warehouses with continuous, fresh data

- Powering real-time dashboards and visualizations

- Enabling complex event processing for pattern detection

- Supporting machine learning pipelines with current data

- Implementing fraud detection and anomaly detection systems
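A simple form of pattern detection is counting events per key in fixed time windows and flagging keys that exceed a threshold. The sketch below runs over an in-memory list rather than a live stream, and the card identifiers, window size, and threshold are made up.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window start, key) using fixed, non-overlapping windows."""
    counts = Counter()
    for timestamp, key in events:
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


def suspicious(counts, threshold):
    """Windows in which a key appears at least `threshold` times."""
    return sorted(window for window, n in counts.items() if n >= threshold)


# (timestamp in seconds, card id)
events = [
    (0, "card-1"), (12, "card-1"), (45, "card-1"),
    (50, "card-2"), (61, "card-1"), (70, "card-1"),
]

counts = tumbling_window_counts(events, window_seconds=60)
flagged = suspicious(counts, threshold=3)
```

A stream processing library would maintain the same counts incrementally as records arrive instead of scanning a list.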

As a central nervous system for enterprise data:

- Connecting legacy systems with modern applications

- Implementing Change Data Capture (CDC) from databases

- Creating ETL/ELT pipelines for data warehousing

- Supporting hybrid cloud data architectures

- Enabling event-driven integration patterns
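Change Data Capture can be pictured as a stream of row-level change events that a downstream system applies in order to keep a replica current. The event shape below is loosely modeled on Debezium-style `c`/`u`/`d` operation codes, but it is a simplification and the field names are assumptions.

```python
def apply_change(table, event):
    """Apply one change event to an in-memory replica keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("c", "u"):        # create or update: store the new row image
        table[key] = event["after"]
    elif op == "d":             # delete: drop the row if present
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")
    return table


changes = [
    {"op": "c", "key": 1, "after": {"name": "Ada", "tier": "basic"}},
    {"op": "c", "key": 2, "after": {"name": "Lin", "tier": "basic"}},
    {"op": "u", "key": 1, "after": {"name": "Ada", "tier": "gold"}},
    {"op": "d", "key": 2, "after": None},
]

replica = {}
for change in changes:
    apply_change(replica, change)
```

Ordering matters here: applying the same events in a different order could resurrect a deleted row, which is why changes for one key should stay in one partition.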

Successful implementations typically follow these principles:

- Define your event domain model: Create a logical organization of event types

- Size appropriately: Determine broker count and resource requirements

- Plan topic strategy: Design topic naming conventions and partition counts

- Consider storage options: Choose appropriate storage classes for performance and durability

- Establish monitoring approach: Define metrics, dashboards, and alerts
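For the sizing step, a common back-of-the-envelope estimate multiplies write throughput by retention time and replication factor, then adds headroom. The formula and the 30% headroom default are rules of thumb rather than Red Hat guidance, and the example workload is invented.

```python
def required_storage_gib(ingress_mib_per_s, retention_hours, replication_factor, headroom=0.3):
    """Estimate total cluster storage in GiB for a steady write rate."""
    raw_mib = ingress_mib_per_s * retention_hours * 3600 * replication_factor
    return raw_mib * (1 + headroom) / 1024


def per_broker_gib(total_gib, brokers):
    """Storage each broker needs if partitions are spread evenly."""
    return total_gib / brokers


# Example: 5 MiB/s of writes, 24 hours of retention, replication factor 3.
total = required_storage_gib(5, 24, 3)
each = per_broker_gib(total, brokers=3)
print(f"total: {total:.1f} GiB, per broker: {each:.1f} GiB")
```

For this example the estimate comes to roughly 1,645 GiB in total, or about 548 GiB per broker across three brokers. Compression, uneven partition placement, and compacted topics all shift the real number, so treat the result as a starting point.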

For growing workloads:

- Horizontal scaling: Add brokers to increase capacity

- Partition planning: Design for future growth

- Resource allocation: Size containers appropriately

- Network considerations: Account for increased traffic

- Storage scaling: Plan for expanding data volume
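Partition planning matters because records with a key are placed by hashing the key modulo the partition count, so changing the count later changes where keys land. The sketch below uses CRC32 as a stand-in hash; Kafka's default partitioner uses a different hash function, so the specific partition numbers will not match a real cluster.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a record key to a partition with hash(key) mod partition count."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


keys = [f"device-{i}" for i in range(100)]
before = {k: partition_for(k, 6) for k in keys}     # original topic layout
after = {k: partition_for(k, 12) for k in keys}     # after doubling partitions

moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys changed partition")
```

Any key that moves loses its ordering guarantee relative to records written before the change, which is the main reason to choose a partition count with future growth in mind.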

For comprehensive protection:

- Defense in depth: Implement multiple security layers

- Identity integration: Connect with enterprise authentication systems

- Network policies: Control traffic between components

- Data classification: Apply appropriate controls based on sensitivity

- Audit logging: Track access and administrative actions

When evaluating build vs. buy options:

- Operational overhead: AMQ Streams simplifies management through the operator pattern

- Kubernetes integration: Native vs. retrofitted containerization

- Support model: Enterprise support vs. community or internal support

- Total cost of ownership: Factor in operational costs beyond licensing

- Upgrade paths: Automated vs. manual approaches

For organizations considering managed services:

- Deployment flexibility: On-premises, cloud, and hybrid options

- Control and customization: Greater control with AMQ Streams

- Integration with existing systems: Often easier with on-premises deployment

- Regulatory compliance: Address data sovereignty requirements

- Cost model: Subscription-based pricing vs. consumption-based pricing

Before implementing AMQ Streams:

- OpenShift environment: Running cluster (version 4.x recommended)

- Administrative access: Privileges to create custom resources

- Resource planning: Sufficient CPU, memory, and storage

- Networking: Appropriate connectivity between components

- Security planning: Certificates, credentials, and policies

For those ready to explore AMQ Streams:

- Install the AMQ Streams Operator: Deploy via OperatorHub in OpenShift

- Create a Kafka cluster: Define the cluster configuration as a Kubernetes resource

- Configure security: Set up authentication and encryption

- Create topics: Define topics as Kubernetes resources

- Implement producers and consumers: Develop applications to use your Kafka cluster
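The "create topics" step above can be sketched as a `KafkaTopic` resource built in Python and printed as JSON. The layout follows the Strimzi `v1beta2` schema as I understand it; the topic name, cluster name, and defaults are assumptions, and the Topic Operator must be enabled for the resource to take effect.

```python
import json

SEVEN_DAYS_MS = 7 * 24 * 60 * 60 * 1000


def kafka_topic_manifest(name, cluster, partitions=3, replicas=3, retention_ms=SEVEN_DAYS_MS):
    """Build a KafkaTopic custom resource as a plain dict."""
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "KafkaTopic",
        "metadata": {
            "name": name,
            # The label tells the Topic Operator which cluster owns the topic.
            "labels": {"strimzi.io/cluster": cluster},
        },
        "spec": {
            "partitions": partitions,
            "replicas": replicas,
            "config": {"retention.ms": retention_ms},
        },
    }


topic = kafka_topic_manifest("orders", "my-cluster", partitions=12)
print(json.dumps(topic, indent=2))
```

Keeping topic definitions in version control alongside application manifests gives the same review and rollback workflow as any other Kubernetes resource.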

Red Hat provides comprehensive support for AMQ Streams adoption:

- Official documentation: Detailed guides and references

- Developer portal: Resources for building applications

- Sample code: Example implementations for common patterns

- Training courses: Formal education on AMQ and Kafka

- Support services: Access to Red Hat’s expert support team

The event streaming landscape continues to evolve with:

- Enhanced serverless integration: More seamless connections with serverless platforms

- Improved multi-cluster capabilities: Better support for global deployments

- Advanced stream processing: More sophisticated analytics within the platform

- Edge-optimized deployments: Better support for edge computing scenarios

- AI/ML integration: Closer ties with machine learning workflows

Red Hat AMQ Streams changes how enterprises deploy, manage, and scale Apache Kafka in containerized environments. By combining Kafka's event streaming capabilities with the operational benefits of Kubernetes operators, AMQ Streams lets organizations build robust, scalable event-driven architectures with far less operational overhead than self-managed deployments.

As businesses increasingly embrace event-driven patterns and microservices architectures, platforms like AMQ Streams become essential infrastructure components. Whether you’re building microservices communication channels, implementing IoT data pipelines, creating real-time analytics solutions, or modernizing legacy integration patterns, AMQ Streams provides a solid foundation that aligns with enterprise requirements for security, reliability, and scalability.

By understanding the capabilities, implementation patterns, and best practices described in this article, organizations can leverage AMQ Streams to build sophisticated event streaming solutions that drive digital transformation initiatives while maintaining the operational rigor expected in enterprise environments.
