Cloudera Data Platform: The Enterprise Data Cloud Revolutionizing Modern Data Management

In today’s data-driven world, organizations face unprecedented challenges: exponential data growth, increasingly distributed data sources, complex governance requirements, and the need to deliver insights at speed. The Cloudera Data Platform (CDP) has emerged as a comprehensive solution to these challenges, offering a unified approach to enterprise data management that spans from the edge to AI.

Traditional data architectures often create silos between on-premises infrastructure and various cloud providers, between operational and analytical workloads, and between different processing paradigms. These silos lead to inefficiencies, governance challenges, and limited ability to extract value from data.

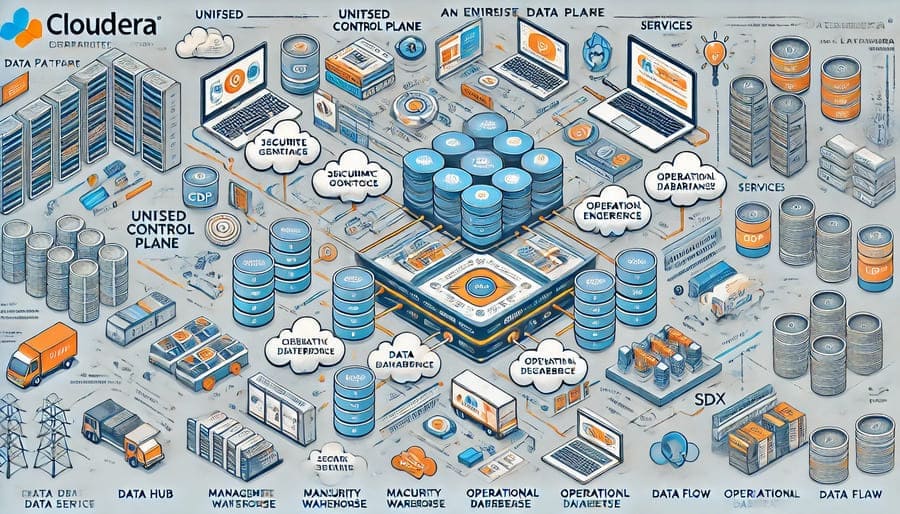

Cloudera Data Platform represents a fundamental shift in approach—an integrated data platform designed to function seamlessly across environments while supporting diverse workloads and use cases within a unified security and governance framework.

CDP is an enterprise data cloud that combines the best elements of Cloudera’s legacy Hadoop distribution (CDH) and the Hortonworks Data Platform (HDP), brought together after Cloudera and Hortonworks merged in 2019, while reimagining the architecture for modern hybrid and multi-cloud deployments.

At its core, CDP delivers:

- Hybrid & Multi-Cloud Capability: Consistent experience across on-premises, private cloud, and public cloud environments

- Complete Data Lifecycle: Support for the entire data journey from collection and enrichment to analysis and AI

- Secure & Governed: Unified security and governance across all environments and workloads

- Open Platform: Based on 100% open source technologies with open formats and APIs

- Self-Service Experience: Enabling various data practitioners to work independently while maintaining governance

Understanding CDP’s architecture helps explain its unique position in the data management landscape:

CDP features a cloud-native control plane that provides consistent management, security, and operations across all environments:

```
┌─────────────────── Cloudera Control Plane ───────────────────┐
│                                                              │
│  ┌──────────────┐   ┌──────────────┐   ┌──────────────────┐  │
│  │  Management  │   │   Security   │   │     Workload     │  │
│  │   Console    │   │ & Governance │   │     Manager      │  │
│  └──────────────┘   └──────────────┘   └──────────────────┘  │
│                                                              │
└──────────────────────────────────────────────────────────────┘
                               │
                               ▼
┌──────────────┬──────────────┬──────────────┬──────────────┐
│    Public    │    Public    │   Private    │ On-Premises  │
│ Cloud (AWS)  │ Cloud (GCP)  │    Cloud     │ Data Center  │
└──────────────┴──────────────┴──────────────┴──────────────┘
```

CDP organizes its functionality into purpose-built data services:

- Data Hub: Customizable clusters for specialized workloads

- Data Warehouse: SQL analytics for business intelligence

- Machine Learning: End-to-end ML workflows

- Data Engineering: Spark-based data processing

- Operational Database: Real-time database services

- Data Flow: Real-time streaming and edge processing

- Data Catalog: Data discovery and governance

Each service provides a tailored experience for specific personas while sharing the underlying data and governance framework.

Perhaps CDP’s most distinctive architectural element is the Shared Data Experience (SDX), which provides:

- Unified Security: Consistent authentication, authorization, and encryption

- Integrated Governance: Centralized audit, lineage, and policy management

- Common Metadata: Shared schema and metadata across services

- Consistent Replication: Coordinated data movement between environments

SDX ensures that security policies, data definitions, and governance controls follow the data regardless of where it’s stored or how it’s processed.

CDP supports multiple storage paradigms:

- Cloud Object Storage: Integration with S3, ADLS, GCS

- HDFS: For on-premises deployments

- Apache Ozone: A scalable object store (an Apache project that Cloudera contributes to and ships) for on-premises environments
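Because the same job may run against different storage layers depending on where it is deployed, code often needs to tell them apart from the path alone. The sketch below maps common Hadoop-compatible URI schemes (`s3a`, `abfs`/`abfss`, `gs`, `hdfs`, and Ozone's `ofs`/`o3fs`) to the storage options above. The `storage_backend` helper and its mapping table are hypothetical illustrations, not a CDP API, and the list is not exhaustive.

```python
from urllib.parse import urlparse

# Hypothetical, simplified mapping of Hadoop-compatible URI schemes to storage layers.
SCHEME_TO_BACKEND = {
    "s3a": "Amazon S3",
    "abfs": "Azure Data Lake Storage Gen2",
    "abfss": "Azure Data Lake Storage Gen2",
    "gs": "Google Cloud Storage",
    "hdfs": "HDFS",
    "ofs": "Apache Ozone",
    "o3fs": "Apache Ozone",
}


def storage_backend(path: str) -> str:
    """Return the storage layer implied by a path's URI scheme (illustrative helper)."""
    scheme = urlparse(path).scheme.lower()
    if not scheme:
        raise ValueError(f"path has no URI scheme: {path!r}")
    try:
        return SCHEME_TO_BACKEND[scheme]
    except KeyError:
        raise ValueError(f"unrecognized storage scheme: {scheme!r}") from None


for p in [
    "s3a://data-lake/raw/customer_data/",
    "abfss://data@account.dfs.core.windows.net/raw/",
    "hdfs://namenode:8020/warehouse/raw/",
    "ofs://ozone1/volume/bucket/raw/",
]:
    print(f"{p} -> {storage_backend(p)}")
```

Keeping the scheme-to-backend knowledge in one place means the rest of a job can stay storage-agnostic and only this lookup changes between environments.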

Data is typically organized in a lakehouse architecture:

```
┌────────────── Data Lakehouse ──────────────┐
│                                            │
│  ┌────────────┐    ┌────────────────────┐  │
│  │ Structured │    │   Data Warehouse   │  │
│  │  Storage   │───►│     Experience     │  │
│  │ (Iceberg)  │    │                    │  │
│  └────────────┘    └────────────────────┘  │
│                                            │
│  ┌────────────┐    ┌────────────────────┐  │
│  │ Data Lake  │───►│  Data Engineering  │  │
│  │  Storage   │    │     Experience     │  │
│  └────────────┘    └────────────────────┘  │
│                                            │
└────────────────────────────────────────────┘
```

CDP includes multiple processing frameworks to address different workloads:

- Apache Spark: For batch and streaming analytics

- Apache Hive: For SQL-based data warehouse workloads

- Apache Impala: For interactive SQL queries

- Apache HBase/Phoenix: For operational database workloads

- Apache NiFi: For data flow and edge processing

- Apache Kafka: For real-time messaging

CDP Machine Learning provides an end-to-end environment for data science:

```
┌───────── CDP Machine Learning Workflow ─────────┐
│                                                 │
│  ┌─────────┐     ┌─────────┐     ┌─────────┐    │
│  │ Explore │────►│  Model  │────►│ Deploy  │    │
│  │  Data   │     │ & Train │     │ & Serve │    │
│  └─────────┘     └─────────┘     └─────────┘    │
│                                                 │
│  ┌───────────────────────────────────────────┐  │
│  │           Governance & Security           │  │
│  └───────────────────────────────────────────┘  │
│                                                 │
└─────────────────────────────────────────────────┘
```

The ML Workspace provides Jupyter notebooks, collaborative projects, and model management with built-in security and reproducibility.

CDP’s security model includes:

- Authentication: Integration with enterprise identity providers

- Authorization: Role-based access control at multiple levels

- Encryption: Data encryption at rest and in transit

- Audit: Comprehensive logging and monitoring
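Authorization and audit work together: every access decision is evaluated against role-based policies and then recorded, whether it was allowed or denied. The sketch below models that flow with plain Python data structures, assuming a default-deny rule. The `Policy` and `Authorizer` shapes, role names, and resource strings are hypothetical and much simpler than Apache Ranger's actual policy model, which also supports deny rules, conditions, and resource wildcards.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Policy:
    """Hypothetical allow-policy: members of `role` may perform `actions` on `resource`."""
    role: str
    resource: str          # e.g. "db.table" (simplified: exact match, no wildcards)
    actions: frozenset


@dataclass
class Authorizer:
    policies: list
    user_roles: dict                           # user -> set of roles (from the identity provider)
    audit_log: list = field(default_factory=list)

    def is_allowed(self, user: str, action: str, resource: str) -> bool:
        roles = self.user_roles.get(user, set())
        # Default deny: access is granted only if some policy explicitly allows it.
        allowed = any(
            p.role in roles and p.resource == resource and action in p.actions
            for p in self.policies
        )
        # Every decision, allowed or denied, is appended to the audit trail.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "allowed": allowed,
        })
        return allowed


authz = Authorizer(
    policies=[
        Policy("analyst", "sales.orders", frozenset({"select"})),
        Policy("engineer", "sales.orders", frozenset({"select", "insert"})),
    ],
    user_roles={"alice": {"analyst"}, "bob": {"engineer"}},
)

print(authz.is_allowed("alice", "select", "sales.orders"))  # True
print(authz.is_allowed("alice", "insert", "sales.orders"))  # False
print(len(authz.audit_log))                                 # 2
```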

Governance capabilities feature:

- Atlas: For metadata management and lineage tracking

- Ranger: For fine-grained access policies

- Catalog: For data discovery and classification
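Lineage tracking amounts to maintaining a graph of which datasets were derived from which others, then walking it, for example to find every upstream source feeding a report. The sketch below does a breadth-first walk over a hypothetical in-memory edge list; the dataset names are made up. In Atlas itself, lineage is captured automatically by hooks in the processing engines and explored through its UI or REST API, not built by hand like this.

```python
from collections import deque

# Hypothetical lineage edges: dataset -> datasets it was derived from.
LINEAGE = {
    "catalog.database.customer_enriched": ["raw.customer_data", "reference.demographics"],
    "reports.segment_summary": ["catalog.database.customer_enriched"],
    "reference.demographics": ["external.census_extract"],
}


def upstream(dataset: str) -> list:
    """Return every dataset that `dataset` depends on, nearest first (breadth-first)."""
    seen, order = set(), []
    queue = deque(LINEAGE.get(dataset, []))
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue  # a dataset reachable by several paths is reported once
        seen.add(parent)
        order.append(parent)
        queue.extend(LINEAGE.get(parent, []))
    return order


print(upstream("reports.segment_summary"))
# ['catalog.database.customer_enriched', 'raw.customer_data',
#  'reference.demographics', 'external.census_extract']
```

Reversing the edges gives impact analysis instead: which downstream tables and reports are affected if a source changes.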

Many organizations use CDP to implement a modern data lakehouse architecture:

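```python
# Example: Accessing data from Python in a CDP Data Engineering service
from pyspark.sql import SparkSession

# Initialize a Spark session with CDP-style configuration.
# The bucket, catalog ("catalog"), database, and table names are placeholders.
spark = (
    SparkSession.builder
    .appName("CDP Data Processing")
    # Request delegation tokens for the data lake location (Spark 3 property)
    .config("spark.kerberos.access.hadoopFileSystems", "s3a://data-lake/")
    # Register an Iceberg catalog named "catalog" backed by the Hive Metastore;
    # CDP may already provide equivalent settings for your environment
    .config("spark.sql.extensions",
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions")
    .config("spark.sql.catalog.catalog", "org.apache.iceberg.spark.SparkCatalog")
    .config("spark.sql.catalog.catalog.type", "hive")
    .enableHiveSupport()
    .getOrCreate()
)

# Read raw Parquet files from the data lake
raw_data = spark.read.parquet("s3a://data-lake/raw/customer_data/")

# Keep active accounts, join demographics, and project the needed columns
enriched_data = (
    raw_data.filter("account_status = 'active'")
    .join(spark.table("reference.demographics"), "customer_id")
    .select("customer_id", "segment", "lifetime_value", "propensity_score")
)

# Write to the lakehouse structured zone as an Iceberg table for ACID commits
(
    enriched_data.writeTo("catalog.database.customer_enriched")
    .using("iceberg")
    .tableProperty("write.format.default", "parquet")
    .tableProperty("format-version", "2")  # Iceberg v2 adds row-level delete support
    .create()  # fails if the table exists; use createOrReplace() or append() on reruns
)
```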

This approach provides:

- Scalable storage with cost-effective cloud object stores

- ACID transactions through table formats like Iceberg

- SQL access for analytics users

- Unified governance across all data
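Table formats like Iceberg get ACID behavior on plain object storage by writing new data and metadata files first, then atomically swapping a single pointer to the new table snapshot; a writer whose base snapshot has gone stale must re-read and retry. The sketch below simulates only that compare-and-swap commit step in memory. `SnapshotTable`, `begin`, and `commit` are hypothetical names, and real Iceberg commits also involve manifests, conflict validation, and automatic retries.

```python
import threading


class SnapshotTable:
    """In-memory stand-in for a table whose state is a pointer to an immutable snapshot."""

    def __init__(self):
        self._lock = threading.Lock()   # stands in for the catalog's atomic pointer swap
        self.snapshots = {0: ()}        # snapshot id -> immutable tuple of data files
        self.current_id = 0

    def begin(self):
        """Return the snapshot id and file list a writer starts from."""
        return self.current_id, self.snapshots[self.current_id]

    def commit(self, base_id, new_files):
        """Publish a new snapshot only if nobody has committed since `base_id`."""
        with self._lock:
            if self.current_id != base_id:
                return False            # stale base: caller must re-read and retry
            new_id = max(self.snapshots) + 1
            self.snapshots[new_id] = self.snapshots[base_id] + tuple(new_files)
            self.current_id = new_id
            return True


table = SnapshotTable()
base_a, _ = table.begin()
base_b, _ = table.begin()
print(table.commit(base_a, ["part-a.parquet"]))  # True: first writer wins
print(table.commit(base_b, ["part-b.parquet"]))  # False: base snapshot is stale
base_b, _ = table.begin()                         # retry from the new snapshot
print(table.commit(base_b, ["part-b.parquet"]))  # True
print(table.snapshots[table.current_id])         # ('part-a.parquet', 'part-b.parquet')
```

Because earlier snapshots are never mutated, a reader that started on snapshot 1 keeps a consistent view while writers commit, which is also the basis for time-travel queries.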

Organizations with complex hybrid environments leverage CDP’s consistent architecture:

- Development and Testing: In public cloud for agility

- Production Workloads: On-premises for performance and cost control

- Disaster Recovery: In secondary cloud for resilience

- Edge Processing: At remote locations for real-time analytics

CDP’s control plane provides unified management across these environments, while SDX ensures consistent security and governance.

For real-time use cases, CDP enables end-to-end streaming pipelines:

```
┌─────────┐    ┌─────────┐    ┌─────────┐    ┌───────────┐
│  Edge   │───►│  NiFi   │───►│  Kafka  │───►│   Spark   │
│ Devices │    │  Flows  │    │ Topics  │    │ Streaming │
└─────────┘    └─────────┘    └─────────┘    └───────────┘
                                                   │
                                                   ▼
                                             ┌───────────┐
                                             │  Serving  │
                                             │   Layer   │
                                             └───────────┘
```

This architecture supports use cases like:

- Real-time fraud detection

- IoT analytics

- Customer experience optimization

- Operational monitoring
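Many of these use cases reduce to stateful aggregations over time windows, the kind of logic a Spark Streaming stage would typically run. As a framework-free illustration, the sketch below flags a card when it exceeds a transaction-count threshold within a sliding window. The event shape, the 60-second window, and the threshold of 3 are arbitrary assumptions; a production pipeline would express similar logic in Spark Structured Streaming, with watermarks to handle late-arriving events.

```python
from collections import defaultdict, deque


def flag_bursts(events, window_seconds=60, max_txns=3):
    """Yield (card_id, timestamp) whenever a card exceeds max_txns within window_seconds.

    Assumes `events` is an iterable of (timestamp_seconds, card_id) sorted by timestamp.
    """
    recent = defaultdict(deque)  # card_id -> timestamps inside the current window
    for ts, card in events:
        window = recent[card]
        window.append(ts)
        # Evict timestamps that have slid out of the (ts - window_seconds, ts] window.
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) > max_txns:
            yield card, ts


events = [
    (0, "card-1"), (10, "card-1"), (20, "card-2"),
    (30, "card-1"), (45, "card-1"),  # 4th card-1 transaction within 60 s -> flagged
    (200, "card-1"),                 # earlier transactions have expired
]
print(list(flag_bursts(events)))     # [('card-1', 45)]
```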

CDP offers flexible deployment options:

- CDP Public Cloud: Fully managed service on AWS, Azure, or Google Cloud

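- CDP Private Cloud: Software deployment on customer-managed infrastructure, including containerized data services on Kubernetes (Private Cloud Data Services)

- CDP Private Cloud Base: Traditional on-premises cluster deployment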

Most organizations implement a hybrid approach, with workloads placed according to performance, cost, and data gravity considerations.
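One way to make this placement decision explicit is to score each candidate environment on the same criteria and pick the highest total. The weighted-scoring sketch below is purely illustrative: the criteria weights, the 0-to-1 scores, and the `place` helper are invented for the example and are not a CDP feature or a recommended model.

```python
# Hypothetical weights for the placement criteria named above (they sum to 1.0).
WEIGHTS = {"performance": 0.4, "cost": 0.3, "data_gravity": 0.3}


def place(workload_scores):
    """Return (best_environment, weighted_score) from per-environment scores in [0, 1]."""
    totals = {
        env: sum(WEIGHTS[criterion] * score for criterion, score in scores.items())
        for env, scores in workload_scores.items()
    }
    best = max(totals, key=totals.get)
    return best, round(totals[best], 3)


# A nightly ETL job whose input data already lives on-premises.
nightly_etl = {
    "on_premises": {"performance": 0.9, "cost": 0.8, "data_gravity": 1.0},
    "public_cloud": {"performance": 0.7, "cost": 0.6, "data_gravity": 0.3},
}
print(place(nightly_etl))  # ('on_premises', 0.9)
```

The value of such a model lies less in the numbers than in forcing the trade-offs (especially data gravity, the cost of moving large datasets) to be stated and compared consistently across workloads.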

To maximize CDP performance:

- Right-sizing: Allocate appropriate resources for each workload

  ```yaml
  # Example CDP Data Hub sizing (illustrative and simplified;
  # not the literal Data Hub cluster definition format)
  environments:
    - name: production
      resources:
        compute:
          instanceType: m5.2xlarge
          instanceCount: 10
          autoscaling:
            enabled: true
            minInstances: 5
            maxInstances: 20
        memory:
          requestedSize: 8GB
          maxSize: 16GB
  ```

- Workload Isolation: Separate conflicting workloads
  - Interactive queries in dedicated clusters
  - Batch processing with appropriate scheduling
  - Critical workloads with reserved resources

- Data Locality: Place processing close to data
  - Co-locate compute with storage where possible
  - Use intelligent caching for frequent access
  - Implement tiered storage policies
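The autoscaling bounds in the right-sizing example (5 to 20 instances) imply a simple control rule: size the cluster to current demand, but never below the minimum or above the maximum. A minimal sketch of that clamping logic follows, assuming a hypothetical demand signal measured in pending tasks and a fixed per-instance capacity. CDP's own autoscaling is configured through the platform rather than written as code, and its actual behavior may differ.

```python
import math


def target_instances(pending_tasks, tasks_per_instance, min_instances=5, max_instances=20):
    """Return the instance count needed for current demand, clamped to [min, max].

    Hypothetical inputs: `pending_tasks` is the current backlog and
    `tasks_per_instance` is how many tasks one node can run concurrently.
    """
    if tasks_per_instance <= 0:
        raise ValueError("tasks_per_instance must be positive")
    needed = math.ceil(pending_tasks / tasks_per_instance)
    return max(min_instances, min(max_instances, needed))


print(target_instances(12, 4))   # 5  (3 nodes of demand, raised to the minimum)
print(target_instances(58, 4))   # 15
print(target_instances(200, 4))  # 20 (capped at the maximum)
```

The floor keeps warm capacity for interactive users, while the ceiling bounds cost if a runaway job floods the queue.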

Effective governance requires both technical and organizational approaches:

- Data Classification: Implement automated classification

  ```sql
  -- Example classification-driven masking policy (pseudocode only:
  -- Atlas has no SQL policy language). In practice the "PII"
  -- classification is attached to the columns in Atlas, and a Ranger
  -- tag-based masking policy masks every column carrying that tag.
  CREATE POLICY classified_data
    CLASSIFICATION "PII"
    RESOURCE DB.customer_data
    COLUMN (email, phone, address, ssn)
    MASK CUSTOM function mask_pii();
  ```

- Access Patterns: Define clear access patterns with Ranger
  - Role-based policies aligned with organizational structure
  - Tag-based policies for consistent protection
  - Row-level filtering for multi-tenant data

- Data Quality: Implement automated quality checks
  - Schema validation on ingestion
  - Statistical profiling and anomaly detection
  - Quality metrics and SLAs
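Tag-based masking and row-level filtering can be pictured as two steps applied at query time: rows are filtered by a predicate tied to the user, and columns carrying a sensitive classification are masked unless the user holds an exempt role. The sketch below shows that logic in plain Python. The tag names, the `privacy_officer` role, the `region` column, and the masking rule are hypothetical; in CDP this enforcement is performed by Ranger policies (with tags synced from Atlas), not by application code.

```python
# Hypothetical column classifications, as they might be tagged in a metadata catalog.
COLUMN_TAGS = {"email": {"PII"}, "phone": {"PII"}, "segment": set(), "region": set()}
# Hypothetical role that is exempt from PII masking.
PII_EXEMPT_ROLES = {"privacy_officer"}


def mask(value):
    """Illustrative masking rule: hide all but the last two characters."""
    s = str(value)
    return "*" * max(len(s) - 2, 0) + s[-2:]


def apply_policies(rows, roles, allowed_regions):
    """Apply a row-level region filter, then mask PII-tagged columns for non-exempt users."""
    exempt = bool(roles & PII_EXEMPT_ROLES)
    visible = []
    for row in rows:
        if row["region"] not in allowed_regions:
            continue  # row-level filter: drop rows outside the user's regions
        visible.append({
            col: val if exempt or "PII" not in COLUMN_TAGS.get(col, set()) else mask(val)
            for col, val in row.items()
        })
    return visible


rows = [
    {"email": "ann@example.com", "phone": "5550101", "segment": "gold", "region": "EU"},
    {"email": "raj@example.com", "phone": "5550102", "segment": "silver", "region": "US"},
]
# An EU analyst sees one row, with email and phone masked.
print(apply_policies(rows, roles={"analyst"}, allowed_regions={"EU"}))
```

Tagging the column once and attaching the masking rule to the tag is what lets protection follow the data: any new table whose columns receive the `PII` tag is covered without writing a new policy.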

For organizations transitioning to CDP:

- Phased Approach:
  - Start with new projects on CDP
  - Migrate workloads incrementally
  - Decommission legacy systems gradually

- Workload Assessment:
  - Catalog existing workloads and data
  - Identify dependencies and integration points
  - Prioritize based on business value and complexity

- Hybrid Bridge:
  - Implement replication between legacy and CDP
  - Use CDP’s migration tools for automated conversion
  - Validate results before cutover
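Dependencies and priorities from the workload assessment can be combined into a migration plan: a workload can only move once everything it depends on has moved, and among the workloads that are ready, those with the highest value-to-complexity ratio go first. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to produce migration waves; the workload names, dependencies, and scores are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical inventory: workload -> (business_value, complexity, dependencies)
WORKLOADS = {
    "ingest_raw": (5, 2, set()),
    "reference_data": (3, 1, set()),
    "customer_enrich": (8, 3, {"ingest_raw", "reference_data"}),
    "bi_dashboards": (9, 2, {"customer_enrich"}),
    "legacy_reports": (2, 4, {"ingest_raw"}),
}


def migration_waves(workloads):
    """Group workloads into waves; each wave depends only on earlier waves.

    Within a wave, workloads are ordered by value/complexity, highest first.
    """
    sorter = TopologicalSorter({name: deps for name, (_, _, deps) in workloads.items()})
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(
            sorter.get_ready(),
            key=lambda n: workloads[n][0] / workloads[n][1],
            reverse=True,
        )
        waves.append(ready)
        sorter.done(*ready)
    return waves


for i, wave in enumerate(migration_waves(WORKLOADS), start=1):
    print(f"wave {i}: {wave}")
# wave 1: ['reference_data', 'ingest_raw']
# wave 2: ['customer_enrich', 'legacy_reports']
# wave 3: ['bi_dashboards']
```

A `CycleError` from `prepare()` is itself useful output: it identifies workloads so intertwined that they have to be migrated and validated together.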

Many organizations consider building their data platform using native cloud services:

Advantages of CDP:

- Consistent experience across environments

- Integrated governance and security

- Reduced integration complexity

- Enterprise support and roadmap

Challenges:

- Potentially higher licensing costs

- Less fine-grained control

- Dependency on Cloudera’s roadmap

For organizations evaluating different Hadoop-based platforms:

Advantages of CDP:

- Modern cloud-native architecture

- Unified security and governance

- Comprehensive workload support

- Strong enterprise features

Considerations:

- Migration effort from other distributions

- Subscription-based pricing model

- Organizational learning curve

When comparing with cloud-native data warehouses like Snowflake:

CDP Strengths:

- Support for unstructured and semi-structured data

- Hybrid deployment options

- Open formats and standards

- Diverse processing paradigms

Warehouse Strengths:

- Simpler administration for pure analytics

- Often better pure SQL performance

- Less infrastructure management

Many organizations implement both, using CDP for data lake/lakehouse and integration with specialized warehouses for specific analytical needs.

Cloudera continues to evolve CDP with several key trends:

- Containerization: Expanded Kubernetes-native deployments

- Serverless Options: More functions-as-a-service offerings

- Managed Experiences: Simplified consumption models

- MLOps Automation: Enhanced model lifecycle management

- GPU Acceleration: Optimized infrastructure for deep learning

- Foundation Models: Integration with and fine-tuning of foundation models

- Responsible AI: Governance controls for AI/ML

- Intelligent Data Movement: Policy-driven data placement

- Active Metadata: Automated enrichment and classification

- Data Mesh Support: Tools for domain-oriented ownership

Cloudera Data Platform represents a significant evolution in enterprise data management, addressing the complex challenges of modern data environments through its unified architecture, hybrid capabilities, and integrated governance.

For organizations struggling with data silos, governance challenges, or the complexity of managing diverse data workloads across environments, CDP offers a compelling solution. Its unique combination of cloud-native flexibility and enterprise-grade security and governance enables businesses to implement sophisticated data strategies while maintaining control.

As data volumes continue to grow, regulations become more stringent, and analytical requirements more diverse, platforms like CDP that can span the entire data lifecycle while adapting to changing infrastructure realities will become increasingly valuable. Whether you’re looking to modernize legacy data infrastructure, implement a hybrid cloud strategy, or build new data-driven applications, CDP provides a comprehensive foundation for enterprise data management.
