Databricks Delta Lake: The Open-Source Storage Layer Transforming Data Lake Reliability

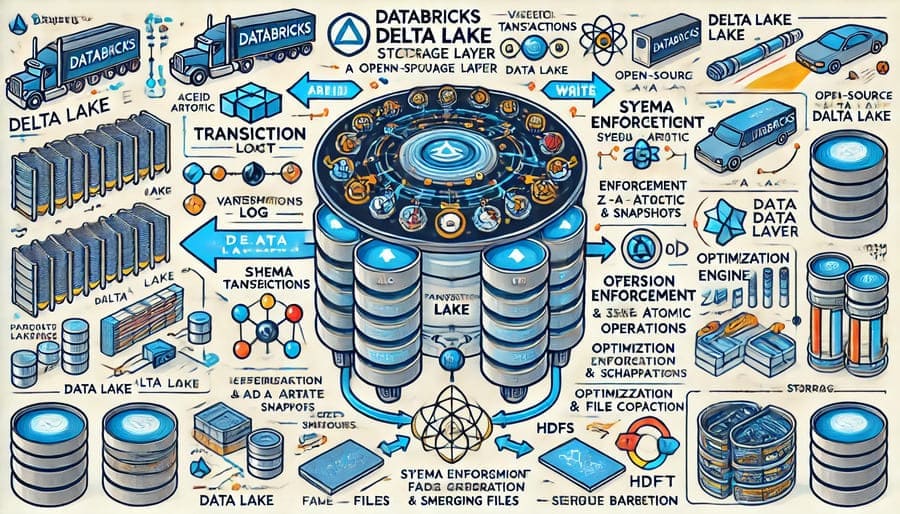

In the evolving landscape of big data, organizations face a persistent challenge: how to combine the flexibility and cost-effectiveness of data lakes with the reliability and performance traditionally associated with data warehouses. Delta Lake, an open-source project created by Databricks, emerges as a powerful solution to this dilemma by introducing a robust storage layer that brings reliability, performance, and governance to data lakes.

Traditional data lakes built on cloud storage systems like AWS S3, Azure Data Lake Storage, or Google Cloud Storage offer unparalleled scalability and cost advantages. However, they come with significant limitations that have historically prevented them from serving as a single source of truth for enterprise data:

- Lack of ACID Transactions: Without atomic operations, concurrent writes can lead to data corruption

- Schema Enforcement Challenges: No built-in mechanism to ensure data quality and consistency

- Limited Performance Optimization: Few tools for improving query speed on raw data

- Difficult Metadata Management: Tracking table history and evolution becomes complex

- No Time Travel Capabilities: Inability to access or restore previous versions of data

These challenges often force organizations to build complex ETL pipelines that move data from lakes to warehouses, creating data silos, increasing costs, and introducing latency.

Delta Lake addresses these fundamental challenges by providing an open-source storage layer that sits on top of existing data lakes, transforming them into “lakehouses” that combine the best features of data lakes and data warehouses.

┌─────────────────────────────────────────────────────┐

│ Applications │

│ (BI Tools, Machine Learning, Analytics, SQL Engines) │

└──────────────────────┬──────────────────────────────┘

│

┌──────────────────────▼──────────────────────────────┐

│ Delta Lake │

│ │

│ ┌─────────────┐ ┌───────────┐ ┌─────────────────┐ │

│ │ ACID │ │ Schema │ │ Time Travel & │ │

│ │Transactions │ │Enforcement│ │ Versioning │ │

│ └─────────────┘ └───────────┘ └─────────────────┘ │

│ │

│ ┌─────────────┐ ┌───────────┐ ┌─────────────────┐ │

│ │ Unified │ │Optimization│ │ Data Validation │ │

│ │Batch/Stream │ │ Engine │ │ & Quality │ │

│ └─────────────┘ └───────────┘ └─────────────────┘ │

└──────────────────────┬──────────────────────────────┘

│

┌──────────────────────▼──────────────────────────────┐

│ Storage Layer │

│ (S3, ADLS, GCS, HDFS, etc.) │

└─────────────────────────────────────────────────────┘

At its core, Delta Lake provides ACID (Atomicity, Consistency, Isolation, Durability) transactions through a transaction log that records all changes to the table:

# Example: Atomic operations with Delta Lake

from delta.tables import *

from pyspark.sql.functions import *

# Update operation is atomic - either all records are updated or none

deltaTable = DeltaTable.forPath(spark, "/data/sales")

deltaTable.update(

condition = "date = '2023-03-15'",

set = { "status": "'processed'" }

)

This transaction log ensures that readers always see a consistent view of the data, even if writers are concurrently modifying it. If a write operation fails midway, the table remains in its previous valid state.

Delta Lake provides two critical capabilities for schema management:

Schema Enforcement: Prevents writing malformed data that could corrupt datasets:

# Attempt to write data with incorrect schema

try:

# This will fail if the DataFrame doesn't match the target schema

df_with_wrong_schema.write.format("delta").mode("append").save("/data/sales")

except Exception as e:

print(f"Schema validation failed: {e}")

Schema Evolution: Allows tables to adapt as data models change:

# Evolve schema by adding a new column

df_with_new_column.write \

.format("delta") \

.mode("append") \

.option("mergeSchema", "true") \

.save("/data/sales")

This combination protects data integrity while allowing for necessary changes as business requirements evolve.

Delta Lake’s time travel capabilities enable accessing previous versions of data for auditing, rollbacks, or reproducible experiments:

# Query a table as it was 3 days ago

df = spark.read \

.format("delta") \

.option("timestampAsOf", "2023-03-12") \

.load("/data/sales")

# Or query by version number

df = spark.read \

.format("delta") \

.option("versionAsOf", 5) \

.load("/data/sales")

This feature is invaluable for compliance requirements, debugging issues, or running reproducible analytics on historical snapshots.

Delta Lake provides a unified solution for batch and streaming workloads, simplifying architectures that previously required separate systems:

# Write streaming data to Delta Lake

streamingDF = spark.readStream \

.format("kafka") \

.option("kafka.bootstrap.servers", "host:port") \

.option("subscribe", "sales_events") \

.load()

query = streamingDF.writeStream \

.format("delta") \

.option("checkpointLocation", "/checkpoints/sales") \

.start("/data/sales")

# Later, read the same table for batch analytics

batchDF = spark.read.format("delta").load("/data/sales")

This seamless integration eliminates the need to maintain separate data pipelines for real-time and batch analytics.

Delta Lake includes a sophisticated optimization engine that enhances query performance:

Data Skipping: Delta Lake maintains statistics about data in each file, allowing queries to skip files that can’t match filter conditions.

Z-Ordering: A multi-dimensional clustering technique that co-locates related data:

-- Optimize the table and Z-order by customer_id and date

OPTIMIZE sales

ZORDER BY (customer_id, date)

Compaction: Combines small files to improve read performance:

-- Compact small files without changing the ordering

OPTIMIZE sales

These optimizations dramatically improve query performance, especially for large-scale datasets.

Organizations use Delta Lake to implement robust data quality frameworks:

# Define quality expectations

from pyspark.sql.functions import col

def validate_sales_data(df):

validations = [

# Ensure no negative prices

(col("price") >= 0, "price_check"),

# Validate order dates

(col("order_date") <= current_date(), "date_check"),

# Ensure product ID exists

(col("product_id").isNotNull(), "product_check")

]

# Apply all validations and collect failures

for condition, check_name in validations:

failed_records = df.filter(~condition)

if failed_records.count() > 0:

# Log or handle validation failures

failed_records.write \

.format("delta") \

.mode("append") \

.save(f"/data/quality/failed_{check_name}")

return df.filter(all_conditions)

# Apply validation during ingestion

clean_df = validate_sales_data(raw_sales_df)

clean_df.write.format("delta").mode("append").save("/data/sales_validated")

This approach ensures that only quality data enters the lake, maintaining trust in downstream analytics.

Delta Lake excels at implementing CDC workflows that capture and apply changes from source systems:

# Merge changes from a CDC feed into a Delta table

from delta.tables import *

# Target table

target = DeltaTable.forPath(spark, "/data/customers")

# Source changes

source = spark.read.format("kafka").option(...).load().select(...)

# Perform merge operation (upsert)

target.alias("target") \

.merge(

source.alias("source"),

"target.customer_id = source.customer_id"

) \

.whenMatchedUpdateAll() \

.whenNotMatchedInsertAll() \

.execute()

This pattern enables efficient synchronization between operational systems and analytical stores.

Data scientists leverage Delta Lake’s capabilities to build reliable feature stores:

# Record feature generation logic with versioning

from datetime import datetime

feature_set_version = datetime.now().strftime("%Y%m%d%H%M%S")

# Generate features

customer_features = spark.sql("""

SELECT

customer_id,

sum(order_total) as total_spend_30d,

count(distinct order_id) as order_count_30d,

max(order_date) as last_order_date

FROM sales

WHERE order_date >= date_sub(current_date(), 30)

GROUP BY customer_id

""")

# Save to versioned feature store

customer_features.write \

.format("delta") \

.mode("overwrite") \

.option("overwriteSchema", "true") \

.save(f"/data/features/customer_metrics/v={feature_set_version}")

# Register current version

spark.sql(f"""

CREATE OR REPLACE VIEW customer_features

AS SELECT * FROM delta.`/data/features/customer_metrics/v={feature_set_version}`

""")

This approach ensures reproducibility and enables point-in-time feature retrieval for training.

A common pattern with Delta Lake is the “multi-hop” architecture that processes data through progressive refinement stages:

Bronze Layer (Raw) → Silver Layer (Validated) → Gold Layer (Business-Ready)

# Bronze layer - ingest raw data

raw_df.write \

.format("delta") \

.mode("append") \

.option("mergeSchema", "true") \

.save("/data/bronze/sales")

# Silver layer - cleansed and validated data

bronze_df = spark.read.format("delta").load("/data/bronze/sales")

silver_df = validate_and_transform(bronze_df)

silver_df.write \

.format("delta") \

.mode("overwrite") \

.partitionBy("date") \

.save("/data/silver/sales")

# Gold layer - business-level aggregations

spark.sql("""

CREATE TABLE IF NOT EXISTS gold.daily_sales

USING delta

AS

SELECT

date,

product_category,

region,

sum(amount) as total_sales,

count(distinct customer_id) as unique_customers

FROM silver.sales

GROUP BY date, product_category, region

""")

This approach provides clear boundaries for different data quality levels and processing stages.

To maximize Delta Lake performance:

- Right-size files: Aim for file sizes between 100MB and 1GB

-- Compact small files

OPTIMIZE sales

WHERE date > '2023-01-01'

- Choose appropriate partitioning: Partition by columns used in common filters

df.write \

.format("delta") \

.partitionBy("year", "month") \

.save("/data/sales")

- Apply Z-ordering: For frequently queried columns that aren’t partition keys

OPTIMIZE sales

ZORDER BY (customer_id, product_id)

- Manage table history: Set retention policies to control storage costs

ALTER TABLE sales

SET TBLPROPERTIES ('delta.logRetentionDuration' = 'interval 30 days')

Delta Lake supports various data modeling techniques:

Dimensional Modeling:

-- Fact table

CREATE TABLE sales_facts (

sale_id BIGINT,

product_key BIGINT,

customer_key BIGINT,

date_key INT,

quantity INT,

amount DECIMAL(10,2)

) USING delta

PARTITIONED BY (date_key)

-- Dimension table

CREATE TABLE product_dim (

product_key BIGINT,

product_id STRING,

product_name STRING,

category STRING,

brand STRING,

...

) USING delta

Data Vault:

-- Hub table

CREATE TABLE customer_hub (

customer_hub_key BIGINT,

customer_id STRING,

load_date TIMESTAMP,

record_source STRING

) USING delta

-- Satellite table

CREATE TABLE customer_sat (

customer_hub_key BIGINT,

load_date TIMESTAMP,

record_source STRING,

customer_name STRING,

email STRING,

...

) USING delta

The choice depends on your specific requirements for history tracking, query patterns, and data integration needs.

Delta Lake works seamlessly with the broader data engineering ecosystem:

dbt Core:

# dbt model definition

models:

- name: sales_daily

config:

materialized: incremental

file_format: delta

incremental_strategy: merge

unique_key: [date_key, product_id]

Apache Airflow:

# Airflow DAG with Delta Lake operations

from airflow import DAG

from airflow.providers.apache.spark.operators.spark_submit import SparkSubmitOperator

with DAG("delta_etl_pipeline", ...) as dag:

process_sales = SparkSubmitOperator(

task_id="process_sales",

application="/path/to/delta_etl.py",

conn_id="spark_conn",

application_args=["--date", "{{ ds }}"]

)

Terraform:

# Infrastructure as code for Delta Lake storage

resource "aws_s3_bucket" "delta_lake_storage" {

bucket = "company-delta-lake"

acl = "private"

versioning {

enabled = true

}

lifecycle_rule {

id = "log-retention"

enabled = true

expiration {

days = 90

}

}

}

Delta Lake can be deployed in various environments:

- Databricks: Native integration with optimized performance

- Self-managed Spark: On any Spark 2.4.2+ cluster

- Amazon EMR: As part of managed Spark infrastructure

- Azure Synapse Analytics: Native Delta Lake support

- Google Dataproc: With Delta Lake libraries installed

As an open-source project (Apache License 2.0), Delta Lake has fostered a vibrant ecosystem:

- Integration with Delta Sharing: For secure data sharing across organizations

- Connectors for BI Tools: PowerBI, Tableau, and others

- Language Support: Beyond Spark, with readers for Python, R, and more

- Community Extensions: For specialized use cases and integrations

The project is governed by the Linux Foundation, ensuring vendor-neutral development.

When comparing Delta Lake to alternatives:

Delta Lake vs. Apache Hudi:

- Both offer ACID transactions and time travel

- Delta Lake provides simpler APIs and tighter Spark integration

- Hudi offers more granular record-level operations

- Delta Lake typically has better query performance

Delta Lake vs. Apache Iceberg:

- Both focus on table management and ACID guarantees

- Delta has better streaming integration

- Iceberg has stronger partition evolution capabilities

- Delta offers more optimization features

Many organizations evaluate these technologies based on their specific use cases and existing infrastructure.

Delta Lake continues to evolve with several exciting developments:

- Improved Change Data Feed: For downstream processing of table changes

- Enhanced Vectorized Query Execution: For greater performance

- Advanced Indexing Capabilities: Beyond Z-ordering

- Expanded Ecosystem Integrations: With more query engines and tools

Delta Lake represents a significant advancement in data lake technology, addressing the fundamental reliability and performance challenges that have historically limited data lake adoption for critical enterprise workloads. By providing ACID transactions, schema enforcement, optimization capabilities, and time travel, it transforms simple object storage into a powerful lakehouse platform.

For organizations struggling with data silos, complex ETL pipelines, or the reliability limitations of traditional data lakes, Delta Lake offers a compelling solution. Its open-source nature ensures vendor neutrality and community innovation, while its integration with the broader data ecosystem makes adoption straightforward.

As data volumes continue to grow and analytics become increasingly critical to business success, technologies like Delta Lake that can provide reliability without sacrificing flexibility will play an increasingly important role in modern data architecture. Whether you’re building a new analytics platform or enhancing an existing data lake, Delta Lake deserves serious consideration as a foundational component of your data strategy.

Hashtags: #DeltaLake #DataLakehouse #ACID #OpenSource #DataEngineering #ApacheSpark #DataGovernance #TimeTravel #BigData #DataLake