Fluentd: The Open-Source Data Collector Revolutionizing Unified Logging for Data Engineering

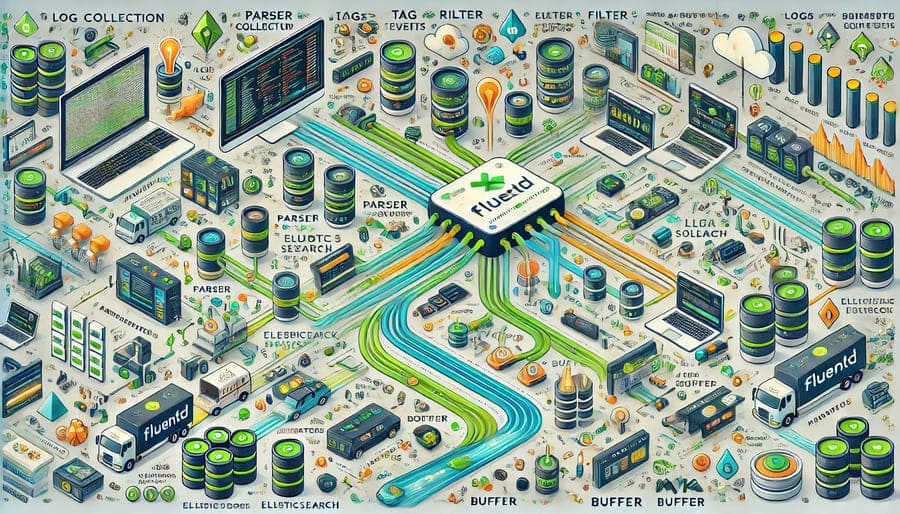

In modern data infrastructure, one challenge remains constant: collecting, processing, and routing large volumes of log data from diverse sources. Fluentd is an open-source data collector built to solve that problem, and it has changed how many organizations approach unified logging.

Born in 2011 at Treasure Data (later acquired by Arm), Fluentd emerged as a solution to a universal data engineering problem: how to handle log data from multiple sources in different formats and direct it seamlessly to various destinations. Created by Sadayuki “Sada” Furuhashi, Fluentd was designed with the philosophy of creating a unified logging layer between data sources and storage destinations.

Recognized by the Cloud Native Computing Foundation (CNCF) as a graduated project in 2019, Fluentd stands alongside Kubernetes and Prometheus as one of the essential tools in the cloud-native ecosystem. This recognition highlights its importance, stability, and widespread adoption.

What sets Fluentd apart is an architecture built around the concept of a “unified logging layer”, which rests on three ideas: tag-based routing, a plugin system, and a common event structure.

At the heart of Fluentd’s design is its tag-based routing system. Every log event in Fluentd has a tag that identifies its origin and helps determine how it should be processed and where it should be sent.

For example, a web server access log might have the tag apache.access, while a database error log might be tagged as mysql.error. These tags then drive the routing decisions, making it possible to handle different data types appropriately.
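
The matching rules can be sketched in plain Ruby. This is a simplified illustration rather than Fluentd’s own matcher: it assumes that `*` matches exactly one tag part, that `**` matches zero or more parts, and that the first matching rule wins. The `ROUTES` table and its destination names are hypothetical.

```ruby
# Simplified tag matcher: "*" matches one tag part, "**" matches zero or more.
def tag_match?(pattern_parts, tag_parts)
  return tag_parts.empty? if pattern_parts.empty?

  head, *rest = pattern_parts
  case head
  when '**'
    # Let "**" absorb 0..n leading parts and try to match the remainder.
    (0..tag_parts.length).any? { |n| tag_match?(rest, tag_parts.drop(n)) }
  when '*'
    !tag_parts.empty? && tag_match?(rest, tag_parts.drop(1))
  else
    tag_parts.first == head && tag_match?(rest, tag_parts.drop(1))
  end
end

# Hypothetical routing table, evaluated top to bottom.
ROUTES = [
  ['apache.access', :elasticsearch],
  ['mysql.*',       :kafka],
  ['**',            :archive]
].freeze

def route(tag)
  rule = ROUTES.find { |pattern, _dest| tag_match?(pattern.split('.'), tag.split('.')) }
  rule && rule.last
end

puts route('apache.access')  # elasticsearch
puts route('mysql.error')    # kafka
puts route('nginx.error')    # archive
```

Because rules are checked in order, the catch-all `**` entry sits last; placing it first would shadow every more specific pattern.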

Fluentd’s extensibility comes from its plugin system, with over 1000 community-contributed plugins spread across several plugin types:

- Input plugins: Collect logs from various sources (files, syslog, HTTP, etc.)

- Parser plugins: Convert raw log data into structured records

- Filter plugins: Modify, enrich, or drop log events

- Output plugins: Send processed logs to storage systems, analytics platforms, or other services

- Buffer plugins: Store events temporarily to handle backpressure and ensure reliability

This modular design means Fluentd can adapt to virtually any logging scenario without modifying the core codebase.
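
To make the division of labor concrete, here is a minimal sketch in which each stage is a plain Ruby lambda standing in for a plugin type. Nothing here uses Fluentd’s real plugin API; the log line format, the `service` field, and the names `PARSER`, `FILTER`, and `run_pipeline` are assumptions made for illustration.

```ruby
# Parser stage: turn a raw line such as "INFO order placed" into a record.
PARSER = lambda do |line|
  m = /\A(?<level>[A-Z]+) (?<message>.*)\z/.match(line)
  m && { 'level' => m[:level], 'message' => m[:message] }
end

# Filter stage: drop noisy events and enrich the rest.
FILTER = lambda do |record|
  return nil if record['level'] == 'DEBUG'
  record.merge('service' => 'checkout')
end

# Input is an array of lines; output is any callable that accepts a record.
def run_pipeline(lines, parser:, filter:, output:)
  lines.each do |line|
    record = parser.call(line)
    next unless record # line could not be parsed

    record = filter.call(record)
    next unless record # event was dropped by the filter

    output.call(record)
  end
end

delivered = []
run_pipeline(['INFO order placed', 'DEBUG cache hit', 'garbage'],
             parser: PARSER, filter: FILTER, output: ->(r) { delivered << r })
p delivered
```

Only the first line reaches the output: the second is dropped by the filter and the third never parses. Swapping any one stage leaves the others untouched, which is the property the plugin model is built around.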

Internally, Fluentd represents every event record as structured key-value data that maps directly to JSON (events are serialized with MessagePack for efficiency), providing a consistent representation regardless of the original source format. This standardization simplifies downstream processing and makes it easier to work with heterogeneous data sources.

A typical event in Fluentd consists of:

- Time: When the event occurred

- Tag: Where the event came from

- Record: The actual log data in JSON format
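
The three parts of an event can be modeled with a small Ruby struct. This is an illustrative sketch, not Fluentd’s internal class; the `Event` struct, the `event_to_json` helper, and the sample field values are assumptions.

```ruby
require 'json'

# An event is a (tag, time, record) triple.
Event = Struct.new(:tag, :time, :record)

event = Event.new(
  'apache.access',
  Time.utc(2024, 1, 15, 10, 30, 45),
  { 'method' => 'GET', 'path' => '/index.html', 'status' => 200 }
)

# Serialize the event with the time as Unix epoch seconds.
def event_to_json(event)
  JSON.generate({ 'tag' => event.tag, 'time' => event.time.to_i, 'record' => event.record })
end

puts event_to_json(event)
```

Keeping the tag and time outside the record means routing and time-based chunking never need to inspect the payload itself.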

For data engineering teams, Fluentd serves as a critical component of the logging infrastructure in three areas: data pipelines, containerized environments, and cloud deployments.

Fluentd excels at feeding log data into data processing pipelines:

- Stream data into Kafka for real-time analytics

- Load logs into Elasticsearch for search and visualization

- Send data to cloud storage (S3, GCS, Azure Blob) for archival

- Forward to big data systems like Hadoop or Spark for batch processing

- Expose metrics for Prometheus to scrape, or send them to monitoring services like Datadog

In containerized environments, Fluentd is a common choice for log collection:

- DaemonSet deployment ensures logs are collected from every node

- Kubernetes metadata enrichment adds context to container logs

- Multi-tenancy support for handling logs from multiple applications

- Seamless integration with the broader Kubernetes ecosystem

For cloud-based data infrastructure, Fluentd offers:

- Lightweight resource footprint suitable for cloud instances

- Plugins for AWS, Google Cloud, and Azure services

- Scalability to handle elastic workloads

- Built-in high availability features for production use

Fluentd is built for production workloads:

- Written in C and Ruby for performance and flexibility

- Asynchronous buffering to handle spikes in log volume

- Automatic retries for failed transmissions

- Memory and file-based buffering options

- At-least-once delivery semantics for critical data when acknowledgements are enabled (for example, require_ack_response in the forward output)
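
The interplay of buffering and retries can be sketched with a toy buffered output. This is not Fluentd’s buffer implementation; the `BufferedOutput` class and its chunking by event count are simplifying assumptions. The point it illustrates is that a chunk leaves the queue only after a successful write.

```ruby
# Toy buffered output: events are staged into chunks, and a chunk leaves the
# queue only after the writer succeeds, so a failed flush is retried later.
class BufferedOutput
  attr_reader :queue

  def initialize(chunk_size, &writer)
    @chunk_size = chunk_size
    @writer = writer
    @staged = []
    @queue = []
  end

  def emit(event)
    @staged << event
    return unless @staged.size >= @chunk_size

    @queue << @staged
    @staged = []
  end

  # Returns true when every queued chunk was written, false on the first failure.
  def flush
    until @queue.empty?
      begin
        @writer.call(@queue.first)
      rescue StandardError
        return false # keep the chunk queued for the next attempt
      end
      @queue.shift
    end
    true
  end
end

delivered = []
attempts = 0
out = BufferedOutput.new(2) do |chunk|
  attempts += 1
  raise IOError, 'destination unavailable' if attempts == 1 # simulated outage
  delivered.concat(chunk)
end

%w[a b c d].each { |e| out.emit(e) }
puts out.flush  # false
puts out.flush  # true
p delivered     # ["a", "b", "c", "d"]
```

Retrying a chunk whose write actually succeeded but whose acknowledgement was lost would deliver it twice, which is why this style of delivery is at-least-once rather than exactly-once.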

As data schemas evolve, Fluentd can adapt:

- Schema-agnostic processing accommodates changing field structures

- Field transformation capabilities for evolving schemas

- Conditional processing based on the presence of specific fields

- Default value insertion for backward compatibility
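
A minimal sketch of these ideas in plain Ruby follows. The `DEFAULTS` and `RENAMES` tables, the field names, and the `normalize` helper are hypothetical; in Fluentd itself this kind of logic would typically live in a filter.

```ruby
# Hypothetical defaults and renames for an evolving record schema.
DEFAULTS = { 'environment' => 'unknown', 'schema_version' => 1 }.freeze
RENAMES  = { 'usr' => 'user' }.freeze

def normalize(record)
  # Field transformation: map old field names onto their current names.
  renamed = record.to_h { |key, value| [RENAMES.fetch(key, key), value] }
  # Default insertion: existing values win, missing fields get defaults.
  normalized = DEFAULTS.merge(renamed)
  # Conditional processing: derive a flag only when the optional field exists.
  normalized['has_trace'] = true if normalized.key?('trace_id')
  normalized
end

p normalize({ 'usr' => 'alice', 'trace_id' => 'abc123' })
p normalize({ 'user' => 'bob', 'environment' => 'production' })
```

Records from old and new producers come out with the same field names, so downstream consumers need only one schema.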

Beyond simple collection, Fluentd enables sophisticated processing:

- Record transformation for normalizing fields

- Filtering and sampling for high-volume logs

- Aggregation and summarization of events

- Enrichment with additional contextual data

- Regular expression pattern matching for extraction and rewriting
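
Two of these techniques, sampling and regular-expression extraction, are easy to sketch in plain Ruby. The `IntervalSampler` class, the `REQUEST_PATTERN` message format, and the `extract` helper are assumptions made for this example, not part of any Fluentd plugin.

```ruby
# Keep every Nth event per tag (simple interval sampling).
class IntervalSampler
  def initialize(interval)
    @interval = interval
    @counts = Hash.new(0)
  end

  def keep?(tag)
    @counts[tag] += 1
    (@counts[tag] % @interval).zero?
  end
end

# Pull structured fields out of a free-text message with named captures.
REQUEST_PATTERN = /\A(?<method>GET|POST|PUT|DELETE) (?<path>\S+) took (?<ms>\d+)ms\z/

def extract(message)
  m = REQUEST_PATTERN.match(message)
  return nil unless m

  { 'method' => m[:method], 'path' => m[:path], 'duration_ms' => m[:ms].to_i }
end

sampler = IntervalSampler.new(3)
kept = (1..9).select { sampler.keep?('app.access') }
p kept  # [3, 6, 9]
p extract('GET /orders took 42ms')
```

Counting per tag keeps a chatty source from starving a quiet one: each tag is sampled at the same rate regardless of the others.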

Example configuration for parsing and enriching database logs:

    <source>
      @type tail
      path /var/log/mysql/mysql-slow.log
      # Assumed location; in_tail uses this file to remember its read position
      pos_file /var/log/fluentd/mysql-slow.log.pos
      tag mysql.slow
      <parse>
        @type multiline
        format_firstline /^# Time:/
        # format1..formatN are joined into a single regex, so each header
        # pattern ends by consuming its line break instead of anchoring with $
        format1 /# Time: (?<time>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d+Z)\s*\n/
        format2 /# User@Host: (?<user>\w+)\[(?<client_user>\w+)\] @ (?<host>[^ ]*) \[(?<ip>[^\]]*)\]\s*\n/
        format3 /# Query_time: (?<query_time>\d+\.\d+)\s*Lock_time: (?<lock_time>\d+\.\d+)\s*Rows_sent: (?<rows_sent>\d+)\s*Rows_examined: (?<rows_examined>\d+)\s*\n/
        format4 /^(?<query>[\s\S]*?);\s*$/
      </parse>
    </source>

    <filter mysql.slow>
      @type record_transformer
      # Needed for the Ruby expressions below (method calls, arithmetic)
      enable_ruby true
      <record>
        environment ${hostname.split('.')[0]}
        db_type mysql
        query_time_ms ${record["query_time"].to_f * 1000}
        slow_query ${record["query_time"].to_f > 1.0}
      </record>
    </filter>

    <match mysql.slow>
      @type copy
      <store>
        @type elasticsearch
        host elasticsearch.internal
        port 9200
        logstash_format true
        logstash_prefix mysql-slow
      </store>
      <store>
        @type kafka2
        brokers kafka-broker1:9092,kafka-broker2:9092
        default_topic mysql-slow-queries
        <format>
          @type json
        </format>
      </store>
    </match>
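
Multiline patterns are easy to get wrong, so it helps to try them outside Fluentd first. The sketch below reuses the capture groups from the parse section, joined into one multi-line Ruby regex with each header line ending in an explicit line break, and then applies the same arithmetic as the record_transformer filter. The sample log entry is hand-written for this example, and real slow-log entries may carry extra fields that these patterns do not cover.

```ruby
# Same named captures as the <parse> section, joined into one multi-line regex.
SLOW_QUERY = Regexp.new(
  [
    '# Time: (?<time>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d+Z)\s*\n',
    '# User@Host: (?<user>\w+)\[(?<client_user>\w+)\] @ (?<host>[^ ]*) \[(?<ip>[^\]]*)\]\s*\n',
    '# Query_time: (?<query_time>\d+\.\d+)\s*Lock_time: (?<lock_time>\d+\.\d+)\s*' \
    'Rows_sent: (?<rows_sent>\d+)\s*Rows_examined: (?<rows_examined>\d+)\s*\n',
    '^(?<query>[\s\S]*?);\s*$'
  ].join,
  Regexp::MULTILINE
)

# Hypothetical slow-log entry used only to exercise the pattern.
SAMPLE_ENTRY = <<~'LOG'
  # Time: 2024-01-15T10:30:45.123456Z
  # User@Host: app[app] @ db-client [10.0.0.5]
  # Query_time: 2.500000  Lock_time: 0.000100 Rows_sent: 10  Rows_examined: 50000
  SELECT * FROM orders WHERE status = 'pending';
LOG

def parse_slow_query(entry)
  m = SLOW_QUERY.match(entry)
  return nil unless m

  record = m.named_captures
  # Mirrors the record_transformer expressions in the <filter> section.
  record['query_time_ms'] = record['query_time'].to_f * 1000
  record['slow_query'] = record['query_time'].to_f > 1.0
  record
end

record = parse_slow_query(SAMPLE_ENTRY)
puts record['user']           # app
puts record['query_time_ms']  # 2500.0
puts record['query']          # SELECT * FROM orders WHERE status = 'pending'
```

Captured values arrive as strings, which is why both derived fields call `to_f` before doing any arithmetic or comparison.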

A common pattern uses Fluentd in a multi-tier architecture:

- Edge collection: Fluentd agents on application servers collect local logs

- Aggregation tier: Centralized Fluentd servers receive and process logs from agents

- Storage and analysis: Processed logs are sent to appropriate destinations

This approach provides scalability while minimizing network traffic and processing overhead.

Data engineers often implement environment-aware routing:

    <match production.**>
      @type copy
      <store>
        @type elasticsearch
        # Production ES cluster settings
      </store>
      <store>
        @type s3
        # Long-term archival settings
      </store>
    </match>

    <match staging.**>
      @type elasticsearch
      # Staging ES cluster settings with shorter retention
    </match>

    <match development.**>
      @type elasticsearch
      # Development ES cluster with minimal retention
    </match>

For real-time analytics, Fluentd can feed events into Kafka, where stream processing frameworks consume them:

    <match app.events.**>
      @type kafka2
      brokers kafka1:9092,kafka2:9092
      default_topic app_events
      <format>
        @type json
      </format>
      <buffer topic>
        @type memory
        flush_interval 1s
      </buffer>
    </match>
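
The buffer section above names topic as its chunk key. Assuming that key refers to a topic field in each record, with the default topic used when the field is absent, the grouping can be sketched as follows. The `chunk_by_topic` helper and the sample events are hypothetical.

```ruby
# Group events into per-topic chunks, falling back to a default topic.
DEFAULT_TOPIC = 'app_events'

def chunk_by_topic(events)
  events.group_by { |event| event.fetch('topic', DEFAULT_TOPIC) }
end

events = [
  { 'topic' => 'orders', 'id' => 1 },
  { 'id' => 2 },
  { 'topic' => 'orders', 'id' => 3 }
]

chunk_by_topic(events).each do |topic, chunk|
  puts "#{topic}: #{chunk.size} event(s)"
end
# orders: 2 event(s)
# app_events: 1 event(s)
```

Chunking by destination lets each flush send one batch to one topic instead of interleaving small writes across many.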

Fluentd and Logstash serve similar purposes, but they differ in several respects:

- Resource usage: Fluentd typically has a lighter footprint

- Ecosystem: Logstash integrates more deeply with the Elastic Stack

- Configuration: Fluentd uses a more structured, hierarchical approach

- Performance: Fluentd is often reported to perform better at scale, though results depend on workload and configuration

A common question is choosing between Fluentd and its lightweight sibling:

- Fluent Bit: Written in C, extremely lightweight, perfect for edge collection

- Fluentd: More feature-rich, better for complex processing, more plugins

- Hybrid approach: Many organizations use Fluent Bit at the edge and Fluentd as aggregators

A newer competitor, Vector, offers some differences:

- Language: Vector is written in Rust for memory safety

- Configuration: Vector emphasizes typed configuration

- Maturity: Fluentd has a longer track record and larger community

Fine-tuning buffers is critical for performance:

    <buffer>
      @type file
      path /var/log/fluentd/buffer
      flush_mode interval
      flush_interval 60s
      flush_thread_count 4
      chunk_limit_size 16MB
      # Caps the whole buffer at the size of 32 full chunks (32 x 16MB)
      total_limit_size 512MB
      retry_forever true
      retry_max_interval 30
      overflow_action block
    </buffer>
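
To see what retry_max_interval does in practice, the retry schedule can be approximated as exponential backoff with a cap. This sketch assumes an initial wait of 1 second and a backoff base of 2, and it ignores any randomization Fluentd may apply; the `retry_wait_seconds` helper is hypothetical.

```ruby
# Wait before the nth retry: initial wait * base^(n-1), capped at max_interval.
def retry_wait_seconds(attempt, initial_wait: 1, base: 2, max_interval: 30)
  [initial_wait * base**(attempt - 1), max_interval].min
end

schedule = (1..7).map { |n| retry_wait_seconds(n) }
p schedule  # [1, 2, 4, 8, 16, 30, 30]
```

With retry_forever enabled, the cap is what keeps a long outage from stretching the gap between attempts indefinitely: after the sixth retry, Fluentd would try again roughly every 30 seconds.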

For critical environments, Fluentd can run multiple workers and forward to more than one aggregator over TLS, so that losing a single node does not stop log delivery:

    <system>
      workers 4
      root_dir /path/to/working/directory
    </system>

    # Events reach this label only from plugins that set "@label @copy"
    <label @copy>
      <filter **>
        @type stdout
      </filter>
    </label>

    <match **>
      @type copy
      <store>
        @type forward
        # Traffic is split between the servers in proportion to their weights
        <server>
          name primary
          host 192.168.1.3
          port 24224
          weight 60
        </server>
        <server>
          name secondary
          host 192.168.1.4
          port 24224
          weight 40
        </server>
        transport tls
        tls_verify_hostname true
        tls_cert_path /path/to/certificate.pem
        <buffer>
          @type file
          path /var/log/fluentd/forward
          retry_forever true
        </buffer>
      </store>
    </match>
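
The effect of the weight settings, and of skipping a server that stops responding, can be illustrated with a small weighted round-robin sketch. This is not how Fluentd’s forward output is implemented internally; the `Server` struct, the `WeightedBalancer` class, and the `available` flag are assumptions for illustration.

```ruby
Server = Struct.new(:name, :weight, :available)

# Weighted round-robin: each server appears in the rotation in proportion
# to its weight, and unavailable servers are skipped.
class WeightedBalancer
  def initialize(servers)
    divisor = servers.map(&:weight).reduce(:gcd)
    @rotation = servers.flat_map { |s| [s] * (s.weight / divisor) }
    @cursor = 0
  end

  # Returns the next available server, or nil when every server is down.
  def pick
    @rotation.size.times do
      server = @rotation[@cursor % @rotation.size]
      @cursor += 1
      return server if server.available
    end
    nil
  end
end

servers = [Server.new('primary', 60, true), Server.new('secondary', 40, true)]
balancer = WeightedBalancer.new(servers)
p Array.new(5) { balancer.pick.name }
# ["primary", "primary", "primary", "secondary", "secondary"]

servers.first.available = false # simulate a failed health check
p Array.new(3) { balancer.pick.name }
# ["secondary", "secondary", "secondary"]
```

Weights of 60 and 40 reduce to a 3:2 rotation. When one server is down, the remaining one absorbs all traffic, and the file buffer holds events if both are unreachable.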

For specialized needs, you can write custom plugins in Ruby. A minimal filter plugin looks like this:

    require 'fluent/plugin/filter'

    module Fluent
      module Plugin
        class MyCustomFilter < Filter
          Fluent::Plugin.register_filter('my_custom_filter', self)

          def configure(conf)
            super
            # configuration code
          end

          def filter(tag, time, record)
            # processing logic
            # return modified record or nil to drop it
            record
          end
        end
      end
    end

When implementing Fluentd in production environments:

- Enable TLS for all communications between agents and servers

- Use secure credentials storage for destination authentication

- Implement proper access controls on log files and directories

- Sanitize sensitive data before it leaves the collection point

- Audit log access to maintain compliance
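
Sanitizing at the collection point can be as simple as a masking step applied to each record before it is forwarded. The sketch below is plain Ruby rather than a Fluentd plugin; the `SENSITIVE_KEYS` list, the email pattern, and the `sanitize` helper are assumptions, and a real deployment would need rules matched to its own data.

```ruby
# Field names whose values are always redacted (compared case-insensitively).
SENSITIVE_KEYS = %w[password token authorization].freeze
# Deliberately simple email pattern, for illustration only.
EMAIL_PATTERN = /[\w.+-]+@[\w-]+(?:\.[\w-]+)+/

def sanitize(record)
  record.to_h do |key, value|
    if SENSITIVE_KEYS.include?(key.downcase)
      [key, '[REDACTED]']
    elsif value.is_a?(String)
      [key, value.gsub(EMAIL_PATTERN, '[EMAIL]')]
    else
      [key, value]
    end
  end
end

p sanitize({ 'user' => 'alice', 'Password' => 'hunter2',
             'message' => 'reset sent to alice@example.com' })
```

Masking before the event leaves the host means the unredacted value never reaches buffers, aggregators, or storage downstream.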

A key aspect often overlooked is monitoring the monitoring system:

- Export internal metrics to Prometheus or other monitoring systems

- Set up alerts for buffer overflow or growing queue sizes

- Log Fluentd’s own logs to track errors and issues

- Monitor resource usage to prevent Fluentd from impacting application performance
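
An alert on growing queues can start as a small check over per-plugin metrics. The JSON below is a hand-written sample, and its field names (`plugin_id`, `buffer_queue_length`, `retry_count`) are assumptions to verify against the metrics your Fluentd version actually exposes; the thresholds and the `plugins_needing_attention` helper are hypothetical.

```ruby
require 'json'

# Hand-written sample shaped like a per-plugin metrics payload.
SAMPLE_METRICS = <<~'JSON_DOC'
  {"plugins": [
    {"plugin_id": "es_out", "buffer_queue_length": 3, "retry_count": 0},
    {"plugin_id": "kafka_out", "buffer_queue_length": 120, "retry_count": 7}
  ]}
JSON_DOC

# Return the ids of plugins whose queue length or retry count exceeds a limit.
def plugins_needing_attention(json_text, max_queue_length: 100, max_retries: 5)
  flagged = JSON.parse(json_text).fetch('plugins').select do |plugin|
    plugin.fetch('buffer_queue_length', 0) > max_queue_length ||
      plugin.fetch('retry_count', 0) > max_retries
  end
  flagged.map { |plugin| plugin['plugin_id'] }
end

p plugins_needing_attention(SAMPLE_METRICS)  # ["kafka_out"]
```

A queue that keeps growing usually means the destination is slower than the source, so it is worth alerting on the trend well before the buffer limit is reached.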

For large-scale deployments:

- Shard by log types to distribute processing load

- Implement appropriate buffer settings for high-volume sources

- Consider multi-stage deployments with specialized aggregation layers

- Use load balancing for sending logs to multiple collectors

- Scale horizontally by adding more aggregator instances

As data engineering evolves, Fluentd continues to adapt:

- Enhanced Kubernetes integration for deeper container insights

- Improved streaming analytics capabilities

- Better integration with observability platforms

- Enhanced security features for compliance requirements

- Performance optimizations for handling ever-increasing data volumes

Fluentd has earned its place as a cornerstone of modern logging infrastructure through its elegant design, robust performance, and unmatched flexibility. For data engineering teams, it represents an ideal solution to the challenging problem of unifying diverse data streams into coherent, processable flows.

Its tag-based routing, pluggable architecture, and unified data format create a powerful foundation for building sophisticated logging pipelines. Whether you’re working with containerized microservices, traditional server applications, or cloud-based infrastructure, Fluentd provides the tools needed to collect, process, and route your log data efficiently.

By implementing Fluentd as part of your data engineering stack, you can create a unified logging layer that streamlines operations, enhances troubleshooting, and enables powerful analytics on your log data. As the volume and importance of log data continue to grow, tools like Fluentd that can handle this data reliably and at scale become increasingly indispensable.
